Vision and Hearing In Action

ERC Advanced Grant #340113

VHIA studies the fundamentals of audio-visual perception for human-robot interaction


Principal Investigator: Radu Horaud | Duration: 1/2/2014 – 31/1/2019 (five years) | ERC funding: 2,497,000€

Research pages

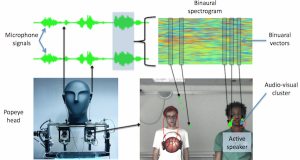

Audio-visual speaker diarization

Audio-visual multiple speaker tracking

EM algorithms for weighted-data clustering

Eye gaze and visual focus of attention

Deep mixture of linear inverse regression (DMLIR)

Separation of time-varying audio mixtures

Supervised sound-source localization

Recently Submitted Papers

S. Lathuilière, B. Massé, P. Mesejo, and R. Horaud (October 2017). Neural-Network Reinforcement Learning for Audio-Visual Gaze Control in Human-Robot Interaction. Submitted to Pattern Recognition Letters.

R. T. Marriott, A. Pashevich, and R. Horaud (September 2017). Plane-Extraction from Depth-Data Using a Gaussian Mixture Regression Model. Submitted to Pattern Recognition Letters. https://arxiv.org/abs/1710.01925

X. Li, R. Horaud and S. Gannot. (June 2017). Blind Multi-Channel Identification and Equalization for Dereverberation and Noise Reduction based on Convolutive Transfer Function. Submitted to IEEE/ACM Transactions on Audio, Speech, and Language Processing. https://arxiv.org/abs/1706.03652

B. Massé, S. Ba and R. Horaud. (March 2017). Tracking Gaze and Visual Focus of Attention of People Involved in Social Interaction. Submitted to IEEE Transactions on Pattern Analysis and Machine Intelligence. https://arxiv.org/abs/1703.04727

News

September 2017, novel technology paper award finalist: Yutong Ban (PhD student) and his co-authors were finalists for the Novel Technology Paper Award at the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, Canada.

July 2017, paper accepted for publication: X. Li, L. Girin, S. Gannot and R. Horaud. (October 2016). Multiple-Speaker Localization Based on Direct-Path Features and Likelihood Maximization with Spatial Sparsity Regularization. IEEE/ACM Transactions on Audio, Speech, and Language Processing. https://arxiv.org/abs/1611.01172

June 2017, paper accepted for publication: G. Evangelidis and R. Horaud. Joint Alignment of Multiple Point Sets with Batch and Incremental Expectation-Maximization. IEEE Transactions on Pattern Analysis and Machine Intelligence. https://arxiv.org/abs/1609.01466.

May 2017, ERC PoC grant: The PI and his team were awarded an ERC Proof of Concept grant for the project VHIALab submitted in January 2017.

March 2017, best paper award: Israel Dejene Gebru (PhD student) and his co-authors received the best paper award at IEEE HSCMA’17.

January 2017: R. Horaud was invited to join the editorial board of the IEEE Robotics and Automation Letters as an associate editor.

January 2017, paper accepted for publication: V. Drouard, R. Horaud, A. Deleforge, S. Ba, and G. Evangelidis. (March 2016). Robust Head Pose Estimation Based on Partially-Latent Mixture of Linear Regression. IEEE Transactions on Image Processing. http://arxiv.org/abs/1603.09732

December 2016, paper accepted for publication: I. D. Gebru, S. Ba, X. Li and R. Horaud. Audio-Visual Speaker Diarization Based on a Spatiotemporal Bayesian Model. IEEE Transactions on Pattern Analysis and Machine Intelligence. http://arxiv.org/abs/1603.09725

July 2016, paper accepted for publication: X. Li, L. Girin, R. Horaud, S. Gannot. Estimation of the Direct-Path Relative Transfer Function for Supervised Sound-Source Localization. IEEE/ACM Transactions on Audio, Speech, and Language Processing. http://arxiv.org/abs/1509.03205.

July 2016, paper accepted for publication: S. Ba, X. Alameda-Pineda, A. Xompero, R. Horaud. An On-line Variational Bayesian Model for Multi-Person Tracking from Cluttered Scenes. http://arxiv.org/abs/1509.01520

June 2016, paper accepted for publication: R. Horaud, G. Evangelidis, M. Hansard, C. Ménier. An Overview of Range Scanners and Depth Cameras Based on Time-of-Flight Technologies. Machine Vision and Applications. https://hal.inria.fr/hal-01325045.

May 2016, award: A. Deleforge (former PhD student), F. Forbes (collaborator), and R. Horaud received the 2016 Award for Outstanding Contributions in Neural Systems for their paper: “Acoustic Space Learning for Sound-source Separation and Localization on Binaural Manifolds,” International Journal of Neural Systems, 2015, 25:1, 1440003 (21 pages), http://arxiv.org/abs/1402.2683

April 2016, paper accepted for publication: D. Kounades-Bastian, L. Girin, X. Alameda-Pineda, S. Gannot, R. Horaud. A Variational EM Algorithm for the Separation of Time-Varying Convolutive Audio Mixtures. IEEE/ACM Transactions on Audio, Speech, and Language Processing. http://arxiv.org/abs/1510.04595.

January 2016, paper accepted for publication: I. D. Gebru, X. Alameda-Pineda, F. Forbes, R. Horaud. EM Algorithms for Weighted-Data Clustering with Application to Audio-Visual Scene Analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence. http://arxiv.org/abs/1509.01509.

October 2015, award: Dionyssos Kounades-Bastian (PhD student in the PERCEPTION team) received the best student paper award at IEEE WASPAA’15.

September 2015, award: Vincent Drouard (PhD student in the PERCEPTION team) received the best student paper award (2nd place) at IEEE ICIP’15.

Project “official” summary (09/2013)

The objective of VHIA is to elaborate a holistic computational paradigm of perception and of perception-action loops. We propose to develop a completely novel twofold approach: (i) learn from mappings between auditory/visual inputs and structured outputs, and from sensorimotor contingencies, and (ii) execute perception-action interaction cycles in the real world with a humanoid robot. VHIA will launch and achieve a unique fine coupling between methodological findings and proof-of-concept implementations using the consumer humanoid NAO manufactured in Europe. The proposed multimodal approach is in strong contrast with current computational paradigms that are based on unimodal biological theories. These theories have hypothesized a modular view of perception, postulating that there are quasi-independent and parallel perceptual pathways in the brain. VHIA takes a radically different view from today’s audiovisual fusion models that rely on clean-speech signals and on accurate frontal images of faces. These models assume that videos and sounds are recorded with hand-held or head-mounted sensors, and hence there is a human in the loop whose intentions inherently supervise both perception and interaction. Our approach deeply contradicts the belief that complex and expensive humanoids (often manufactured in Japan) are required to implement research ideas. VHIA’s methodological program addresses extremely difficult issues, such as how to build a joint audiovisual space from heterogeneous, noisy, ambiguous and physically different visual and auditory stimuli, how to properly model seamless interaction based on perception and action, how to deal with high-dimensional input data, and how to achieve robust and efficient human-humanoid communication tasks through a well-thought-out tradeoff between offline training and online execution.
VHIA bets on the high-risk idea that in the next decades robot technology will have a considerable social and economic impact and that there will be millions of humanoids in our homes, schools and offices, which will be able to naturally communicate with us.

Perception team (current and past) members involved in VHIA

Radu Horaud (PI), Laurent Girin (professor), Xavi Alameda-Pineda (researcher), Georgios Evangelidis (researcher, 2014-2015), Sileye Ba (researcher, 2014-2016), Soraya Arias (robotics engineer), Israel Dejene-Gebru (PhD student, 2013-2017), Vincent Drouard (PhD student, 2014-2017), Benoit Massé (PhD student), Stéphane Lathuilière (PhD student), Dionyssos Kounades-Bastian (PhD student, 2014-2017), Yutong Ban (PhD student), Xiaofei Li (researcher), Pablo Mesejo-Santiago (PhD student), Guillaume Sarrazin (software development and robotics engineer), Bastien Mourgue (signal processing and software development engineer), Guillaume Delorme (PhD student), Sylvain Guy (PhD student).

Collaborators

Florence Forbes (INRIA), Sharon Gannot (Bar-Ilan University), Xavi Alameda-Pineda (University of Trento), Antoine Deleforge (INRIA Rennes), Olivier Alata (Mines Saint-Etienne), Christine Evers (Imperial College), Yoav Schechner (Technion).

All our articles are available for download on HAL (open archive)