The Kinovis Multiple-Speaker Tracking Dataset

The Kinovis multiple speaker tracking (Kinovis-MST) datasets contain live acoustic recordings of multiple moving speakers in a reverberant environment. The data were recorded in the Kinovis multiple-camera laboratory at INRIA Grenoble Rhône-Alpes. The room size is 10.2 m × 9.9 m × 5.6 …

Category: Data

Mar 15

The AVDIAR Dataset

AVDIAR: A Dataset for Audio-Visual Diarization. Publicly available dataset released in conjunction with the paper “Audio-Visual Speaker Diarization Based on Spatiotemporal Bayesian Fusion”. The AVDIAR dataset is only available for non-commercial use. AVDIAR (Audio-Visual Diarization) is a dataset dedicated to the audio-visual analysis of conversational scenes. Publicly available …

Oct 08

The AVTRACK-1 Dataset

We release the AVTRACK-1 dataset: audio-visual recordings used in the paper [1]. This dataset can only be used for scientific purposes. The dataset is fully annotated with the image locations of the active speakers and the other people present in the video. The annotated locations correspond to bounding boxes. Each person is given a unique anonymous …

Sep 03

The NAL dataset

The NAL (NAO Audio Localization) dataset is composed of sounds recorded with a NAO v5 robot. General description of the recording setup and environment: NAO is placed on the floor in different rooms and at different positions in each room. The four microphones are embedded in the robot head, 45 cm above the floor. …

Oct 14

MIXCAM Dataset

The MIXCAM dataset consists of five scenes captured by a three-sensor camera rig comprising a low-resolution (176×144) time-of-flight (range) camera and two high-resolution (1624×1224) color cameras (TOF+Stereo). The TOF sensor is the SR4000 model by Mesa Imaging. The inter-sensor synchronization is very accurate owing to specific hardware developed by 4D View Solution. We also …

Feb 12

The NAR dataset

NAR is a dataset of audio recordings made with the humanoid robot Nao in real-world conditions for sound recognition benchmarking. All the recordings were collected using the robot’s microphone and thus have the following characteristics: recorded with low-quality sensors (300 Hz – 18 kHz bandpass); suffering from typical fan noise from the robot’s internal hardware; recorded in multiple real domestic environments (no special acoustic characteristics, reverberations, presence …

Dec 19

The AVASM dataset

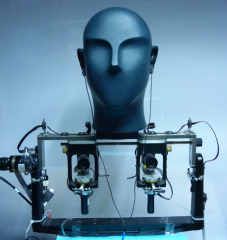

The AVASM dataset is a set of audio-visual recordings made with the dummy head POPEYE in real-world conditions. It consists of binaural recordings of a single static sound source emitting white noise or speech from different positions. The sound source is a loudspeaker equipped with a visual target, manually placed at different positions around the system. Each …

Dec 19

The CAMIL dataset

The CAMIL dataset is a unique set of audio recordings made with the robot POPEYE. The dataset was gathered in order to investigate audio-motor contingencies from a computational point of view and to experiment with new auditory models and techniques for Computational Auditory Scene Analysis. Version 0.1 of the dataset was built in November 2010, and …

Dec 15

The RAVEL Dataset

The RAVEL dataset (Robots with Auditory and Visual Abilities) contains typical scenarios useful in the development and benchmarking of human-robot interaction (HRI) systems. The RAVEL dataset is freely accessible for research purposes and for non-commercial applications. The dataset is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License. RAVEL website for download: http://perception.inrialpes.fr/datasets/Ravel/ Please cite the following …
