Current projects (2020-):

- H2020 ICT project SPRING — Socially Pertinent Robots in Gerontological Healthcare (2020-2023)

- ANR JCJC (young investigator project awarded to Xavier Alameda-Pineda) ML3RI — Multi-Modal Multi-person Low-Level Learning for Robot Interaction (2020-2023)

- Audio-Visual Machine Perception and Interaction for Companion Robots, ANR MIAI chair, Université Grenoble Alpes (2020-2023)

Completed projects:

- Marie-Curie Research Training Network VISIONTRAIN (2005-2009)

- ICT-FP6 project POP (2006-2009)

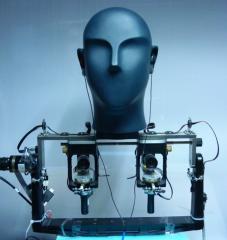

- ICT-FP7 project HUMAVIPS (2010-2013)

- Reconstruction with depth and color cameras for 3D stereoscopic consumer displays. Project funded by Samsung Electronics (2010-2013)

- ANR-BLANC MIXCAM project (2014-2016)

- ICT-FP7 project EARS (2014-2017)

- Multi-modal speaker localization and tracking. Project funded by Samsung Electronics (2016-2017)

- ERC Advanced Grant VHIA (2014-2019)

- ERC Proof of Concept VHIALab (2018-2019)

Academic partners:

- The Czech Technical University in Prague (2005-2013 and 2020-2023)

- Heriot-Watt University, Edinburgh, UK (2020-2023)

- Hôpital Broca, Paris, France (2020-2023)

- University of Sheffield (2006-2009)

- University of Coimbra (2006-2009)

- Bielefeld University (2010-2013)

- IDIAP, Martigny (2010-2013)

- The Technion (2005-2015)

- Queen Mary University of London (2012-2016)

- Bar-Ilan University (2013-)

- University of Trento (2014-)

- University of Cordoba (2014-)

- University of Patras (2013-2016)

- Ben-Gurion University (2014-2016)

- Imperial College (2014-)

- Erlangen-Nuremberg University (2014-2016)

- Humboldt University (2014-2016)

- Polytechnic University of Bucharest (2014)

Industrial partners:

- ERM Automatismes, Carpentras, France (2020-2023)

- PAL Robotics, Barcelona, Spain (2020-2023)

- Samsung Digital Media and Communications R&D Center, Seoul, Korea (2016-2017)

- Samsung Advanced Institute of Technology, Seoul, Korea (2010-2013)

- 4D View Solutions, Grenoble, France (2007-)

- Aldebaran Robotics, Paris, France (2010-2013)

- SoftBank Robotics Europe (2014-2016)