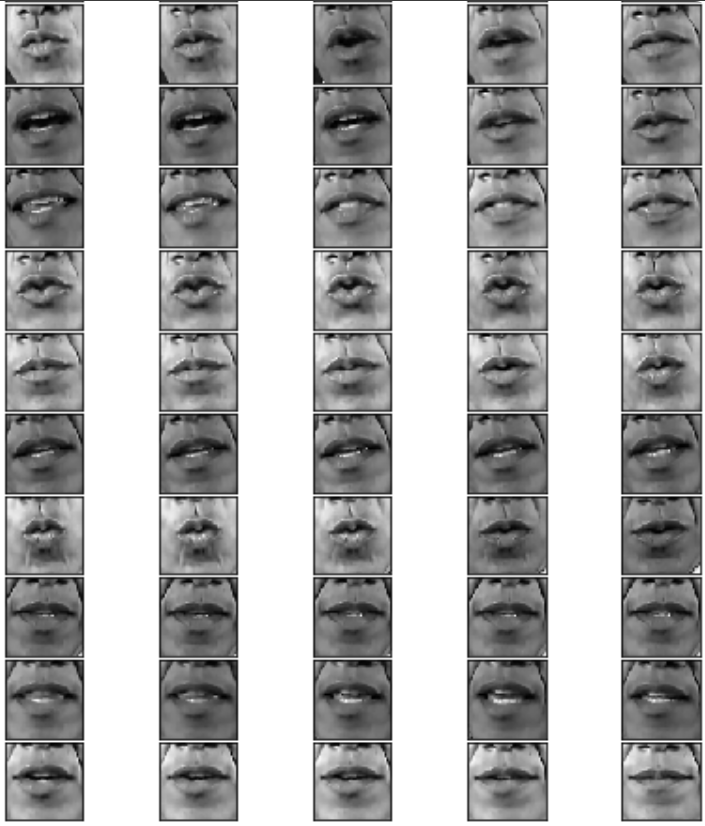

This figure shows a few frames from a sample video. Only the frames in the last three rows are well aligned; the remaining frames need to be aligned.

In many audio-visual applications, e.g., speech enhancement and speech recognition, it is desirable to have aligned images of the mouth region so that a deep neural network can extract reliable visual features. Indeed, the quality of the extracted visual features affects the performance of these applications. In practice, however, a speaker’s face is constantly moving, so the mouth regions are not aligned across an image sequence. As a result, it is difficult to learn features that characterize the mouth deformations caused by speech, as opposed to those caused by head motion, and to use these features for audio-visual fusion.

In this master's project, we propose to investigate methods for aligning mouth regions prior to learning visual features for audio-visual speech enhancement. The basic idea is to extract 3D facial landmarks and to use them to estimate the rigid motions between images. The estimated rigid-motion parameters can then be used to compute a set of homographies that map the mouth regions onto a frontal view of the speaker’s face, so that they are aligned. As an application, we will consider the problem of audio-visual speech enhancement. We have already developed an audio-visual speech enhancement method that is based on a variational auto-encoder (VAE) and that expects aligned images of a speaking face [1]. It will therefore be possible to thoroughly assess the proposed face alignment method by measuring its effect on this existing audio-visual speech enhancement algorithm.
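As a rough illustration of the rigid-motion step only, the sketch below estimates the rotation and translation between two sets of corresponding 3D landmarks using the Kabsch (orthogonal Procrustes) least-squares solution. This choice of estimator is an assumption made for illustration and is not necessarily the method the project will adopt. The function names `estimate_rigid_motion` and `align_landmarks`, the use of NumPy, and the `(N, 3)` landmark layout are likewise hypothetical.

```python
import numpy as np


def estimate_rigid_motion(src, dst):
    """Return (R, t) minimizing sum_i ||R @ src[i] + t - dst[i]||^2.

    src, dst: arrays of shape (N, 3) holding corresponding 3D landmarks
    (assumed layout; N >= 3 non-collinear points are needed).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)

    # Center both point sets on their centroids.
    src_mean = src.mean(axis=0)
    dst_mean = dst.mean(axis=0)

    # 3x3 cross-covariance between the centered point sets.
    H = (src - src_mean).T @ (dst - dst_mean)

    # Kabsch solution: R = V * diag(1, 1, d) * U^T, where d = +/-1
    # forces det(R) = +1 so that R is a rotation, not a reflection.
    U, _, Vt = np.linalg.svd(H)
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T

    # Translation that maps the source centroid onto the target centroid.
    t = dst_mean - R @ src_mean
    return R, t


def align_landmarks(landmarks, reference):
    """Express `landmarks` in the pose of `reference` (both of shape (N, 3))."""
    R, t = estimate_rigid_motion(landmarks, reference)
    return np.asarray(landmarks, dtype=float) @ R.T + t
```

Calling `align_landmarks(frame_landmarks, reference_landmarks)` on each frame would express that frame's landmarks in the pose of a chosen reference (e.g., frontal) frame. Turning the estimated motion into an image-space homography additionally requires camera intrinsics and a planarity assumption for the mouth region, which this sketch does not address.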

Start and end dates: February/March 2020 for a duration of up to six months.

Reference:

[1] M. Sadeghi, S. Leglaive, X. Alameda-Pineda, L. Girin, and R. Horaud, “Audio-visual Speech Enhancement Using Conditional Variational Auto-Encoder,” August 2019.

Contact: Radu.Horaud@inria.fr