Xavier Alameda-Pineda and his collaborators from the University of Trento received the 2020 Nicolas D. Georganas Best Paper Award, which recognizes the most significant work published in ACM Transactions on Multimedia Computing, Communications, and Applications (ACM TOMM) in a given calendar year, for the paper Increasing image memorability with neural style transfer, vol. 15, Issue 2, January 2019, by A. Siarohin, …

Category: News

Nov 22

Paper published in IEEE Transactions on PAMI

The paper Variational Bayesian Inference for Audio-Visual Tracking of Multiple Speakers has been published in the IEEE Transactions on Pattern Analysis and Machine Intelligence (one of the journals with the highest impact scores in the computational intelligence category). This work is part of the Ph.D. thesis of Yutong Ban, now with the Computer Science and Artificial …

Nov 07

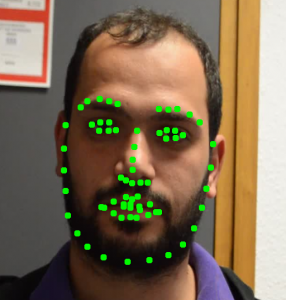

[Closed] Master Internship on face alignment for audio-visual speech enhancement

In many audio-visual applications, e.g., speech enhancement and speech recognition, it is desirable to have aligned images of the mouth region such that a deep neural network can extract reliable visual features. Indeed, the quality of the extracted visual features impacts the performance of audio-visual based applications. In reality, however, a speaker’s face is constantly …

Nov 05

Master Internship on Deep Bayesian Filtering

In signal processing and in computer vision, some of the most powerful tracking methods are based on the Kalman filter. The latter belongs to the unsupervised class of machine learning techniques and may well be viewed either as the simplest dynamic Bayesian network (DBN) or as a state-space model: it recursively predicts over time a …
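As a rough illustration of the recursive predict/update cycle mentioned above, here is a minimal sketch of a one-dimensional Kalman filter. The function name `kalman_1d`, the constant-state motion model, and the noise variances are assumptions chosen for illustration; they are not taken from the internship description.

```python
def kalman_1d(measurements, process_var=1e-3, meas_var=0.25, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a (hypothetical) constant-state model.

    x: current state estimate, p: variance of that estimate.
    All default values are illustrative assumptions.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the state is assumed constant, so only the
        # uncertainty grows by the process noise variance.
        p = p + process_var

        # Update: blend prediction and measurement using the Kalman gain.
        k = p / (p + meas_var)
        x = x + k * (z - x)
        p = (1.0 - k) * p

        estimates.append(x)
    return estimates


if __name__ == "__main__":
    # Feed a constant signal; the estimate should move from x0 toward 1.0.
    print(kalman_1d([1.0] * 5))
```

Each iteration uses only the previous estimate and the new measurement, which is what makes the filter recursive.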

Oct 08

[Closed] Master Internship on Robust Deep Regression

Topic: In this Master thesis we address the problem of how to robustly train a ConvNet for regression, or deep robust regression [1,2]. Traditionally, deep regression employs the L2 loss function [3], known to be sensitive to outliers, i.e. samples that either lie at an abnormal distance away from the majority of the training samples, …
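To make the outlier sensitivity of the L2 loss concrete, the sketch below compares it with the Huber loss on a small and a large residual. The Huber loss is used here only as a well-known robust alternative for illustration; it is not stated to be the approach studied in the internship, and the function names and the threshold `delta` are assumptions.

```python
def l2_loss(residual):
    """Squared-error loss: grows quadratically with the residual."""
    return 0.5 * residual ** 2


def huber_loss(residual, delta=1.0):
    """Huber loss: quadratic near zero, linear beyond the threshold delta."""
    a = abs(residual)
    if a <= delta:
        return 0.5 * a ** 2
    return delta * (a - 0.5 * delta)


if __name__ == "__main__":
    for r in (0.5, 10.0):  # an inlier-sized and an outlier-sized residual
        print(r, l2_loss(r), huber_loss(r))
```

For the small residual both losses agree, while for the large one the L2 loss is several times larger, so a single outlier can dominate the training objective.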

Oct 08

[Closed] Researcher on Deep and Reinforcement Learning for Robotics

Starting Date: February 1st, 2020. Funding: The H2020 ICT SPRING Project. Contact Point: Xavier Alameda-Pineda. Duration: from 2 up to 4 years. To apply: https://jobs.inria.fr/public/classic/fr/offres/2019-02083 General Context: SPRING – Socially Pertinent Robots in Gerontological Healthcare – is a 4-year R&D project fully funded by the European Commission under the H2020 framework. SPRING aims to develop …

Oct 08

[Closed] Engineer on Deep Learning and Cloud Computing

Starting Date: November 1st, 2019 – February 1st, 2020. Funding: The H2020 ICT SPRING Project. Contact Point: Xavier Alameda-Pineda. Duration: 2 years and up to 4 years. To apply: https://jobs.inria.fr/public/classic/fr/offres/2019-02081 General Context: SPRING – Socially Pertinent Robots in Gerontological Healthcare – is a 4-year R&D project fully funded by the European Commission under the H2020 framework. SPRING …

Oct 08

[Closed] Engineer on Deep Learning and Robotics

Starting Date: November 1st, 2019 – February 1st, 2020. Duration: 2 years and up to 4 years. Funding: The H2020 ICT SPRING Project. Contact Point: Xavier Alameda-Pineda. To apply: https://jobs.inria.fr/public/classic/fr/offres/2019-02082 General Context: SPRING – Socially Pertinent Robots in Gerontological Healthcare – is a 4-year R&D project fully funded by the European Commission under the H2020 framework. …

Sep 27

H2020 Project SPRING awarded!

The Perception team is happy to announce that a new project has been awarded by the European Union under the H2020-ICT program. The main objective of SPRING (Socially Pertinent Robots in Gerontological Healthcare) is the development of socially assistive robots with the capacity of performing multimodal multiple-person interaction and open-domain dialogue. In more detail: The scientific objective of …