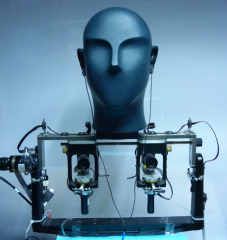

The AVASM dataset is a set of audio-visual recordings made with the dummy head POPEYE in real-world conditions. It consists of binaural recordings of a single static sound source emitting white noise or speech from different positions. The sound source is a loudspeaker equipped with a visual target, manually placed at different positions around the system. Each recording is annotated with the pixel position of the emitter in the left camera image. These associations make it possible to learn a mapping from the space of binaural auditory cues to the 2D image plane, enabling precise sound source localization in 2D. A realistic audio-visual test scenario, in which a person counts in front of the setup, is also provided to evaluate the performance of learning methods on realistic data. All recording sessions were held at INRIA Grenoble Rhône-Alpes and led by Antoine Deleforge.
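The annotated (binaural recording, pixel position) pairs can be used to learn a cue-to-image mapping in many ways. As a generic illustration only, and not the method used by the dataset authors, the minimal sketch below computes a broadband interaural level difference (ILD) and fits a k-nearest-neighbour regressor from cue vectors to pixel coordinates. The names `ild_db` and `KNNCueToPixel`, the choice of cues, and the toy data are hypothetical assumptions; real cue extraction from AVASM recordings would typically be done per frequency band.

```python
import math
from typing import List, Sequence, Tuple

Cue = Tuple[float, ...]      # hypothetical binaural cue vector (e.g. ILD, ITD)
Pixel = Tuple[float, float]  # (u, v) position in the left camera image


def ild_db(left: Sequence[float], right: Sequence[float], eps: float = 1e-12) -> float:
    """Broadband interaural level difference in dB: 10*log10(E_left / E_right)."""
    e_left = sum(x * x for x in left)
    e_right = sum(x * x for x in right)
    # eps avoids division by zero / log of zero on silent channels.
    return 10.0 * math.log10((e_left + eps) / (e_right + eps))


class KNNCueToPixel:
    """Hypothetical k-nearest-neighbour regression from cue vectors to pixels."""

    def __init__(self, k: int = 3) -> None:
        if k < 1:
            raise ValueError("k must be at least 1")
        self.k = k
        self.cues: List[Cue] = []
        self.pixels: List[Pixel] = []

    def fit(self, cues: Sequence[Cue], pixels: Sequence[Pixel]) -> "KNNCueToPixel":
        if len(cues) != len(pixels) or not cues:
            raise ValueError("need the same non-zero number of cues and pixels")
        self.cues = [tuple(c) for c in cues]
        self.pixels = [(float(p[0]), float(p[1])) for p in pixels]
        return self

    def predict(self, cue: Cue) -> Pixel:
        # Rank training samples by Euclidean distance in cue space.
        order = sorted(range(len(self.cues)), key=lambda i: math.dist(cue, self.cues[i]))
        nearest = order[: self.k]
        # Average the pixel positions of the k closest training samples.
        u = sum(self.pixels[i][0] for i in nearest) / len(nearest)
        v = sum(self.pixels[i][1] for i in nearest) / len(nearest)
        return (u, v)


if __name__ == "__main__":
    # Toy, made-up training pairs (not AVASM data).
    train_cues = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
    train_pixels = [(100.0, 100.0), (200.0, 100.0), (100.0, 200.0)]
    model = KNNCueToPixel(k=1).fit(train_cues, train_pixels)
    print(model.predict((9.0, 1.0)))  # (200.0, 100.0)
    print(round(ild_db([2.0, 2.0], [1.0, 1.0]), 2))  # 6.02
```

Nearest-neighbour regression is chosen here only because it needs no training step beyond storing the annotated pairs; it interpolates poorly between sparse source positions, which is one reason more structured regression methods are usually preferred for this task.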

The AVASM dataset is freely accessible for scientific research purposes and for non-commercial applications.

- Download training data

- Download test loudspeaker data

- Download person-live scenarios: