Author's posts

Oct 28

(closed) Master Project: Robot Walking in a Piecewise Planar World

Short Description: Companion robots, such as the humanoid robot NAO manufactured by Aldebaran Robotics, should be able to move on non-planar surfaces, for example when going up or down stairs. This implies that the robot is able to perceive the floor and to extract piecewise planar surfaces, such as the stairs, independently of the floor type. This …

Oct 08

Model-Based Clustering Using Color and Depth Information, by Olivier Alata

Tuesday, October 14, 2014, 11:00 am to 12:00 pm, room F107, INRIA Montbonnot. Seminar by Olivier Alata, Université Jean Monnet, Saint-Etienne. Abstract: Access to 3D images at a reasonable frame rate is now widespread, thanks to recent advances in low-cost depth sensors as well as efficient methods to compute 3D …

Oct 07

(closed) Master Project: Audio-Visual Event Localization with the Humanoid Robot NAO

Short Description: The PERCEPTION team investigates the computational principles underlying human-robot interaction. Under this broad topic, this master project will investigate the use of computer vision and audio signal processing methods enabling a robot to localize events that are both seen and heard, such as a group of people engaged in a conversation or in an informal …

Jun 28

(closed) Fully Funded PhD Positions to Start in September/October 2014

July 16th, 2014: All the positions have been filled; the call for applicants is closed. The PERCEPTION group is seeking PhD students to develop advanced methods for activity recognition and human-robot interaction based on joint visual and auditory processing. We are particularly interested in students with a strong background in computer vision, statistical machine learning, and auditory …

Jun 24

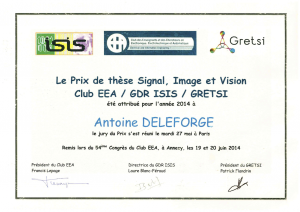

Antoine Deleforge Received the “Signal, Image, Vision” Best PhD Thesis Award!

Antoine Deleforge, Master and Ph.D. student in the PERCEPTION team (2009-2013), received the 2014 “Signal, Image, Vision” best PhD thesis award for his thesis entitled “Acoustic Space Mapping: A Machine Learning Approach to Sound Source Separation and Localization”. For more information about Antoine’s work, please click here. Since January 2014, Antoine has been a postdoctoral researcher at …

Apr 10

The gtde MATLAB toolbox

The gtde toolbox is a set of MATLAB functions for localizing sound sources from time delay estimates. From the sound recorded by the microphones of any non-coplanar, arbitrarily shaped microphone array, the toolbox can be used to robustly recover the position of the sound source and the time delay estimates associated with it. This piece of …

Feb 28

Recent Advances in Human-centric Video Understanding, by Manuel-Jesus Marin-Jimenez

Thursday, March 20, 2014, 2:30 to 3:30 pm, room F107, INRIA Montbonnot. Seminar by Manuel-Jesus Marin-Jimenez, University of Cordoba, Spain. In this seminar I will present recent advances in the field of visual content analysis focused on people. In particular, we are interested in understanding videos containing people-to-people interaction. Firstly, we will approach a …

Jan 07

Complex scene perception: contextual estimation of dynamic interacting variables, by Sileye Ba

Monday, January 13, 2014, 2:00 to 3:00 pm, room F107, INRIA Montbonnot. Seminar by Sileye Ba, RN3D Innovation Lab, Marseille. In recent years, researchers have studied complex scene perception. Studying scene perception requires modelling multiple interacting variables. Computer vision and machine learning have led to significant advances with models integrating multimodal observations …

Dec 16

HORIZON 2020: The HUMAVIPS Project Paves the Way

A Horizon Magazine article about “Integrating Smart Robots into Society” refers to the recently completed EU HUMAVIPS project (2010-2013), coordinated by the PERCEPTION team. The article stresses the role of cognition in human-robot interaction and refers to HUMAVIPS as one of the FP7 projects that have paved the way towards the concept of audio-visual robotics.

Dec 15

The RAVEL Dataset

The RAVEL dataset (Robots with Auditory and Visual Abilities) contains typical scenarios useful in the development and benchmarking of human-robot interaction (HRI) systems. The RAVEL dataset is freely accessible for research purposes and for non-commercial applications. The dataset is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License. RAVEL website for download: http://perception.inrialpes.fr/datasets/Ravel/ Please cite the following …