I. Dataset of a human performing daily life activities in a scene with occlusions (containing synchronized RGB-D and motion capture data)

We provide a dataset of synchronized RGB-D and motion capture data recording the positions of the body parts of a person performing daily life activities in a scene with occlusions. The aim of this dataset is to provide a new benchmark for evaluating human body pose estimation systems in challenging situations.

It is the first RGB-D dataset that provides ground truth data for the body parts of a person moving in a scene with occlusions. The challenge this benchmark presents is to test the ability of a tracking system to handle severe occlusions and to resume tracking once the person is fully visible again.

II. Description

The dataset consists of 12 RGB-D video sequences of a person moving in front of a Kinect in a scene with obstacles. In addition to the depth and RGB images, each sequence contains synchronized ground truth data obtained from a Qualisys motion capture system with 8 infrared cameras. The ground truth contains the 3D positions of the following markers:

- Head (1 marker)

- Shoulder (2 markers for left and right)

- Elbow (2)

- Wrist (2)

- Neck (1)

- Torso (1)

- Hip (2)

- Knee (2)

- Foot (2)
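When processing the ground truth in code, it can be convenient to expand the list above into one label per marker (15 in total). The following C++ sketch does this; the label names are our own illustrative choice, and the order in which groundTruth.txt stores the markers is not specified here, so treat this ordering as an assumption and check it against the provided visualization code.

```cpp
#include <array>
#include <cstddef>
#include <string_view>

// Hypothetical labels: one entry per marker in the list above, with the
// paired markers expanded into left/right. The storage order used in
// groundTruth.txt is an ASSUMPTION; verify it before relying on indices.
constexpr std::array<std::string_view, 15> kMarkerNames = {
    "Head",
    "LeftShoulder", "RightShoulder",
    "LeftElbow",    "RightElbow",
    "LeftWrist",    "RightWrist",
    "Neck",
    "Torso",
    "LeftHip",      "RightHip",
    "LeftKnee",     "RightKnee",
    "LeftFoot",     "RightFoot",
};

constexpr std::size_t kMarkerCount = kMarkerNames.size();

// 1 + 2 + 2 + 2 + 1 + 1 + 2 + 2 + 2 markers, as listed above.
static_assert(kMarkerCount == 15, "the ground truth lists 15 markers");
```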

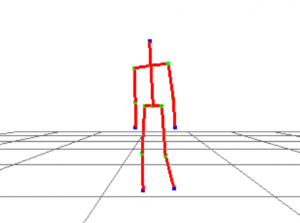

The marker positions are displayed on the following image:

III. Citation

If you use our dataset in your work, please cite the following publication:

@InProceedings{dib2015,

author = "Abdallah Dib and François Charpillet",

title = "Pose Estimation For A Partially Observable Human Body From RGB-D Cameras",

booktitle = "Proc. of the International Conference on Intelligent Robots and Systems (IROS)",

year = "2015"

}

IV. License

The data and source code of this benchmark are distributed under the Creative Commons Attribution 3.0 License.

V. Sample images

Below are some screenshots from the dataset showing a person moving in a scene with severe occlusions caused by scene objects.

VI. Data structure

Each synchronized sequence contains the following directories:

- rgb: contains the RGB images captured by the Kinect

- depth: contains the depth images captured by the Kinect

Each sequence directory also contains a groundTruth.txt file with the ground truth data for each pair of RGB/depth images returned by the Kinect. The last file in each sequence directory, rgb_depth_pos.txt, contains the RGB and depth timestamps of the Kinect camera.

The ground truth is already temporally synchronized with the Kinect, so no further action is required on your side. The synchronized image and ground truth data are stored in the groundTruth.txt file.
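The format of groundTruth.txt is not documented in this section, so the following C++ sketch rests on an explicit assumption: each line holds whitespace-separated fields, and its last 3 × 15 = 45 fields are the x, y, z coordinates of the 15 markers. Any leading fields (for example image names or timestamps) are kept as raw strings. The names `GroundTruthRecord`, `parseNumber` and `parseGroundTruthLine` are hypothetical; compare this layout against the parser in the provided visualization code before relying on it.

```cpp
#include <cstddef>
#include <optional>
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

struct Vec3 {
    double x, y, z;
};

// Hypothetical record for one line of groundTruth.txt.
struct GroundTruthRecord {
    std::vector<std::string> header;  // leading fields, kept as raw strings
    std::vector<Vec3> markers;        // one (x, y, z) triple per marker
};

// Converts a whole token to double; throws std::invalid_argument if the
// token is not numeric or has trailing characters (e.g. "12abc").
double parseNumber(const std::string& token) {
    std::size_t consumed = 0;
    const double value = std::stod(token, &consumed);
    if (consumed != token.size()) throw std::invalid_argument(token);
    return value;
}

// ASSUMED layout: whitespace-separated fields whose last 3 * markerCount
// fields are the marker coordinates. Returns std::nullopt if the line is
// too short or a coordinate field is not a number.
std::optional<GroundTruthRecord> parseGroundTruthLine(const std::string& line,
                                                      std::size_t markerCount = 15) {
    std::istringstream in(line);
    std::vector<std::string> tokens;
    for (std::string token; in >> token;) tokens.push_back(token);

    const std::size_t needed = 3 * markerCount;
    if (tokens.size() < needed) return std::nullopt;

    const std::size_t first = tokens.size() - needed;
    GroundTruthRecord record;
    record.header.assign(tokens.begin(),
                         tokens.begin() + static_cast<std::ptrdiff_t>(first));
    try {
        for (std::size_t i = first; i < tokens.size(); i += 3) {
            record.markers.push_back({parseNumber(tokens[i]),
                                      parseNumber(tokens[i + 1]),
                                      parseNumber(tokens[i + 2])});
        }
    } catch (const std::logic_error&) {  // std::invalid_argument or std::out_of_range
        return std::nullopt;
    }
    return record;
}
```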

The transformation between the Qualisys and Kinect coordinate frames is given by the following homogeneous matrix (translation in millimeters):

| Column 1 | Column 2 | Column 3 | Column 4 (translation, mm) |
|---|---|---|---|
| 0.8954580151977138 | 0.5103237861353643 | -0.0508805743920705 | -4507.420151100658 |
| -0.02123062193180733 | -0.04180508717005156 | -1.052294108532836 | 1019.225762091929 |
| -0.5224123887620519 | 0.8888625338840541 | -0.08742371986806169 | 3469.696756240115 |
| 0 | 0 | 0 | 1 |
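A marker position from the motion capture system can be mapped with the matrix above by treating it as the homogeneous point (x, y, z, 1). The C++ sketch below assumes that the matrix maps Qualisys coordinates into the Kinect frame (the text only says "between"); the names `Mat4`, `Point3`, `kQualisysToKinect` and `transformPoint` are hypothetical.

```cpp
#include <array>
#include <cstddef>

using Mat4 = std::array<std::array<double, 4>, 4>;

struct Point3 {
    double x, y, z;
};

// Matrix from the table above; translation column in millimeters.
// ASSUMPTION: it maps Qualisys coordinates into the Kinect frame.
constexpr Mat4 kQualisysToKinect = {{
    {0.8954580151977138, 0.5103237861353643, -0.0508805743920705, -4507.420151100658},
    {-0.02123062193180733, -0.04180508717005156, -1.052294108532836, 1019.225762091929},
    {-0.5224123887620519, 0.8888625338840541, -0.08742371986806169, 3469.696756240115},
    {0.0, 0.0, 0.0, 1.0},
}};

// Applies m to p, treated as (x, y, z, 1). Assumes an affine matrix whose
// bottom row is (0, 0, 0, 1), as in the table, so no division by w is needed.
Point3 transformPoint(const Mat4& m, const Point3& p) {
    auto row = [&](std::size_t r) {
        return m[r][0] * p.x + m[r][1] * p.y + m[r][2] * p.z + m[r][3];
    };
    return {row(0), row(1), row(2)};
}
```

Note that the upper-left 3×3 block is not exactly orthonormal (its rows have norms of roughly 1.03 to 1.05), so if the opposite direction is needed, compute a general 4×4 inverse rather than transposing the rotation part.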

VII. Visualisation code

We provide C++ code that parses the groundTruth.txt file, extracts the marker positions, and projects them onto the corresponding RGB images for display.
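Projecting a 3D marker onto an RGB image can be done with the standard pinhole camera model, u = fx·x/z + cx and v = fy·y/z + cy. The sketch below is not the provided viewer's code: it assumes the marker is already expressed in the RGB camera frame and ignores lens distortion. The names `Pixel`, `Intrinsics` and `projectToImage` are hypothetical.

```cpp
#include <optional>

struct Pixel {
    double u, v;
};

// Pinhole intrinsics in pixels. These must come from a calibration of the
// RGB camera; this sketch does not model lens distortion.
struct Intrinsics {
    double fx, fy, cx, cy;
};

// Projects a point (x, y, z) given in the RGB camera frame onto the image:
// u = fx * x / z + cx, v = fy * y / z + cy. The length unit (e.g. mm) cancels.
// Returns std::nullopt for points at or behind the camera plane (z <= 0).
std::optional<Pixel> projectToImage(const Intrinsics& k, double x, double y, double z) {
    if (z <= 0.0) return std::nullopt;
    return Pixel{k.fx * x / z + k.cx, k.fy * y / z + k.cy};
}
```

For example, with fx = fy = 525, cx = 319.5 and cy = 239.5 (values often used as defaults for the first-generation Kinect, not calibration data from this dataset), a point at (1000, -500, 2000) mm projects to pixel (582, 108.25). If the transformed markers are in the depth camera frame instead, an additional depth-to-RGB transform would be needed first.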

Only OpenCV (version > 2) is needed to compile the code. The code has been tested on Ubuntu and macOS, and it uses CMake for cross-platform builds.

To build the visualization program, install OpenCV on your system, then navigate to the visualization source code directory and run the following commands:

- mkdir build

- cd build

- cmake ..

- make

To play a benchmark sequence, run the following command in a terminal: ./skViewer pathToSequenceDirectory

where pathToSequenceDirectory is the path to the directory in which the sequence is saved.

Below is an example output of the skeleton viewer, which displays the marker positions as green circles on the RGB image.