Jannis Klinkenberg, a PhD student from RWTH Aachen, is visiting our team this summer. He will present his work next Tuesday, July 19th.

Title: Locality-Aware Scheduling in OpenMP

Abstract:

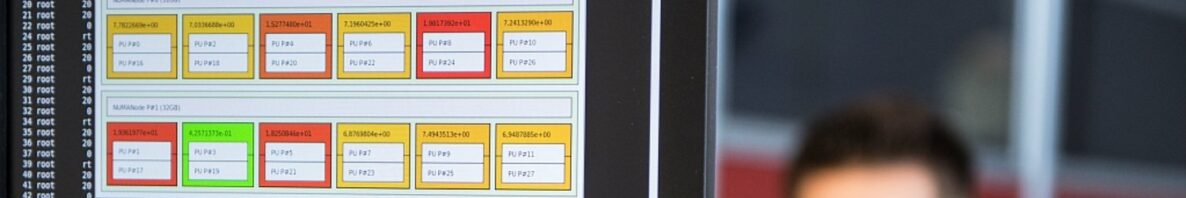

Today’s HPC systems typically consist of shared-memory NUMA machines, each comprising two or more multi-core processor packages with local memory. On such systems, affinity of data to computation is crucial for achieving high performance. OpenMP 3.0 introduced support for task-parallel programs in 2008, and the standard has continued to extend its applicability and expressiveness since then. However, a way to express data affinity for tasks was missing. In this talk, I will present several approaches for task-to-data affinity that combine locality-aware task distribution and task stealing.

Further, HPC systems in the Top500 are becoming increasingly heterogeneous, so that shared-memory machines additionally feature two or more GPUs. Programs usually start on the host and offload computationally intensive parts to GPUs to reduce overall execution time. Consequently, locality between the offloading threads, the data used by the computation, and the GPUs can have a significant impact on performance.

The slides are available here.