C. Gavoille defended his PhD at Inria on January 15th 2024. The thesis focused on performance prediction for ARM architectures.

Dec 04

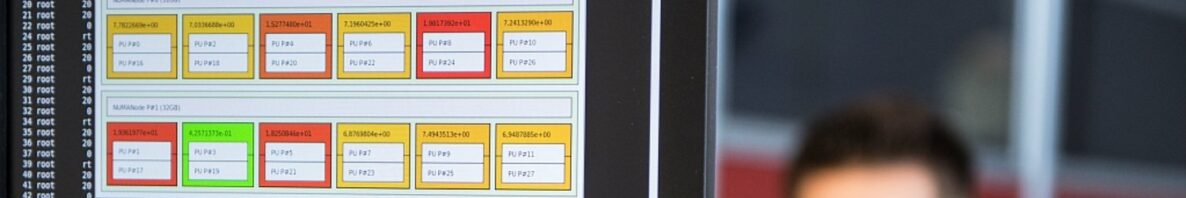

hwloc 2.10.0 published

A new major release hwloc 2.10.0 was published.

v2.10.0 is quite a big release with lots of improvements. Most of them focus on heterogeneous memory (including CXL) with the concept of “Tiers” to group similar kinds of NUMA nodes. But there’s also better support for various CPUs (NVIDIA Grace, LoongArch, AMD, Zhaoxin). And command-line tools have a lot of new features. Netloc is also finally removed since many people seem unaware of its death several years ago.

v2.10 is likely the last major release before the new v3.0 appears (hopefully early 2024). v3.0 will slightly break the API and ABI, remove support for obsolete hardware, etc. Additional v2.10.x stable releases (and even v2.11.x if necessary) will be published in parallel to the v3.0 development and early releases.

Oct 16

Talk by Louis Peyrondet

On October 23rd, 2023, Louis Peyrondet, a second-year student at ENSEIRB-MATMECA, will present the work he did during his internship last summer, under the supervision of Francieli Boito and Luan Teylo.

Title: A decentralized implementation of the IO-Sets method for I/O scheduling in HPC

Abstract: On large HPC infrastructures, the parallel file system (PFS) is shared by all concurrent applications. That causes interference, as I/O-intensive applications may slow down the I/O operations of others. Among the solutions proposed to mitigate this problem, I/O scheduling focuses on deciding, over time, which applications can do I/O operations and how they should share the available I/O bandwidth. In the TADaaM team of the Inria Center of the University of Bordeaux, an I/O scheduling method, called IO-Sets, was recently proposed and provided good results. However, the implementation of such algorithms in practice is complicated because of the need for global control, which typically involves a centralized scheduler that becomes a bottleneck at large scale. The goal of this internship was to create and study a decentralized implementation of IO-Sets inspired by multiple access control protocols from networks. For that, a proof-of-concept was developed in order to first evaluate the merits of this idea and to tune its parameters. This talk will present the results obtained during this internship and perspectives of future work.

May 30

Open PhD position

Dec 01

Call for founding members for the Scotch Consortium

Thirty years, to the day, after the first line of code of Scotch was written, we launch the process of creation of an open consortium around it.

Scotch is now widely used, either within in-house or third-party software, both in academic and industrial contexts. Some of our users, who decided to make Scotch one of the building blocks of their strategic technologies and assets, may require strong assurance about the durability of Scotch and its ability to match their specific current and future needs. Such needs may not all be addressed within the context of the scientific roadmap of the software.

We believe that a consortium, bringing together organizations interested in furthering Scotch, is the best solution to address these issues. Unlike a community, a consortium allows sharing the governance between all of its Members. It also allows every Member to participate in the software roadmap, and to get adequate support. It ensures Scotch stays permanently maintained, and available to the worldwide community under a free/libre software license.

The Scotch Consortium is planned to be created in June 2023, when enough prospective members have declared their interest and made a commitment.

If you are interested in joining the Scotch Consortium, please find below:

– The Preparation document for the Scotch Consortium, which explains the purpose and operations of the planned consortium, including member levels and fees;

– A model of commitment letter.

Contact: francois.pellegrini@inria.fr

Nov 30

B. Goglin talks about CXL on Dec 6th

Brice Goglin will give a talk about the Compute Express Link (CXL) on December 6th.

Title: CXL? What’s this buzzword that was everywhere at SC22?

Abstract: The Compute Express Link is a new interconnect that allows sharing different kinds of memory between CPUs and accelerators with cache coherence. CXL-compatible CPUs and memory expansion devices are coming to the market now. We’ll see how those will change the memory subsystem by allowing memory pooling in disaggregated datacenters and what it may mean for HPC.

Nov 18

P. Swartvagher defends his PhD on November 29th

Philippe Swartvagher will defend his PhD entitled “On the Interactions between HPC Task-based Runtime Systems and Communication Libraries” at LaBRI on November 29th.

The jury will be composed of:

– M. Denis Barthou, Professor – Bordeaux INP (President)

– M. George Bosilca, Assistant Professor – University of Tennessee (Examiner)

– M. Alexandre Denis, Researcher – Inria, University of Bordeaux (Examiner, co-advisor)

– M. Emmanuel Jeannot, Research Director – Inria, University of Bordeaux (Director)

– M. Arnaud Legrand, Research Director – CNRS, University Grenoble Alpes (Reviewer)

– Mme Didem Unat, Associate Professor – Koç University (Reviewer)

Sep 22

Talk by Robin Boezennec

On October 11th, Robin Boezennec, PhD student in the TADaaM team, will present the work he did during his internship.

Title: Stochastic execution time modelling to improve scheduling performance in high performance computing

Abstract:

Current batch schedulers are based on the use of a user-provided execution time estimate. It is known that this estimate is almost always wrong. One direction of research is therefore to try to accurately predict the execution time of tasks, in particular via the analysis of their code. However, this approach has never succeeded. We assume that exact prediction of execution times is too complicated to be profitable, if not impossible. We will therefore consider execution times as random variables, use statistics to qualify them, and incorporate this knowledge into the batch scheduler. This talk will present the first results obtained during a pre-thesis internship.

Aug 29

Talk by Luan Teylo

On September 2nd, Luan Teylo, post-doc in our team, will present his recent work, described in a paper accepted at Cluster 2022.

Title: The role of storage target allocation in applications’ I/O performance with BeeGFS

Abstract:

Parallel file systems are at the core of HPC I/O infrastructures. Those systems minimize the I/O time of applications by separating files into fixed-size chunks and distributing them across multiple storage targets. Therefore, the I/O performance experienced with a PFS is directly linked to the capacity of retrieving these chunks in parallel. In this work, we conduct an in-depth evaluation of the impact of the stripe count (the number of targets used for striping) on the write performance of BeeGFS, one of the most popular parallel file systems today. We consider different network configurations and show the fundamental role played by this parameter, in addition to the number of compute nodes, processes and storage targets. Through a rigorous experimental evaluation, we directly contradict conclusions from related work. Notably, we show that sharing I/O targets does not lead to performance degradation and that applications should use as many storage targets as possible. Our recommendations have the potential to significantly improve the overall write performance of BeeGFS deployments, and also provide valuable information for future work on storage target allocation and stripe count tuning.
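To illustrate what striping means in the abstract above, here is a minimal sketch in Python. It assumes simple round-robin placement of fixed-size chunks over the targets of a file, which is a simplification for illustration and not a description of BeeGFS internals. The function names `chunk_to_target` and `bytes_per_target` are hypothetical and do not come from the paper or from BeeGFS.

```python
def chunk_to_target(offset, chunk_size, stripe_count):
    """Index (0..stripe_count-1) of the target holding the byte at `offset`.

    Assumption: chunks are placed round-robin over the targets.
    """
    if chunk_size <= 0 or stripe_count <= 0:
        raise ValueError("chunk_size and stripe_count must be positive")
    if offset < 0:
        raise ValueError("offset must be non-negative")
    return (offset // chunk_size) % stripe_count


def bytes_per_target(file_size, chunk_size, stripe_count):
    """Number of bytes of a file that land on each of its targets."""
    totals = [0] * stripe_count
    offset = 0
    while offset < file_size:
        # The last chunk may be shorter than chunk_size.
        length = min(chunk_size, file_size - offset)
        totals[chunk_to_target(offset, chunk_size, stripe_count)] += length
        offset += length
    return totals


if __name__ == "__main__":
    # A 10-byte file with 4-byte chunks striped over 2 targets.
    print(bytes_per_target(10, 4, 2))
```

With a larger stripe count, the same file is spread over more targets, so more chunks can in principle be written or read in parallel. This is the parameter whose effect on write performance the talk evaluates.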