Yutong Ban, Xiaofei Li, Xavier Alameda-Pineda, Laurent Girin and Radu Horaud

IEEE International Conference on Acoustics, Speech, and Signal Processing, May 2018, Calgary, Canada

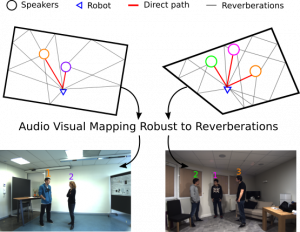

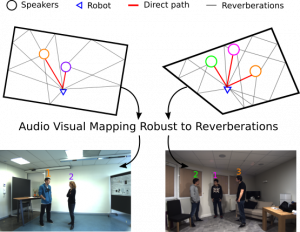

Abstract. Multiple-speaker tracking is a crucial task for many applications. In real-world scenarios, the complementarity between auditory and visual data makes it possible to track people outside the system's visual field of view. However, such systems must be robust to changes in the acoustic environment. We investigate how to jointly exploit state-of-the-art auditory localization techniques and recent advances in probabilistic inference for multi-speaker tracking. Our experiments demonstrate that the performance of the proposed system is not affected by changes in the acoustic conditions.


Selected results on the AVDIAR dataset. The blue regions are outside the visual field of view.

Video 1P

Video 2P

Acknowledgement

Funding from the European Union FP7 ERC Advanced Grant VHIA (#340113) is gratefully acknowledged.
