A variance modeling framework based on variational autoencoders for speech enhancement

Simon Leglaive, Laurent Girin, Radu Horaud

IEEE International Workshop on Machine Learning for Signal Processing (MLSP), Aalborg, Denmark, September 2018

Article | Supporting document | Bibtex | Slides | Code | Audio examples | Acknowledgement

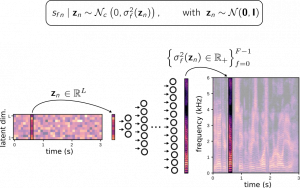

| In this paper we address the problem of enhancing speech signals in noisy mixtures using a source separation approach. We explore the use of neural networks as an alternative to a popular speech variance model based on supervised non-negative matrix factorization (NMF). More precisely, we use a variational autoencoder as a speaker-independent supervised generative speech model, highlighting the conceptual similarities that this approach shares with its NMF-based counterpart. In order to be free of generalization issues regarding the noisy recording environments, we follow the approach of having a supervised model only for the target speech signal, the noise model being based on unsupervised NMF. We develop a Monte Carlo expectation-maximization algorithm for inferring the latent variables in the variational autoencoder and estimating the unsupervised model parameters. Experiments show that the proposed method outperforms a semi-supervised NMF baseline and a state-of-the-art fully supervised deep learning approach. |

|

The proposed method is compared with the two following reference methods:

- A semi-supervised baseline using non-negative matrix factorization (denoted by “NMF baseline”);

- The fully supervised deep learning approach proposed in [1] (denoted by “Fully supervised deep learning approach”). We used the code provided by the authors at https://github.com/yongxuUSTC/sednn

The rank of the speech model for the NMF baseline and the latent-space dimension for the proposed method are both equal to 64.

Noisy speech signals were created at a 0 dB signal-to-noise ratio.

[1] Y. Xu et al., “A regression approach to speech enhancement based on deep neural networks”, IEEE Transactions on Audio, Speech and Language Processing, 23(1), 7-19. 2015.

| Environment | Noisy speech | Clean speech | Enhanced speech | ||

| Semi-supervised NMF baseline | Fully supervised deep learning approach | Proposed method | |||

| Living room | |||||

| University restaurant | |||||

| Kitchen | |||||

| Terrace of a cafe | |||||

| Meeting | |||||

| Subway | |||||

| Subway station | |||||

| City park | |||||

| River | |||||

| Office cafeteria | |||||

| Town square | |||||

| Traffic intersection | |||||

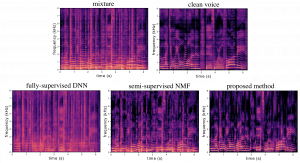

Bonus experiment on music

In this experiment, we test the ability of all the previously compared methods to separate singing voice from the accompaniment in a piece of music. The accompaniment is considered as noise. Note that all models have been trained on speech and not singing voice.

The fully supervised deep learning approach [1] performs the worst because music is not really one of the noise types that the neural network has seen during the training phase. This example justifies the interest of semi-supervised methods and shows interesting generalization properties of the proposed method.

| Mixture: |

| Original | Semi-supervised NMF baseline | Fully supervised deep learning approach | Proposed method | |

| Singing voice | ||||

| Accompaniment | Not provided by the algorithm |

This work was supported by the ERC Advanced Grant VHIA #340113.