Publications | Videos | The NAO Robot

|

|

Abstract. In this research we address the problem of audio-visual speaker detection. We introduce an online system working on the humanoid robot NAO. The scene is perceived with two cameras and two microphones. A multimodal Gaussian Mixture Model fuses the information extracted from the auditory and visual sensors. The system is implemented on top of a platform-independent middleware and it is able to process the information online (17 visual frames per second). A detailed method description and the system implementation are provided, with special emphasis on the online processing issues that must be addressed, and the proposed solutions. Experimental validation is done over five different scenarios, with no special lighting, nor special acoustic conditions, leading to good results.

Publications

1.Xavier Alameda-Pineda, Vasil Khalidov, Radu Horaud, and Florence Forbes. Finding Audio-Visual Events in Informal Social Gatherings. ACM/IEEE International Conference on Multimodal Interaction (ICMI’11), Alicante, Spain, 2011, 247-254. Outstanding Paper Award.

2. Jordi Sanchez-Riera, Xavier Alameda-Pineda, Johannes Wienke,Antoine Deleforge, Soraya Arias, Jan Cech, Sebastian Wrede and Radu Horaud. Online Multimodal Speaker Detection for Humanoid Robots. IEEE International Conference on Humanoid Robotics (Humanoids’12), Osaka, Japan. 2012.

3. Jan Cech, Ravi Mittal, Antoine Deleforge, Jordi Sanchez-Riera, Xavier Alameda-Pineda, Radu Horaud. Active-Speaker Detection and Localization with Microphones and Cameras Embedded into a Robotic Head. IEEE International Conference on Humanoid Robots (Humanoids’13), Atlanta, Georgia, USA, 2013.

3. Jan Cech, Ravi Mittal, Antoine Deleforge, Jordi Sanchez-Riera, Xavier Alameda-Pineda, Radu Horaud. Active-Speaker Detection and Localization with Microphones and Cameras Embedded into a Robotic Head. IEEE International Conference on Humanoid Robots (Humanoids’13), Atlanta, Georgia, USA, 2013.

Xavier Alameda-Pineda ; Radu Horaud. Vision-Guided Robot Hearing. International Journal of Robotics Research, SAGE, 2014

Xavier Alameda-Pineda ; Radu Horaud. Vision-Guided Robot Hearing. International Journal of Robotics Research, SAGE, 2014

Videos

This videos illustrates the basic methodology that was developed in [1] and that associates visual and auditory events using a mixture model. In practice, the method is able to dynamically detect the number of events and to localize them.

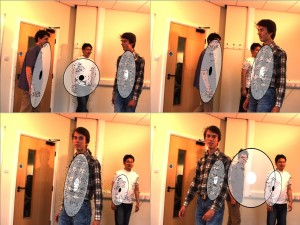

This video shows the audiovisual speaker localization principle based on 3D face localization using a stereoscopic camera pair and time difference of arrival (TDOA) between two microphones [2]. Left: the scene viewed by the robot; the black circle indicates the detected speaking face. Right: Top view of the scene where the circles correspond to the head positions. Both the videos and the audio track are those recorded with the robot’s cameras and microphones.

This video shows an active and interactive behavior of the robot based on face detection and recognition, and sound localization and word recognition [3]. The robot selects one active speaker and synthesize appropriate behavior.