Variational Bayesian Inference for Audio-Visual Tracking of Multiple Speakers

Yutong Ban, Xavier Alameda-Pineda, Laurent Girin, and Radu Horaud

IEEE Transactions on Pattern Analysis and Machine Intelligence (Early access)

arXiv (full paper with appendixes)

Selected Results on the AVDIAR Dataset

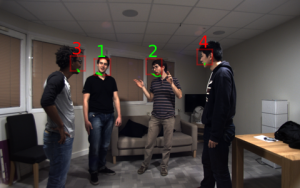

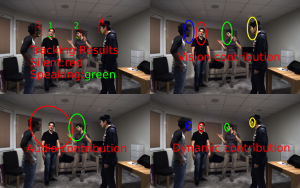

Legend: Top left: tracking results. Each bounding box represents an estimated speaker position and each green line a speaker trajectory. The index displayed with a box denotes the speaker identity: a red index means that the speaker is estimated to be silent, and a green index means that the speaker is estimated to be speaking. Top right: vision contribution to the tracking. Each ellipse represents the covariance corresponding to the vision contribution; different colors represent different speakers. Bottom left: audio contribution to the tracking. Bottom right: contribution of the state dynamics to the tracking.

Two settings are used. Full camera field of view (FFOV): the full camera view is used. Partial camera field of view (PFOV): on both sides of the image, visual information is not used and only audio information is available.
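The ellipses in the top-right panel visualize a 2×2 covariance. As a minimal sketch of how such an ellipse can be derived from a covariance matrix, the snippet below uses the closed-form eigendecomposition of a symmetric 2×2 matrix: the semi-axes are proportional to the square roots of the eigenvalues and the orientation follows the principal eigenvector. The page does not state how many standard deviations the ellipses span or in which coordinate frame the covariance is expressed, so the function names and the `n_std` parameter are hypothetical; this is not the authors' plotting code.

```python
import math


def covariance_ellipse(cov, n_std=2.0):
    """Return (semi_major, semi_minor, angle_rad) of the n_std ellipse of a 2x2 covariance.

    Hypothetical helper. cov is [[sxx, sxy], [sxy, syy]] (symmetric, positive semi-definite).
    The angle is measured from the x-axis of whatever frame cov is expressed in.
    """
    sxx, sxy = cov[0][0], cov[0][1]
    syy = cov[1][1]
    # Closed-form eigenvalues of a symmetric 2x2 matrix: mean +/- radius.
    mean = 0.5 * (sxx + syy)
    diff = 0.5 * (sxx - syy)
    radius = math.hypot(diff, sxy)
    lam_major = mean + radius
    lam_minor = max(mean - radius, 0.0)  # clamp tiny negative round-off
    # Direction of the eigenvector of lam_major: tan(2*theta) = 2*sxy / (sxx - syy).
    angle = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    return n_std * math.sqrt(lam_major), n_std * math.sqrt(lam_minor), angle


def ellipse_points(center, cov, n_std=2.0, num=64):
    """Sample num points on the ellipse boundary, e.g. to draw it over a video frame (hypothetical)."""
    a, b, theta = covariance_ellipse(cov, n_std)
    cx, cy = center
    c, s = math.cos(theta), math.sin(theta)
    pts = []
    for i in range(num):
        t = 2.0 * math.pi * i / num
        # Point on the axis-aligned ellipse, then rotated by theta and shifted to the center.
        x, y = a * math.cos(t), b * math.sin(t)
        pts.append((cx + c * x - s * y, cy + s * x + c * y))
    return pts


if __name__ == "__main__":
    # Correlated covariance: the major axis lies along the diagonal (45 degrees).
    a, b, theta = covariance_ellipse([[1.0, 0.5], [0.5, 1.0]], n_std=1.0)
    print(a, b, math.degrees(theta))
```

A larger `n_std` only scales both semi-axes; the orientation depends on the off-diagonal term, so an axis-aligned ellipse indicates uncorrelated horizontal and vertical uncertainty.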

Because of possible video-codec incompatibilities, we suggest using Chrome to view the videos.

Seq08-3P-S1M1 (FFOV)

Seq13-4P-S2M1 (FFOV)

Seq28-3P-S1M1 (FFOV)

Seq32-4P-S1M1 (FFOV)

Seq03-1P-S0M1 (PFOV)

Seq22-1P-S0M1 (PFOV)

Seq19-2P-S1M1 (PFOV)

Seq20-2P-S1M1 (PFOV)

Acknowledgements. Funding from the European Union FP7 ERC Advanced Grant VHIA (#340113) is gratefully acknowledged.