Compressive sensing was historically developed for, and successfully applied to, sparse finite-dimensional signals, making it possible to recover such signals from far fewer measurements than the ambient dimension. As the theory matured, interest grew in applying these paradigms to more general classes of signals, such as low-rank matrices, elements of a general union of subspaces, or even functions. All these generalizations rest on the same premise: given the prior knowledge that the data lives in (or near) a subset much smaller than the ambient space, it is possible to compress it non-adaptively into a reduced representation that still retains its essential information, and in particular to reconstruct it with controlled precision.

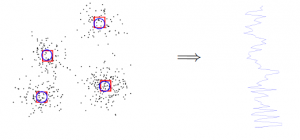

A particularly interesting generalization of compressive sensing is the following. A data collection is classically assumed to be drawn from an underlying probability distribution, and this distribution must typically have low intrinsic complexity for machine learning methods to accurately discover the underlying structure of the data. This low-complexity assumption makes it possible to apply compressive sensing paradigms to distributions of data. Random measurement operators such as those used in discrete compressed sensing can be defined for such distributions, and the measurements can be computed efficiently from data as empirical moments of the underlying distribution. The compressed representation of the probability distribution can then be used to perform machine learning tasks, such as fitting a parametric model to the data. The benefit of this compressive learning framework is a gain in memory and privacy compared to classical methods that work directly on the data.
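To make the idea of an empirical sketch concrete, here is a minimal illustration in Python. It assumes one particular choice of random measurement operator, namely samples of the empirical characteristic function at random Gaussian frequencies; the text above does not fix the operator, so the function names, the frequency distribution, and the sketch size `m` are all hypothetical choices made for this example.

```python
import numpy as np

def draw_frequencies(dim, m, scale=1.0, seed=0):
    """Draw m random frequency vectors (assumed Gaussian here), one per column."""
    rng = np.random.default_rng(seed)
    return rng.normal(scale=scale, size=(dim, m))

def empirical_sketch(X, W):
    """Average the generalized moments exp(i * w^T x) over the n rows of X.

    X has shape (n, dim), W has shape (dim, m); the result has shape (m,)
    and its size does not depend on the number of data points n.
    """
    return np.exp(1j * (X @ W)).mean(axis=0)

def merge_sketches(z_a, n_a, z_b, n_b):
    """Combine the sketches of two disjoint data chunks of sizes n_a and n_b."""
    return (n_a * z_a + n_b * z_b) / (n_a + n_b)

# Toy data: 10,000 points in dimension 2, compressed to 50 complex numbers.
rng = np.random.default_rng(1)
X = rng.normal(size=(10_000, 2))
W = draw_frequencies(dim=2, m=50)
z = empirical_sketch(X, W)

# The same sketch can be obtained chunk by chunk, without holding all data.
z_chunked = merge_sketches(
    empirical_sketch(X[:4_000], W), 4_000,
    empirical_sketch(X[4_000:], W), 6_000,
)
```

Because the sketch is an average, it can be accumulated in one pass over the data or merged across chunks, which is where the memory gain comes from: only the `m` complex numbers need to be stored once the data has been read.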

We exemplify our framework with the sketched estimation of Gaussian Mixture Models (GMMs). We experimentally show that our approach yields results comparable to the classical Expectation-Maximization (EM) technique while requiring significantly less memory and fewer computations when the number of database elements is large. We report large-scale experiments in speaker verification, where our approach makes it possible to fully exploit a corpus of 1000 hours of speech signal to learn a universal background model at scales computationally inaccessible to EM.
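As an illustration of what fitting a GMM to a sketch can involve, the snippet below evaluates the sketch of a diagonal-covariance GMM in closed form and compares it to an empirical sketch. It assumes the sketch consists of characteristic-function samples at random frequencies, for which a Gaussian component with mean $\mu$ and covariance $\Sigma$ contributes $\exp(i\,w^\top\mu - \tfrac{1}{2} w^\top \Sigma w)$. The names `gmm_sketch` and `sketch_mismatch` are hypothetical, and the actual estimation algorithm, which would search over the GMM parameters, is not shown.

```python
import numpy as np

def gmm_sketch(weights, means, variances, W):
    """Closed-form sketch of a GMM with diagonal covariances.

    weights: (K,), means: (K, d), variances: (K, d), W: (d, m).
    """
    phase = means @ W                 # (K, m): w^T mu_k
    damping = variances @ (W ** 2)    # (K, m): w^T Sigma_k w for diagonal Sigma_k
    return weights @ np.exp(1j * phase - 0.5 * damping)

def sketch_mismatch(z_hat, weights, means, variances, W):
    """Euclidean distance between an empirical sketch and a model sketch."""
    return np.linalg.norm(z_hat - gmm_sketch(weights, means, variances, W))

# Toy two-component GMM in dimension 2 (all values chosen for illustration).
rng = np.random.default_rng(0)
weights = np.array([0.3, 0.7])
means = np.array([[-2.0, 0.0], [2.0, 1.0]])
variances = np.array([[1.0, 0.5], [0.5, 1.0]])

n = 20_000
labels = rng.choice(2, size=n, p=weights)
X = means[labels] + np.sqrt(variances[labels]) * rng.normal(size=(n, 2))

W = rng.normal(size=(2, 40))                 # assumed Gaussian frequencies
z_hat = np.exp(1j * (X @ W)).mean(axis=0)    # empirical sketch of the data

good = sketch_mismatch(z_hat, weights, means, variances, W)
bad = sketch_mismatch(z_hat, weights, means + 1.0, variances, W)
```

In this toy setting, `good` (the mismatch at the true parameters) should come out much smaller than `bad` (the mismatch with shifted means), which is the property a sketch-based estimator would exploit: the data is only touched once, to compute `z_hat`.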

For further details, please refer to the following publications and this talk: