Our activities within the Nokia-Inria joint lab:

Inria challenge LearnNet (Learning Networks) (Jan. 2024 – Dec. 2027)

- Nokia teams: AIRL and NSSR.

- Participants: G. Neglia, A. Nasser.

- Other Inria participating teams: MARACAS, COATI, EPIONE, PREMEDICAL, STATIFY, THOTH, TRIBE.

While machine learning is revolutionizing entire sectors of the digital economy and scientific research, its robust deployment in digital infrastructures raises many questions. The Learning Networks (LearnNet) challenge explores new avenues of research at the intersection of networking and learning. It has two complementary objectives: rethinking the design of network protocols to serve machine learning applications, and exploring how learning can improve network management. LearnNet thus studies the growing entanglement between large-scale learning and network design.

Inria challenge SmartNet (AI Methods for Smart Network Management) (Jan. 2024 – Dec. 2027)

- Nokia teams: MLS and NSSR.

- Participants: S. Alouf, A. Sardi.

- Other Inria participating teams: ERMINE, COATI, SPADES, STACK.

The advent of virtualization, combined with the power of AI, has brought new opportunities in network management. To address the challenges that come with this paradigm shift, the SmartNet project explores the potential of AI methods to enable smart network management. The project focuses on two key areas: slice provisioning and causal analysis of network malfunctions. It aims to develop methods that cope with the growing complexity of networks, particularly in multi-domain scenarios.

ADR “Distributed Learning and Control for Network Analysis” (October 2017 – September 2022)

- Participants: Eitan Altman, Konstantin Avrachenkov, Mandar Datar, Maximilien Dreveton, Alain Jean-Marie, Omar Mohamed.

- Collaborator: Gérard Burnside.

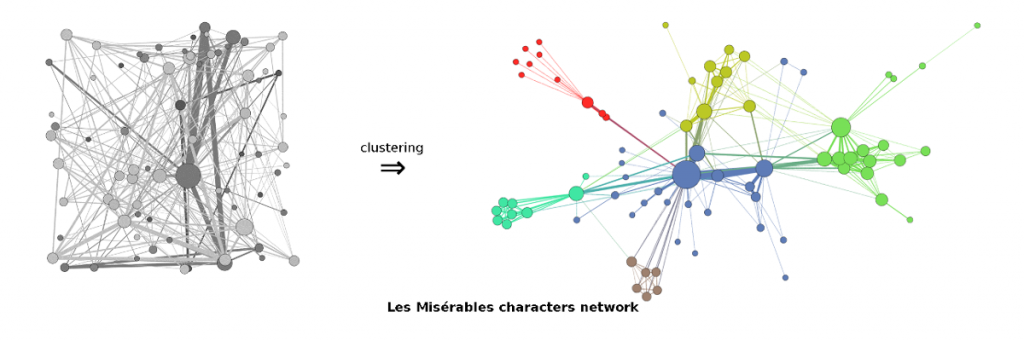

Over the last few years, research in computer science has shifted focus to machine learning methods for the analysis of increasingly large amounts of user data. While the research community has sought to optimize these methods for sparse and high-dimensional data, new problems have emerged more recently, particularly from a networking perspective, which had so far remained on the periphery. The technical program of this ADR consists of three parts: distributed machine learning, multiobjective optimisation as a lexicographic problem, and use cases and applications. We address the challenges related to the first part by developing distributed optimization tools that reduce communication overhead, improve the rate of convergence, and scale well. Graph-theoretic tools, including spectral analysis, graph partitioning and clustering, will be developed. Further, stochastic approximation methods and D-iterations, or combinations of the two, will be applied in designing fast online unsupervised, supervised and semi-supervised learning methods.

ADR “Rethinking the Network: Virtualizing Network Functions, from Middleboxes to Applications” (October 2017 – September 2022)

- Participants: Giovanni Neglia (coordinator), Sara Alouf, Gabriele Castellano.

- Collaborators: Fabio Pianese (coordinator), Tianzhu Zhang.

A growing number of network infrastructures are currently being considered for software-based replacement: these range from fixed and wireless access functions to carrier-grade middleboxes and server functionalities. On the one hand, the performance requirements of such applications call for an increased level of software optimization and hardware acceleration. On the other hand, customization and modularity at all layers of the protocol stack are required to support such a wide range of functions. Within this scope, the ADR focuses on two specific research axes: (1) the design, implementation and evaluation of a modular NFV architecture, and (2) the modelling and management of applications as virtualized network functions. Our interest is in low-latency machine learning prediction services, and in particular in how the quality of the predictions can be traded off against latency.

ADR “Network Science” (June 2013 – March 2017)

- Participants: Konstantin Avrachenkov (coordinator), Guillaume Huard, Jithin Kazhuthuveettil Sreedharan, Giovanni Neglia.

- Collaborators: Philippe Jacquet (coordinator), Alonso Silva.

“Network Science” aims at understanding the structural properties and the dynamics of various kinds of large-scale, possibly dynamic, networks in telecommunication (e.g., the Internet, the web graph, peer-to-peer networks), social science (e.g., communities of interest, advertisement, recommendation systems), bibliometrics (e.g., citations, co-authors), biology (e.g., spread of an epidemic, protein-protein interactions), and physics. The complex networks encountered in these areas share common properties such as power-law degree distributions, small average distances, and community structure. Many general questions and applications (e.g., community detection, epidemic spreading, search, anomaly detection) are common to several disciplines and are analyzed in this ADR. In particular, within the framework of this ADR we were interested in efficient network sampling.