Philippe Swartvagher will work on interactions between high performance communication libraries and task-based runtime systems.

Sep 06

NewMadeleine version 2019-09-06 released

A new stable release of NewMadeleine was published. It contains mostly bug fixes, a new one-sided interface, non-blocking collectives, and optimizations of blocking collectives. NewMadeleine is included in the pm2 package.

Sep 02

Francieli Zanon Boito joins TADaaM as an assistant professor

Francieli Zanon Boito is now an assistant professor at the University of Bordeaux. Her research interests include storage and parallel I/O for high performance computing and big data.

Jul 08

PADAL Workshop in Bordeaux from Sept 9th to 11th

We are organizing the Fifth Workshop on Programming Abstractions for Data Locality (PADAL’19) at Inria Bordeaux from September 9th to 11th.

Jul 03

Talk by P. Swartvagher on collective communication on July 9th

Philippe Swartvagher will present the results of his internship on dynamic collective communications for StarPU/NewMadeleine.

Jun 02

Talk by Brice Goglin on Cache Partitioning on June 11th

Brice will present his article published at Cluster 2018 with ENS Lyon, UTK and Georgia Tech.

Title: Co-scheduling HPC workloads on cache-partitioned CMP platforms

Co-scheduling techniques are used to improve the throughput of applications on chip multiprocessors (CMP), but sharing resources often generates critical interferences. We focus on the interferences in the last level of cache (LLC) and use the Cache Allocation Technology (CAT) recently provided by Intel to partition the LLC and give each co-scheduled application its own cache area.

We consider m iterative HPC applications running concurrently and answer the following questions: (i) how to precisely model the behavior of these applications on the cache partitioned platform? and (ii) how many cores and cache fractions should be assigned to each application to maximize the platform efficiency? Here, platform efficiency is defined as maximizing the performance either globally, or as guaranteeing a fixed ratio of iterations per second for each application.

Through extensive experiments using CAT, we demonstrate the impact of cache partitioning when multiple HPC applications are co-scheduled onto CMP platforms.

May 22

Talk by Emmanuel Jeannot on May 29th

Emmanuel Jeannot will present an empirical study he conducted on process affinity.

Title: Process Affinity, Metrics and Impact on Performance: an Empirical Study

Abstract:

Process placement, also called topology mapping, is a well-known strategy to improve parallel program execution by reducing the communication cost between processes. It requires two inputs: the topology of the target machine and a measure of the affinity between processes. In the literature, the dominant affinity measure is the communication matrix that describes the amount of communication between processes. The goal of this paper is to study the accuracy of the communication matrix as a measure of affinity. We have done an extensive set of tests with two fat-tree machines and a 3d-torus machine to evaluate several hypotheses that are often made in the literature and to discuss their validity. First, we check the correlation between algorithmic metrics and the performance of the application. Then, we check whether a good generic process placement algorithm never degrades performance. And finally, we see whether the structure of the communication matrix can be used to predict gain.
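To make the notion of an algorithmic metric concrete, here is a minimal sketch of one commonly used placement metric, hop-bytes, computed from a communication matrix. It is only an illustration and not necessarily one of the metrics evaluated in the study: the function names, the toy ring topology and the example matrix are all assumptions introduced here.

```python
from itertools import combinations


def hop_bytes(comm, placement, distance):
    """Sum, over all process pairs, of exchanged volume times hop distance.

    comm[i][j] is the volume sent from process i to process j,
    placement[i] is the node hosting process i,
    distance(a, b) is the number of hops between nodes a and b.
    """
    total = 0
    for i, j in combinations(range(len(comm)), 2):
        volume = comm[i][j] + comm[j][i]
        total += volume * distance(placement[i], placement[j])
    return total


def ring_distance(n_nodes):
    """Hop distance on a ring of n_nodes nodes (toy topology, an assumption)."""
    def distance(a, b):
        d = abs(a - b)
        return min(d, n_nodes - d)
    return distance


# Hypothetical communication matrix: processes 0-1 and 2-3 talk heavily.
comm = [
    [0, 10, 0, 1],
    [10, 0, 1, 0],
    [0, 1, 0, 10],
    [1, 0, 10, 0],
]
dist = ring_distance(4)

identity = [0, 1, 2, 3]    # heavy communicators on neighbouring nodes
scattered = [0, 2, 1, 3]   # heavy communicators two hops apart

print(hop_bytes(comm, identity, dist))   # 44
print(hop_bytes(comm, scattered, dist))  # 84
```

A lower value means less traffic travels over long paths. Whether such a metric actually correlates with application performance is precisely the kind of hypothesis the talk examines.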

May 22

Scotch 6.0.7 released!

Scotch, the software package for graph/mesh/hypergraph partitioning, graph clustering, and sparse matrix ordering, has a new 6.0.7 release. It extends the target architecture API and adds MeTiS v5 compatibility.

https://gforge.inria.fr/frs/?group_id=248

Apr 30

Talk by Alexandre Denis (TADaaM) on May 2nd

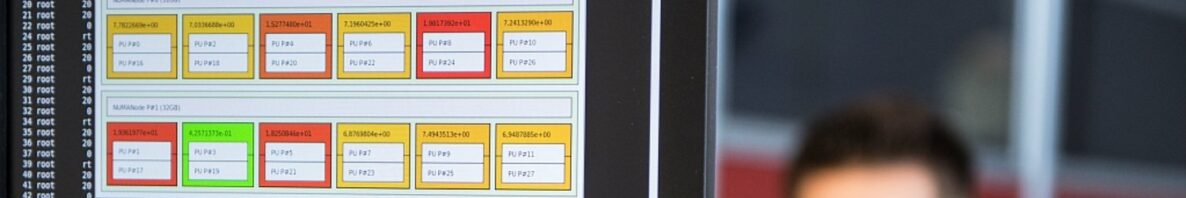

On May 2nd, Alexandre Denis, Inria researcher in the TADaaM team, will present his recent work on the scalability of the NewMadeleine communication library.

The talk will take place at 2pm in Room Grace Hopper 2.

———————————————————————————————————————

Title: Scalability of the NewMadeleine Communication Library for Large Numbers of MPI Point-to-Point Requests

Abstract: New kinds of applications with lots of threads or irregular communication patterns, which rely a lot on point-to-point MPI communications, have emerged. They stress the MPI library with potentially many simultaneous MPI send and receive requests. To deal with large numbers of simultaneous requests, the bottleneck lies in two main mechanisms: the tag-matching (the algorithm that matches an incoming packet with a posted receive request), and the progression engine. In this paper, we propose algorithms and implementations that overcome these issues so as to scale up to thousands of requests if needed. In particular, our algorithms are able to perform constant-time tag-matching even with any-source and any-tag support. We have implemented these mechanisms in our NewMadeleine communication library. Through micro-benchmarks and computation kernel benchmarks, we demonstrate that our MPI library exhibits better performance than state-of-the-art MPI implementations in cases with many simultaneous requests.
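As an illustration of what tag-matching with wildcard support involves, the sketch below shows one possible hash-based scheme. It is not the algorithm from the paper or the NewMadeleine implementation: the `TagMatcher` class, its method names and the `ANY` wildcard are hypothetical, and unexpected messages (packets arriving before any matching receive is posted) are deliberately left out. Each posted receive is stored in a FIFO keyed by its (source, tag) pattern, and an incoming packet inspects at most four queues, picking the oldest request by posting order.

```python
from collections import defaultdict, deque
from itertools import count

ANY = None  # hypothetical wildcard, standing in for any-source / any-tag


class TagMatcher:
    """Toy matcher: four hash lookups per incoming packet (average case)."""

    def __init__(self):
        # (source, tag) pattern -> FIFO of (posting sequence number, request)
        self._posted = defaultdict(deque)
        self._seq = count()

    def post_recv(self, source, tag, request):
        """Register a receive request; source and/or tag may be ANY."""
        self._posted[(source, tag)].append((next(self._seq), request))

    def match(self, source, tag):
        """Return the oldest posted receive matching the packet, or None."""
        candidates = [(source, tag), (source, ANY), (ANY, tag), (ANY, ANY)]
        best_key, best_seq = None, None
        for key in candidates:
            queue = self._posted.get(key)
            if queue and (best_seq is None or queue[0][0] < best_seq):
                best_key, best_seq = key, queue[0][0]
        if best_key is None:
            return None
        _, request = self._posted[best_key].popleft()
        return request


matcher = TagMatcher()
matcher.post_recv(ANY, 7, "r0")  # any source, tag 7
matcher.post_recv(1, 7, "r1")    # source 1, tag 7
matcher.post_recv(1, ANY, "r2")  # source 1, any tag

print(matcher.match(1, 7))  # r0: the oldest matching request wins
print(matcher.match(1, 7))  # r1
print(matcher.match(1, 7))  # r2
print(matcher.match(1, 7))  # None
```

The sequence numbers preserve posting order across the four queues, so a wildcard receive posted earlier is served before a more specific one posted later.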

Apr 11

Talk by Valentin Honoré on April 11th

Valentin Honoré will present his recent work on in situ scheduling. This work was done with G. Aupy, B. Goglin and B. Raffin (Inria Grenoble).

Title: Modeling High-throughput Applications for in situ Analytics

Abstract: With the goal of performing exascale computing, the importance of I/O management becomes more and more critical to maintain system performance. While the computing capacities of machines are getting higher, the I/O capabilities of systems do not increase as fast. We are able to generate more data but unable to manage them efficiently due to variability of I/O performance. Limiting the requests to the Parallel File System (PFS) becomes necessary. To address this issue, new strategies are being developed such as online in situ analysis. The idea is to overcome the limitations of basic post-mortem data analysis where the data have to be stored on PFS first and processed later. There are several software solutions that allow users to specifically dedicate nodes for analysis of data and distribute the computation tasks over different sets of nodes. Thus far, they rely on a manual resource partitioning and allocation by the user of tasks (simulations, analysis).

In this work, we propose a memory-constrained model for in situ analysis. We use this model to provide different scheduling policies that determine the number of resources that should be dedicated to analysis functions and that schedule these functions efficiently. We evaluate them and show the importance of considering memory constraints in the model. Finally, we discuss the different challenges that have to be addressed in order to build automatic tools for in situ analytics.