Thesis title:

Real-time recommendation for sector-specific social networks

Thesis supervisor: Didier Parigot

http://www-sop.inria.fr/members/Didier.Parigot/

Collaboration with the company Beepeers (beepeers.com), which builds a collaborative platform of social tools for businesses.

Location: Inria Sophia-Antipolis

Funding: University doctoral grant

Introduction

Over the past few years, the management of large volumes of data (big data) and of open data has become increasingly important with the rise of social networks and the Internet. By exploiting or analyzing the data being handled, it is possible to extract new, relevant information that makes new services or tools possible. As part of a collaboration between our Zenith project-team and Beepeers, a very young startup that markets a platform for developing sector-specific social networks, we propose this research topic in order to enrich the platform with new advanced services based on extracting or analyzing the data produced by these enterprise social networks.

Thesis objective

The objective of the thesis is to propose and combine various data analysis techniques (algorithms) in order to provide advanced services for the Beepeers platform. The platform already offers a rich set of features and services that produce a large amount of information and data, which will form the initial dataset for this research.

Within this well-targeted application setting, the PhD student will propose information extraction algorithms through an original combination of the following techniques:

- analysis of user behavior (usage analysis);

- user profile extraction;

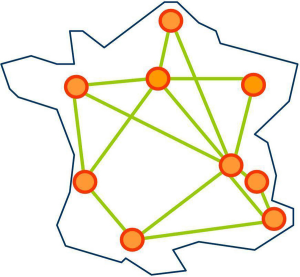

- propagation or diffusion of information across the network, or between different social networks connected to the Beepeers platform;

- recommendation of people, services, or events based on the ratings given by users of the network (a feature already available in the Beepeers platform);

- extraction through continuous (persistent) database queries over the open data sites that are available and relevant to the underlying sector-specific network.
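As a purely illustrative sketch of the rating-based recommendation mentioned in the list above, the following Python snippet walks a small user/item rating graph: from a user to the items they rated highly, then to other users who liked the same items, then to what those users liked. The data, the `recommend` function, and the `min_score` threshold are hypothetical and do not describe the Beepeers platform or its API.

```python
from collections import defaultdict

# Hypothetical ratings: user -> {item: score on a 1-5 scale}.
RATINGS = {
    "alice": {"event_a": 5, "service_b": 4},
    "bob": {"event_a": 4, "event_c": 5},
    "carol": {"service_b": 5, "event_c": 4, "event_d": 5},
    "dave": {"event_e": 5},
}


def recommend(ratings, user, min_score=4, top_n=3):
    """Rank unseen items liked by users who share liked items with `user`."""
    own = ratings.get(user, {})
    liked = {item for item, score in own.items() if score >= min_score}
    seen = set(own)
    scores = defaultdict(int)
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        other_liked = {i for i, s in other_ratings.items() if s >= min_score}
        # Weight each neighbor by the number of liked items in common.
        overlap = len(liked & other_liked)
        if overlap == 0:
            continue
        for item in other_liked - seen:
            scores[item] += overlap
    # Highest score first; ties broken alphabetically for determinism.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in ranked[:top_n]]


if __name__ == "__main__":
    print(recommend(RATINGS, "alice"))
```

A real service would need to combine such a traversal with the other techniques listed (usage analysis, profile extraction, continuous queries) and run over a distributed store rather than an in-memory dictionary.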

In addition, an original implementation is expected, based on a decentralized service-oriented architecture, so that the proposed solutions can scale and the advanced services can be deployed dynamically on demand.

Context of the collaboration

This collaboration is already the subject of a strong Inria-SME partnership through the creation and launch this year of a joint laboratory (I-Lab) named Triton, whose R&D program is to design an innovative architecture for scaling the Beepeers platform. This R&D program will draw on our expertise in decentralized service-oriented architecture through the use of our tool SON (Shared Overlay Network). The PhD student will therefore be supported by the R&D team of the Triton joint laboratory and will be able to test and validate algorithms on datasets from the new Beepeers platform developed within the Triton I-Lab. The student will also be able to rely on the scientific expertise of the Zenith project-team in scientific data management.

Expected results and candidate profile

The candidate should have a strong interest in the practical validation of research work, and good abstraction skills in order to quickly grasp and master these different data analysis and extraction techniques, which come from several scientific communities (databases, usage analysis, and distributed programming for the implementation). The candidate must be able to work in a team, in close collaboration with Beepeers, to carry out the research. This work should quickly find applications through the concrete, effective implementation of new services for the Beepeers platform.

References

Rodriguez, M.A., Neubauer, P., “The Graph Traversal Pattern,” in Graph Data Management: Techniques and Applications, eds. S. Sakr, E. Pardede, IGI Global, ISBN 9781613500538, August 2011.

Lee, H., Kwon, J., “Efficient Recommender System based on Graph Data for Multimedia Application,” International Journal of Multimedia and Ubiquitous Engineering, Vol. 8, No. 4, July 2013.

Desired profile

- Engineering school degree (five years of higher education) or second-year Master's degree (Master 2)

- Enjoys teamwork

- Good level of English (written)

How to apply

Please send the following documents by email, in PDF format, to Didier.Parigot@inria.fr:

- CV,

- cover letter focused on the topic,

- at least two letters of recommendation,

- transcripts and the list of courses taken in M2 and M1.

