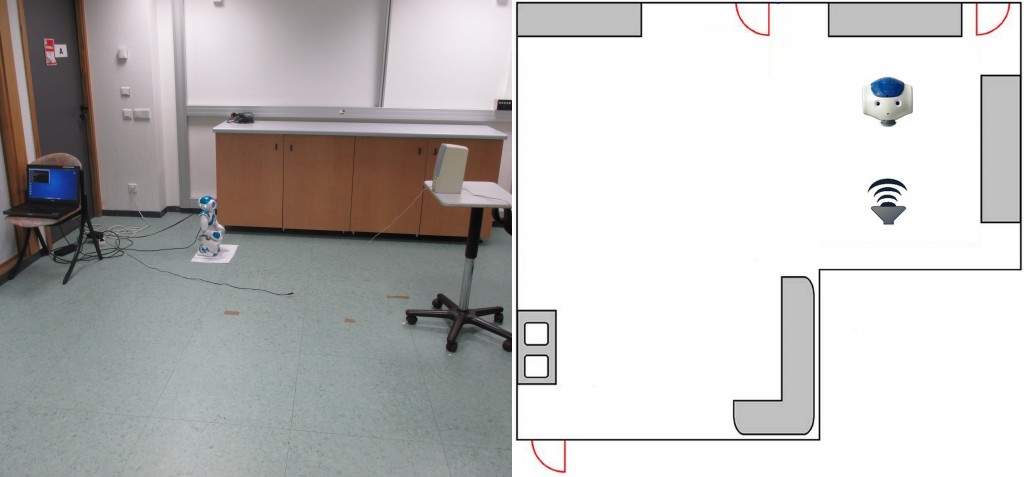

The NAL (NAO Audio Localization) dataset is composed of sounds recorded with a NAO v5 robot.

General description of the recording setup and environment:

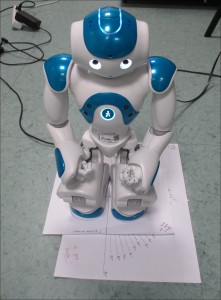

NAO is placed on the floor in several rooms, at several positions in each room. The four microphones are embedded in the robot's head, 45 cm above the floor. The sound source is a loudspeaker placed on a table top, 80 cm above the floor.
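Because the loudspeaker sits higher than the microphones, the source is always seen at a positive elevation from the head, and that elevation shrinks with distance. The robot-to-loudspeaker distances are not given here, so `horizontal_distance_m` is a hypothetical parameter. This minimal sketch also treats 80 cm as the height of the loudspeaker's acoustic center and ignores how head pitch shifts the microphone height; both are assumptions.

```python
import math

MIC_HEIGHT_M = 0.45     # microphones in NAO's head, above the floor
SOURCE_HEIGHT_M = 0.80  # loudspeaker on the table top, above the floor (assumed acoustic center)

def source_elevation_deg(horizontal_distance_m):
    """Elevation of the loudspeaker as seen from the head, in degrees.

    Ignores head pitch and the small microphone-height change it causes.
    """
    dz = SOURCE_HEIGHT_M - MIC_HEIGHT_M  # 0.35 m height difference
    return math.degrees(math.atan2(dz, horizontal_distance_m))

# Hypothetical horizontal distances between robot and loudspeaker.
for d in (1.0, 2.0, 3.0):
    print(f"{d:.1f} m -> {source_elevation_deg(d):.1f} deg")
```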

For each robot position, the body orientation varies from -90° to +90° with respect to the source direction. For each body orientation, the head rotates horizontally (yaw) from -119.5° to +119.5° in 10° steps, and vertically (pitch) from -38.5° to +29.5° in 5° steps. For each body-head posture, a sound emitted by the loudspeaker is recorded with the four microphones. Each sound consists of a speech utterance followed by a 1 s white-noise signal. The speech utterances are taken from the TIMIT database.
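The head-posture grid can be enumerated directly from the stated ranges. Note that the step sizes do not land exactly on the upper limits (from -119.5° in 10° steps the last value not above +119.5° is +110.5°; from -38.5° in 5° steps it is +26.5°), so this sketch stops at the last grid point within the range. That is an assumption: the angles actually recorded should be read from the data files. The head-frame azimuth helper assumes body and head yaw simply add and share one sign convention, with the source at 0° in the body-orientation reference.

```python
def angle_grid(start, stop, step):
    """Return start, start + step, ... up to the last value not exceeding stop."""
    n = int((stop - start) // step) + 1
    return [start + i * step for i in range(n)]

# Head joint ranges and step sizes as stated above (degrees).
HEAD_YAW = angle_grid(-119.5, 119.5, 10.0)
HEAD_PITCH = angle_grid(-38.5, 29.5, 5.0)

# All head postures for one body orientation (assumes a full yaw x pitch grid).
POSTURES = [(yaw, pitch) for yaw in HEAD_YAW for pitch in HEAD_PITCH]

def source_azimuth_in_head(body_deg, head_yaw_deg, source_deg=0.0):
    """Azimuth of the source in the head frame, wrapped to [-180, 180).

    Assumes body orientation and head yaw add up and use the same sign convention.
    """
    az = source_deg - body_deg - head_yaw_deg
    return (az + 180.0) % 360.0 - 180.0

print(len(HEAD_YAW), len(HEAD_PITCH), len(POSTURES))  # 24 14 336
```

Combining body orientations in [-90°, +90°] with head yaws in [-119.5°, +110.5°] spreads the source azimuth, as seen from the head, over most of the full circle.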


NAL_dataset1:

NAO is at a single location in the room, with its head held in a fixed orientation facing the sound source. The body orientation varies as described above.

NAL_dataset2:

NAO is in the same position as for NAL_dataset1 (RawData1). Its body orientation varies in 30° steps. The sounds are now chosen at random, so a given sound is no longer tied to a particular head position.
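A 30° pace yields a short, explicit list of body orientations. A minimal sketch, assuming the -90° to +90° range from the general setup description also applies here (the dataset text does not restate it):

```python
def body_orientations(step_deg=30, lo_deg=-90, hi_deg=90):
    """Body orientations from lo_deg to hi_deg inclusive, in step_deg increments.

    The -90..+90 range is assumed from the general setup description.
    """
    return list(range(lo_deg, hi_deg + 1, step_deg))

ORIENTS = body_orientations()
print(ORIENTS)  # [-90, -60, -30, 0, 30, 60, 90]
```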

NAL_dataset3:

NAO is placed at three different positions in the room. Its body orientation varies in 30° steps. A body orientation of 0° corresponds to NAO facing the wall behind the loudspeaker.

NAL_dataset4:

NAO is placed at seven different positions in the room. Its body orientation varies in 30° steps. A body orientation of 0° corresponds to NAO facing the source.
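NAL_dataset3 and NAL_dataset4 use different 0° references (the wall behind the loudspeaker versus the source itself), so their body angles are only comparable after subtracting the angle between the wall-facing direction and the source direction at each robot position. The sketch below assumes room coordinates in which facing that wall means heading along +y, with angles counterclockwise; the coordinate frame, the sign convention, and both helper names are hypothetical, since the dataset description does not specify them.

```python
import math

def wall_to_source_deg(robot_xy, source_xy):
    """Hypothetical helper: angle from the wall-facing direction (+y) to the
    source, measured counterclockwise, in degrees."""
    dx = source_xy[0] - robot_xy[0]
    dy = source_xy[1] - robot_xy[1]
    # A unit vector at counterclockwise angle a from +y is (-sin a, cos a).
    return math.degrees(math.atan2(-dx, dy))

def to_source_relative(body_deg, wall_to_source):
    """Convert a NAL_dataset3 body angle (0 deg = facing the wall) to the
    NAL_dataset4 convention (0 deg = facing the source), wrapped to [-180, 180)."""
    rel = body_deg - wall_to_source
    return (rel + 180.0) % 360.0 - 180.0

# Hypothetical position: source 1 m ahead and 1 m to the left of the robot.
phi = wall_to_source_deg((0.0, 0.0), (-1.0, 1.0))
print(round(phi, 6), round(to_source_relative(30.0, phi), 6))
```

When the loudspeaker lies on the line between the robot and the wall, the offset is 0° and the two conventions coincide.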

GMR

The data can optionally be processed with GMR (Gaussian Mixture Regression). The Matlab code is available here. It extracts an input/output matrix from the raw data (several input options are available) and then processes it.
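The Matlab code is not reproduced here. As a generic illustration of the regression step only, the following Python sketch computes the GMR prediction E[y | x] for a scalar input and scalar output, given a Gaussian mixture already fitted (for example with EM) on joint [x, y] samples. The parameter layout and function names are hypothetical and are not those of the Matlab code; real localization features would be multi-dimensional.

```python
import math

def gauss_pdf(x, mean, var):
    """1-D Gaussian density."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)

def gmr_predict(x, components):
    """Gaussian Mixture Regression for scalar x and scalar y.

    components: list of (weight, (mu_x, mu_y), (s_xx, s_xy, s_yy)) describing
    a mixture fitted beforehand on joint [x, y] data. Returns E[y | x].
    """
    # Responsibility of each component for this input: w_k * N(x; mu_x, s_xx).
    resp = [w * gauss_pdf(x, mx, sxx) for w, (mx, _), (sxx, _, _) in components]
    total = sum(resp)
    if total == 0.0:
        raise ValueError("input is too far from every mixture component")
    pred = 0.0
    for r, (_, (mx, my), (sxx, sxy, _)) in zip(resp, components):
        # Conditional mean of y given x within one component (linear in x).
        pred += (r / total) * (my + sxy / sxx * (x - mx))
    return pred

# Two hypothetical components: y is about -1 near x = -5 and about +1 near x = +5.
COMPS = [
    (0.5, (-5.0, -1.0), (1.0, 0.0, 1.0)),
    (0.5, (5.0, 1.0), (1.0, 0.0, 1.0)),
]
print(gmr_predict(0.0, COMPS), gmr_predict(5.0, COMPS))
```

With a single component, the prediction reduces to ordinary linear regression of y on x; with several, GMR blends the per-component regressions by their responsibilities, which lets it model non-linear input/output relations.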