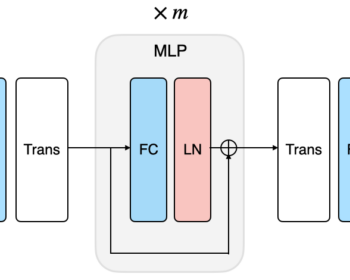

Back to MLP: A Simple Baseline for Human Motion Prediction

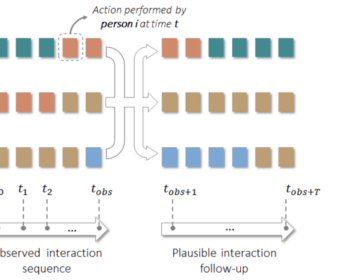

by Wen Guo*, Yuming Du*, Xi Shen, Vincent Lepetit, Xavier Alameda-Pineda, and Francesc Moreno-Noguer

IEEE/CVF Winter Conference on Applications of Computer Vision (WACV) 2023, Waikoloa, Hawaii

[paper] [code] [HAL]

Abstract. This paper tackles the problem of human motion prediction, consisting in forecasting future body poses from historically observed sequences. State-of-the-art…