We release the AVTRACK-1 dataset: audio-visual recordings used in the paper [1].

This dataset can only be used for scientific purposes.

The dataset is fully annotated with the image locations of the active speakers and the other people present in the video. The annotated locations correspond to bounding boxes. Each person is given a unique anonymous identity under the form of a digit (1, 2, 3, …) and this identity is consistent through the entire video. The annotation is done in a semi-automatic fashion, i.e., a human annotated the bounding boxes on a small video segment, and a tracker [2] is used to interpolate the bounding boxes in the remaining frames. Tracking drifts and failures were manually corrected. The active speaker is manually annotated by selecting which person is speaking at each video frame. The dataset also contains sound source locations and upper-body detections as used in our paper [1].

Neither personal nor private data or meta-data are available (first and last names, age, nationality, profession, gender, ethnicity, etc.)

Download

This release contains four audio-visual sequences. The description of the sequences follow:

| Sequence Name | Preview | Download Link | Comments |

| CHAT | [CHAT (46Mb)] | Two then three persons engaged in an informal dialog. The persons wander around and turn their heads towards the active speaker; occasionally two persons speak simultaneously. Moreover the speakers do not always face the camera. | |

| MS | [MS (17Mb)] | Two persons that move around while they are always facing the camera. The persons take speech turns but there is a short overlap. | |

| SS | [SS (16Mb)] | Two persons that are static while they are always facing the camera. Only one person speaks. | |

| SS2 | [SS2 (13Mb)] | Two persons that are static and very close to the camera. They are always facing the camera and take a speech turn with no overlap. |

Download all sequences: [AVTRACK-1 (90Mb) ]

Citation

If you use the dataset for a publication, please cite our ICCVW AVS Paper [1]

Recording Setup

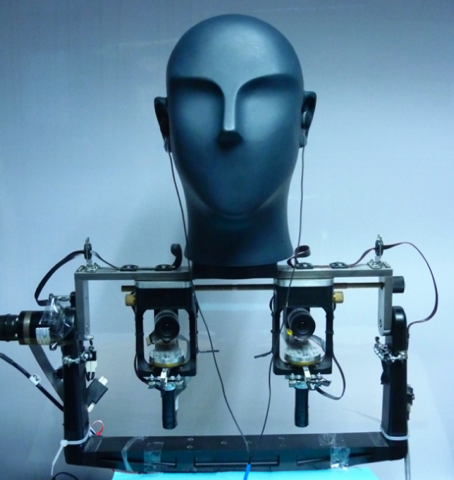

The recording is done with a dummy head equipped with 4 microphones and two cameras. The video from one of the camera is available in this dataset release. The video is recorded at 25 FPS. The Audio is sampled at 44.1Khz.

A great effort is put to make the audio and video synchronized. You can assume the audio start on the first video frame.

Annotation

The annotation file format is a CSV text-file containing one object instance per line. Each line contain 10 values:

,,,,,,,,,

: is the video frame index. Video frame always start from 0.

: is the object id. A unique number is assigned to a particular person appearing through out the video in the ground truth files.

,,, : represents the bounding box region (rectangle) on the video frame.

: is a value contains the detection confidence. This is useful only in the detection files. A value of -999 means that this particular instance is not a useful entry and can be ignored in the other files.

,, : can be ignored and are only here for a future usage. We will provide the 3D position of the object in the future release of the dataset.

Dataset File Structure

The folder structure for each recording is as follows:

README.txt

preview_video.mp4

Data/

audio.wav

video.avi

GroundTruth/

SpeakerBbox.txt

GtBbox.txt

Detection/

upperbody_det.txt

face_det.txt

SSL/

vad.txt

ssl_xx.txt

File descriptions

README.txt: README file.

preview_video.mp4: is a video synchronized with audio for a quick preview.

audio.wav : 4 channel audio file

video.avi : the video file.

SpeakerBbox.txt: the active speaker(s) bounding box

GtBbox.txt: annotated bounding box of people upper-body region

upperbody_det.txt : contains upper-body detections based on [3]

face_det.txt: contains face detections based on [4]

vad.txt: contains the result of voice activity detection per frame. 1 is a voice is detected 0 otherwise.

ssl_xx.txt: contains the sound source localization results. The xx in the file name refer to the window length used to perform localization. For example, if xx = 10, the window length is equivalent to 10 video frames =: 10 * 1/25 = 400ms.

Code and Script

A demo MATLAB script to visualize the annotation is provided with the dataset.

Uses

We used this dataset for speaker diarization task in [1] [5]. Previously, the recording were extensively used in speaker localization [6], [7].

If you would like to be cited here just drop me a note.

References

[1] Israel D Gebru, Silèye Ba, Georgios Evangelidis and Radu Horaud. Tracking the Active Speaker Based on a Joint Audio-Visual Observation Model. In ICCV 2015 workshop on 3D Reconstruction and Understanding with Video and Sound, 2015. Research Page

[2] S.-H. Bae and K.-J. Yoon. Robust online multi-object tracking based on tracklet confidence and online discriminative appearance learning. In Computer Vision and Pattern Recognition (CVPR), 2014.

[3] V. Ferrari, M. Marin-Jimenez, and A. Zisserman. Progressive search space reduction for human pose estimation. In Computer Vision and Pattern Recognition (CVPR), 2008.

[4] Zhu, Xiangxin, and Deva Ramanan. “Face detection, pose estimation, and landmark localization in the wild.” In Computer Vision and Pattern Recognition (CVPR), 2012.

[5] Gebru, I. D., Ba, S., Evangelidis, G., & Horaud, R., Audio-Visual Speech-Turn Detection and Tracking. In Latent Variable Analysis and Signal Separation, LVA/ICA 2015. Research Page

[6] Gebru, I. D., Alameda-Pineda, X., Horaud, R., & Forbes, F., Audio-visual speaker localization via weighted clustering. In IEEE International Workshop on Machine Learning for Signal Processing (MLSP), 2014. Research Page

[7] Gebru, I. D., Alameda-Pineda, X., Forbes, F., & Horaud, R., EM algorithms for weighted-data clustering with application to audio-visual scene analysis. arXiv preprint arXiv:1509.01509. Research Page