Presentation

Core statistics and ML-development

Machine learning for inverse problems

From linear inverse problems to simulation based inference

Bi-level optimization

Reinforcement learning for active k-space sampling

Statistics and causal inference in high dimension

Conditional inference in high dimension

Post-selection inference on image data

Causal inference for population analysis

Machine Learning on spatio-temporal signals

Injecting structural priors with Physics-informed data augmentation

Learning structural priors with self-supervised learning

Revealing spatio-temporal structures in physiological signals

Application domains

MIND is driven by various applications in the data-driven neuroscience fields, which are largely part of the team members’ expertise.

Population modeling, large-scale predictive modeling

Unveiling Cognition Through Population Modelling

Imaging for health in the general population

Proxy measures of brain health

Studying brain age using electrophysiology

Proxy measures of mental health beyond brain aging

Mapping cognition & brain networks

Modeling clinical endpoints

EEG-based modeling of clinical endpoints

MRI-based modeling of clinical endpoints

From brain images and bio-signals to quantitative biology and physics

Statistics and causal inference in high dimension

Statistics is the natural pathway from data to knowledge. Using statistics on brain imaging data involves dealing with high-dimensional data that can induce intensive computation and low statistical power. Besides, statistical models on large-scale data also need to take into account potential confounding effects and heterogeneity. To address these questions the MIND team employ causal modeling and post-selection inference. Conditional and post-hoc inference are rather short-term perspectives, while the potential of causal inference stands as a longer-term endeavour.

Heterogeneous Data & Knowledge Bases

Inferring the relationship between the physiological bases of the human brain and its cognitive functions requires articulating different datasets in terms of their semantics and representation. Examples of these are spatio-temporal brain images; tabular datasets; structured knowledge represented as ontologies; and probabilistic datasets. Developing formalisms that can integrate across all these modalities requires developing formalisms able to represent and efficiently perform computations on high-dimensional datasets as well as to combine hybrid data representations in deterministic and probabilistic settings. We will take on two main angles to achieve this task, the automated inference of cross-dataset features, or coordinated representations; and the use of probabilistic logic for knowledge representation and inference. The probabilistic knowledge representation part is now well advanced with the Neurolang project. It is yet a long-term endeavour. The learning of coordinated representations is less advanced.

Learning coordinated representations

Probabilistic Knowledge Representation

Activity

Results

New results

Bridging Cartesian and non-Cartesian sampling in MRI

MRI is a widely used neuroimaging technique used to probe brain tissues, their structure and provide diagnostic insights on the functional organization as well as the layout of brain vessels. However, MRI relies on an inherently slow imaging process. Reducing acquisition time has been a major challenge in high-resolution MRI and has been successfully addressed by Compressed Sensing (CS) theory. However, most of the Fourier encoding schemes under-sample existing k-space trajectories which unfortunately will never adequately encode all the information necessary. Recently, the Mind team has addressed this crucial issue by proposing the Spreading Projection Algorithm for Rapid K-space sampLING (SPARKLING) for 2D/3D non-Cartesian T2* and susceptibility weighted imaging (SWI) at 3 and 7 Tesla (T) 130, 131, 6.

These advancements have interesting applications in cognitive and clinical neuroscience. However, the original SPARKLING trajectories are prone to off-resonance effects due to susceptibility artifacts. Therefore, in 2023, two years ago, we extended the original SPARKLING methodology along two diretions : and developed the MORE-SPARKLING (Minimized Off-Resonance Effects) approach to correct for these artifacts. The fondamental idea implemented in MORE-SPARKLING is to make different k-space trajectories more homogeneous in time, in the sense that samples supported by different trajectories that are close in k-space must be collected at approximately the same time point. This allows us to mitigate the issue of off-resonance effects (signal void, geometric distortions) without increasing the scan time, as MORE-SPARKLING trajectories have exactly the same duration as their SPARKLING ancestor. This approach was published 5. Additionally, we also extended SPARKLING in another direction, namely the way we sample the center of k-space and proposed the GoLF-SPARKLING version in the same paper 5. The core idea was to reduce the over-sampling of the center of k-space and grid it to collect Cartesian data and make notably the estimation of sensitivity maps in multicoil acquisition easier.

In 2024, we have merged GoLF-SPARKLING with well-established clinically used parallel imaging techniques like GRAPPA and CAIPIRINHA. The latter are mostly limited to Cartesian sampling, and their extension to non-Cartesian sampling is not direct.In 52 we proposed to extend the SPARKLING frameworkto ensure GRAPPA accelerated Cartesian sampling in the k-space center while allowing non-Cartesian sampling in the periphery. We can now enforce Cartesian acceleration in the center of k-space through affine constraints. Using

Accelerated T1-weighted anatomical MRI using GRAPPA-GoLF-SPARKLING. Reconstructed images for the SENIOR cohort collected at NeuroSpin (3T) with MPRAGE sequence at 1mm isotropic resolution for different GS without GRAPPA (b), with GRAPPA 2×1 acceleration (c) and GRAPPA 2×2 acceleration (d). The corresponding acceleration factor (AF) and the scan times are noted at the top and bottom, respectively. The fully sampled Cartesian reference is shown in (a).

SPARKLING for fMRI

Additionally, we have shown that 3D-SPARKLING is a viable imaging technique and good alternative to Echo Planar Imaging for resting-state and task-based fMRI 11 and 12. This is illustrated in Fig. 2 during a retinotopic mapping experiment which consists in mapping the retina to the primary visual cortex. These results have been obtained at a 1mm isotropic resolution both for EPI and SPARKLING acquisitions.

Projection of the BOLD phase maps on the pial surface visualized on the inflated surface for participants V#3 (3D-SPARKLING run first) and V#4 (3D-EPI run first). 3D-SPARKLING yields improved projected BOLD phase maps for V#3 in comparison with 3D-EPI both on raw and spatially smoothed data. Opposite results were found in favor of 3D-EPI in V#4, notably on spatially smoothed data.

Deep Learning for 3D Non-Cartesian MR Image reconstruction

Deep learning and notably unrolled neural netowrk architectures have shown great promise for MRI reconstruction from undersampled data. However, there is a lack of research on validating their performance in the 3D parallel imaging acquisitions with non-Cartesian undersampling. In addition, the artifacts and the resulting image quality depend on the under-sampling pattern. To address this uncharted territory, in 2024 we extended the Non-Cartesian Primal-Dual Network (NC-PDNet) 160, to a 3D multi-coil acquisition setting. We evaluated the impact of channel-specific versus channel-agnostic training configurations and examined the effect of coil compression. Finally, using the publicly available Calgary-Campinas dataset, we benchmarked four distinct non-Cartesian undersampling patterns, with an acceleration factor of six. Our results in Fig. 3 showed that NC-PDNet trained on compressed data with varying input channel numbers achieves an average PSNR of 42.98dB for 1 mm isotropic 3- channel whole-brain 3D reconstruction. With an inference time of 4.95sec and a GPU memory usage of 5.49 GB, our approach demonstrates significant potential for clinical research application.

3D multicoil NCPDNet MRI reconstruction.Reconstruction results of the 90th slice of file e14079s3 P09216.7 from the test set in Calgary-Campinas dataset. The top row shows reconstructions using different methods, while the bottom row displays zoomed-in regions outlined by red frames. Volume-wise PSNR and SSIM scores are indicated at the top of each image.

Fast reconstructions of ultra-high resolution MR data from the 11.7T Iseult scanner

Open-source MR reconstruction tools often fail to efficiently utilize GPU resources and lack support for generalized GRAPPA implementations. Many tools are limited to 2D or 3D reconstruction, and few incorporate advanced techniques such as 2D-CAIPIRINHA, which enhances imaging capabilities. Our new open source gGRAPPA GPU accelerated Python package aims to provide a fast, flexible, open-source tool for generalized GRAPPA/CAIPI reconstruction 45. Using PyTorch, gGRAPPA runs multiple convolutional windows in batch mode to optimize GPU memory usage and accelerate reconstruction times. gGRAPPA achieves up to a 65x speedup over CPU implementations and a 6x speedup compared to non-batched GPU methods, enabling efficient and fast reconstruction of MRI scans. This tool has allowed us to outperform the Siemens image reconstructor, in terms of speed, on the world premiere 11.7T MR system (Iseult scanner available at NeuroSpin) to reconstruct the first in vivo

Ultra-high resolution in vivo brain MRI at 11.7T.Slice of a T2*-weighted 2D GRE scan at 11.7T from a healthy volunteer (approved by the national ethical committee and ANSM, the French medical device regulatory authority), with a resolution of

SNAKE-fMRI: A realistic fMRI data simulator for high resolution functional imaging

Functional Magnetic Resonance Imaging (fMRI) has emerged as a powerful non-invasive tool in neuroscience, enabling neuroscientists to understand human brain functions. However, fMRI acquisition and image reconstruction techniques are complex to optimize and benchmark due to the lack of ground truth that produces absolute and quantitative metrics. Repeating in-vivo experiments may face the issue of limited reproducibility, which is time-consuming and expensive. fMRI simulators have been developed to generate synthetic fMRI images, where brain responses are artificially added to existing or artificial data. However, they don’t simulate the complete MR acquisition process, lack flexibility, integration with post-processing, and computational efficiency. Exploring new acceleration schemes in the acquisition setting and innovative reconstruction methods with these tools is not feasible. To address these unmet needs, in 20204 we have developed SNAKE-fMRI 51, an open-source fMRI simulator that operates in both image and k-space domains to yield realistic synthetic fMRI data. Its flexibility allows us to investigate various acquisition setups regarding SNR and acceleration factors to reach higher spatial and temporal resolution and validate reconstruction methods against those scenarios. Its key principles are summarized in Fig. 5 and some comparative results are shown in Fig. 6.

SNAKE-fMRI simulator.Acquisition method implemented in SNAKE – The case represented is simplified to a 2D Cartesian case (e.g., a projected view of a 3D non-accelerated EPI scheme). Each shot (i.e., a plane in 3D EPI) of the k-space sampling pattern is acquired separately from an on-the-fly simulated volume in the image domain, as shown in the blue frame. The shots are numbered here from 1 to 7. The acquisition is performed in parallel for each tissue type to apply the T2* relaxation model.

Activations maps superimposed on the mean fMRI image in the high-temporal (0.7s) imaging setup using stack-of-spiral sampling and CS reconstruction.Top row: activation maps where a tiume-varying sampling pattern has been used. Bottom row: static sampling pattern, scan and repeat strategy. From left to right: different image reconstruction strategies (COLD, REFINED, WARM), which correspond to different initialization in a framewise CS reconstruction method. Detected activations surviving at

The interest of this simulator will be to provide ground truth data to train deep learning models for fMRI reconstruction in 2025.

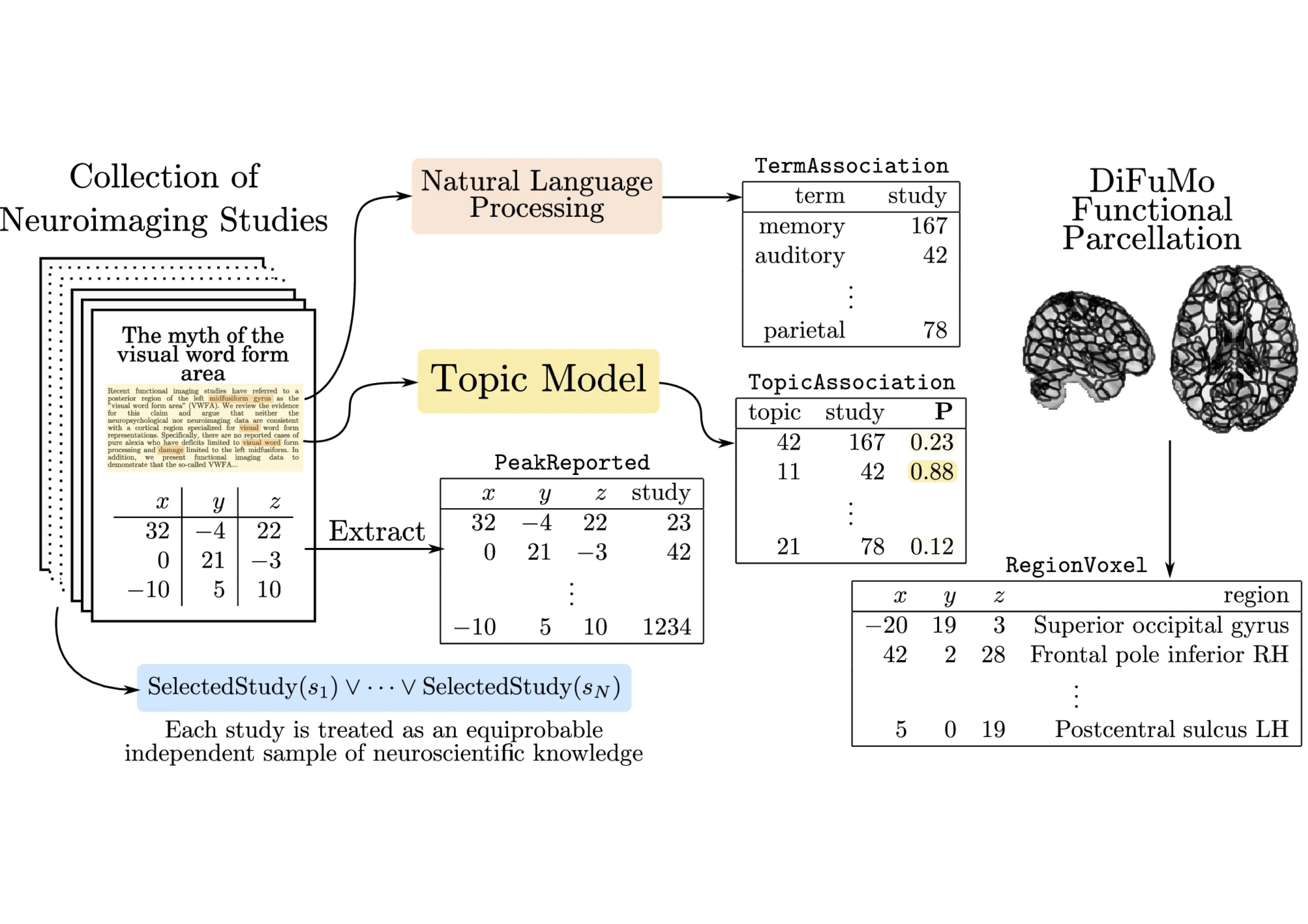

NeuroConText: Contrastive text-to-brain mapping for neuroscientific literature

Hundreds of neuroscientific articles are published annually, highlighting the continuous growth and expansion of knowledge in this field. These contributions from scientists and researchers provide new insights and findings that enhance our understanding of brain functions. Meta-analysis is a statistical tool that combines results from multiple studies to improve the reliability and generalizability of neuroscientific findings. It serves three key purposes: First, it synthesizes information from the literature to build the state of the art by offering a consolidated view of what is currently known. This allows researchers to see where the field stands collectively and highlights consensus or inconsistencies. Second, meta-analysis provides context to interpret new results by comparing novel experimental data with existing patterns in the meta-analysis of the literature, which clarifies how new data align with or deviate from established findings. Third, meta-analysis generates hypotheses on candidate brain regions or relevant cognitive domains by revealing patterns that might be central to a phenomenon.

In 34, we introduced NeuroConText, a novel coordinate-based meta-analysis (CBMA) tool to bridge the three heterogeneous modalities commonly found in neuroscientific studies: text, reported brain activation coordinates, and brain images. NeuroConText uses neuroscientific articles to extract their text and activation coordinates. We benefited from the embeddings of advanced large language models (LLM) for text feature representation. Additionally, we used Kernel Density Estimation (KDE) to reconstruct brain maps from the coordinates 170, 169. To address the high dimensionality of the brain images reconstructed by KDE, we employed the dictionary of functional modes (DiFuMo), a probabilistic atlas that effectively reduces data dimensionality 99.

Then, NeuroConText defines a shared latent space between text and coordinates, using contrastive learning to retrieve the brain activation coordinates corresponding to the input text. NeuroConText can analyze long texts, leveraging the complete information in articles’ text to enhance the accuracy of text-to-brain associations. By incorporating advanced language models like Mistral-7B, it processes complex neuroscientific text and extracts the semantic in the text 125. NeuroConText considerably outperforms existing regression-based state-of-the-art methods NeuroQuery and Text2Brain in associating text with brain activations, achieving a threefold improvement in the retrieval task. To improve our understanding of the internal mechanisms of the NeuroConText model, we also evaluated its ability to reconstruct brain activation contrasts from text latent representations using descriptions of the NeuroVault dataset 115. The quality of these reconstructed activation maps is found to be comparable with state-of-the-art baselines.

NeuroConText:(a) We train a contrastive model on a large corpus to retrieve a shared latent space between coordinates and text from neuroscientific articles. Pre-trained LLMs are used to obtain an initial text embedding, and a projection layer aligns this embedding with those of coordinates. Snowflakes denote models with frozen weights. (b) A decoder is trained from the text latent space to reproduce brain images from any query, enabling the mapping of queries into brain representations.

Improved priors for inverse problem resolution

Selecting an appropriate prior to compensate for information loss due to the measurement operator is a fundamental challenge in inverse problems. Implicit priors based on denoising neural networks have become central to widely-used frameworks such as Plug-and-Play (PnP) algorithms. During this year, we made several progress to better design implicit priors based on existing denoisers. First, we proposed to enforce equivariance to certain groups of transformations (rotations, reflections, and/or translations) on the denoiser used as implicit priors. This simple procedure strongly improves the stability of the algorithm as well as its reconstruction quality. We derived theoretical insights which highlight the role of equivariance on better performance and stability, and experiments on multiple imaging modalities and denoising networks show numerically these benefits. Then, we introduce Fixed-points of Restoration (FiRe) priors as a new framework for expanding the notion of priors in PnP to general restoration models beyond traditional denoising models. The key insight behind FiRe is that natural images emerge as fixed points of the composition of a degradation operator with the corresponding restoration model. Adopting this fixed-point perspective, we show how various restoration networks can effectively serve as priors for solving inverse problems. Experimental results validate the effectiveness of FiRe across various inverse problems, establishing a new paradigm for incorporating pretrained restoration models into PnP-like algorithms.

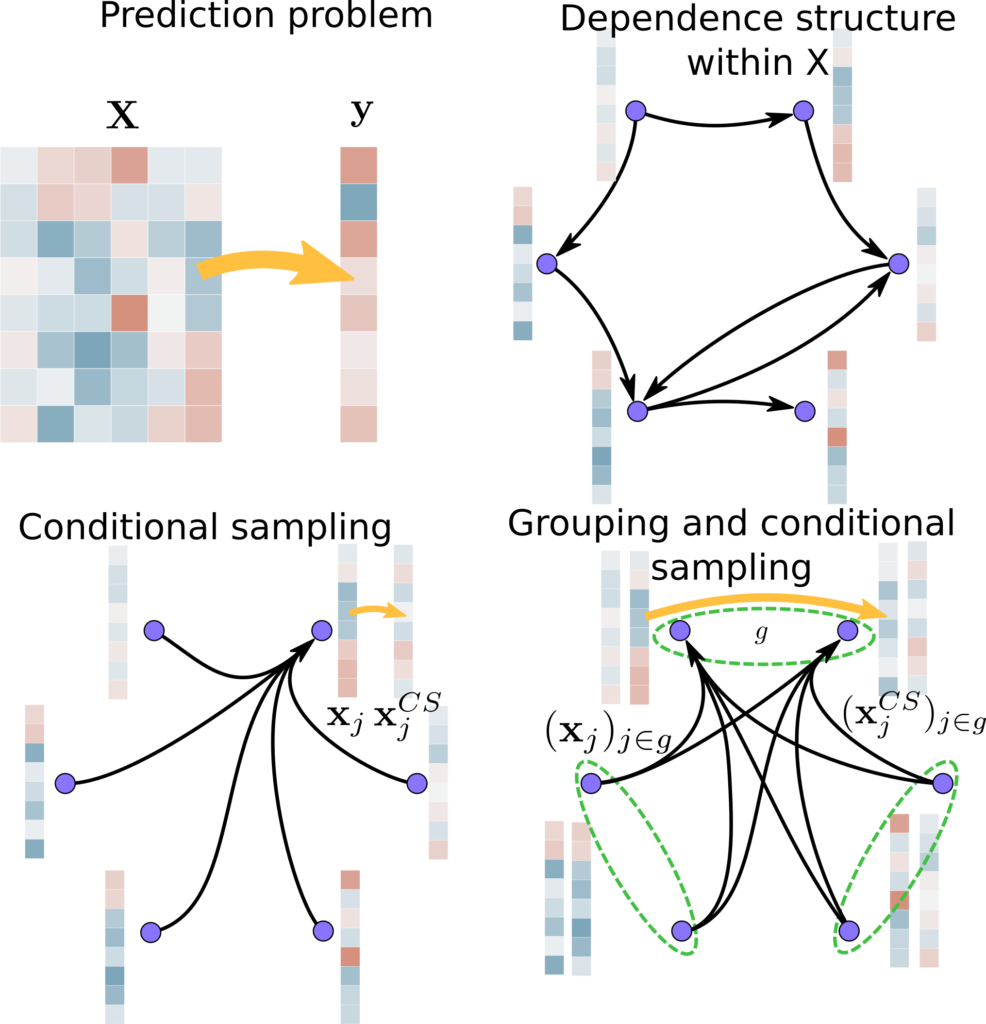

Statistically Valid Variable Importance Assessment through Conditional Permutations

Variable importance assessment has become a crucial step in machine-learning applications when using complex learners, such as deep neural networks, on large-scale data. Removal-based importance assessment is currently the reference approach, particularly when statistical guarantees are sought to justify variable inclusion. It is often implemented with variable permutation schemes. On the flip side, these approaches risk misidentifying unimportant variables as important in the presence of correlations among covariates. Here we develop a systematic approach for studying Conditional Permutation Importance (CPI) that is model agnostic and computationally lean, as well as reusable benchmarks of state-of-the-art variable importance estimators. We show theoretically and empirically that CPI overcomes the limitations of standard permutation importance by providing accurate type-I error control. When used with a deep neural network, CPI consistently showed top accuracy across benchmarks. An experiment on real-world data analysis in a largescale medical dataset showed that CPI provides a more parsimonious selection of statistically significant variables. Our results suggest that CPI can be readily used as drop-in replacement for permutation-based methods.

Performance of Conditional permutation-based vs standard permutation-based variable importance: Performance at detecting important variables on simulated data with

(A): The type-I error quantifies to which extent the rate of low p-values (

(B): The AUC score measures to which extent variables are ranked consistently with the ground truth. Dashed line: targeted type-I error rate. Solid line: chance level.

False Discovery Proportion control for aggregated Knockoffs

Controlled variable selection is an important analytical step in various scientific fields, such as brain imaging or genomics. In these high-dimensional data settings, considering too many variables leads to poor models and high costs, hence the need for statistical guarantees on false positives. Knockoffs are a popular statistical tool for conditional variable selection in high dimension. However, they control for the expected proportion of false discoveries (FDR) and not their actual proportion (FDP). We present a new method, KOPI, that controls the proportion of false discoveries for Knockoff-based inference. The proposed method also relies on a new type of aggregation to address the undesirable randomness associated with classical Knockoff inference. We demonstrate FDP control and substantial power gains over existing Knockoff-based methods in various simulation settings and achieve good sensitivity/specificity tradeoffs on brain imaging and genomic data.

Application of Kopi to cognitive brain imaging.We have employed KOPI on fMRI and genomics data. The aim of fMRI data analysis is to recover relevant brain regions for a given cognitive task as shown below. Here we display brain regions whose activity predicts that the participant is atending to stimuli with social motion.

Clinical biomarkers for epilepsy

Postsurgical seizure freedom in drug-resistant epilepsy (DRE) patients varies from 30% to 80%, implying that in many cases the current approaches fail to fully map the epileptogenic zone (EZ). We aimed to advance a novel approach to better characterize epileptogenicity and investigate whether the EZ encompasses a broader epileptogenic network (EpiNet) beyond the seizure zone (SZ) that exhibits seizure activity. We first used computational modeling to test putative complex systems-driven and systems neuroscience-driven mechanistic biomarkers for epileptogenicity. We then used these biomarkers to extract features from resting-state stereo-electroencephalograms recorded from DRE patients and trained supervised classifiers to localize the SZ against gold standard clinical localization. To further explore the prevalence of pathological features in an extended brain network outside of the clinically identified SZ, we also used unsupervised classification. Supervised SZ classification trained on individual features achieved accuracies of 0.6–.07 area under the receiver operating characteristic curve (AUC).* Combining all criticality and synchrony features further improved the AUC to 0.85. Unsupervised classification discovered an EpiNet-like cluster of brain regions, in which 51% of brain regions were outside of the SZ. Brain regions in the EpiNet-like cluster engaged in interareal hypersynchrony and locally exhibited high-amplitude bistability and excessive inhibition, which was strikingly similar to the high seizure risk regime revealed by our computational modeling. The finding that combining biomarkers improves SZ localization accuracy indicates that the novel mechanistic biomarkers for epileptogenicity employed here yield synergistic information. On the other hand, the discovery of SZ-like brain dynamics outside of the clinically defined SZ provides empirical evidence of an extended pathophysiological EpiNet.

Individual level evidence of differences between seizure zone (SZ) and non-SZ (nSZ). Top: Five minutes of broadband and narrowband traces from (A) an SZ contact and (B) an nSZ contact from the frontal region of a representative subject. Center: Criticality and synchrony assessments differentiated seizure zone (SZ) and non-SZ (nSZ) on the population level using band-collapsed criticality indices. Bottom: Achieving optimal seizure zone (SZ) classification by combining all criticality and synchrony features.

Activity reports

Overall objectives

The Mind team, which finds its origin in the Parietal team, is uniquely equipped to impact the fields of statistical machine learning and artificial intelligence (AI) in service to the understanding of brain structure and function, in both healthy and pathological conditions.

AI with recent progress in statistical machine learning (ML) is currently aiming to revolutionize how experimental science is conducted by using data as the driver of new theoretical insights and scientific hypotheses. Supervised learning and predictive models are then used to assess predictability. We thus face challenging questions like Can cognitive operations be predicted from neural signals? or Can the use of anesthesia be a causal predictor of later cognitive decline or impairment?

To study brain structure and function, cognitive and clinical neuroscientists have access to various neuroimaging techniques. The Mind team specifically relies on non-invasive modalities, notably on one hand, magnetic resonance imaging (MRI) at ultra-high magnetic field to reach high spatial resolution and, on the other hand, electroencephalography (EEG) and magnetoencephalography (MEG), which allow the recording of electric and magnetic activity of neural populations, to follow brain activity in real time. Extracting new neuroscientific knowledge from such neuroimaging data however raises a number of methodological challenges, in particular in inverse problems, statistics and computer science. The Mindproject aims to develop the theory and software technology to study the brain from both cognitive to clinical endpoints using cutting-edge MRI (functional MRI, diffusion weighted MRI) and MEG/EEG data. To uncover the most valuable information from such data, we need to solve a large panoply of inverse problems using a hybrid approach in which machine or deep learning is used in combination with physics-informed constraints.

Once functional imaging data is collected the challenge of statistical analysis becomes apparent. Beyond the standard questions (Where, when and how can statistically significant neural activity be identified?), Mind is particularly interested in addressing driving effect or the cause of such activity in a given cortical region. Answering these basic questions with computer programs requires the development of methodologies built on the latest research on causality, knowledge bases and high-dimensional statistics.

The field of neuroscience is now embracing more open science standards and community efforts to address the referenced to as “replication crisis” as well as the growing complexity of the data analysis pipelines in neuroimaging. The Mindteam is ideally positioned to address these issues from both angles by providing reliable statistical inference schemes as well as open source software that are compliant with international standards.

The impact of Mindwill be driven by the data analysis challenges in neuroscience but also by the fundamental discoveries in neuroscience that presently inspire the development of novel AI algorithms. The Parietal team has proved in the past that this scientific positioning leads to impactful research. Hence, the newly created Mind team formed by computer scientists and statisticians with a deep understanding of the field of neuroscience, from data acquisition to clinical needs, offers a unique opportunity to expand and explore more fully uncharted territories.