by Yihong Xu*, Yutong Ban*, Guillaume Delorme, Chuang Gan, Daniela Rus and Xavier Alameda-Pineda


Abstract:

Transformers have shown superior performance on a wide variety of tasks since their introduction, which in recent years has drawn the attention of the vision community to tasks such as image classification and object detection. Despite this wave, building an accurate and efficient multiple-object tracking (MOT) method with transformers is not a trivial task. We argue that directly applying a transformer architecture, with its quadratic complexity and insufficient noise-initialized sparse queries, is not optimal for MOT. Inspired by recent research, we propose TransCenter, a transformer-based MOT architecture with dense representations for accurately tracking all the objects while keeping a reasonable runtime. Methodologically, we propose the use of dense image-related multi-scale detection queries produced by an efficient transformer architecture. The queries allow inferring targets’ locations globally and robustly from dense heatmap outputs. In parallel, a set of efficient sparse tracking queries interacts with image features in the TransCenter Decoder to associate object positions through time. TransCenter exhibits remarkable performance improvements and outperforms the current state of the art by a large margin on two standard MOT benchmarks [1] [2] in both tracking settings (public and private detections). The proposed efficient and accurate transformer architecture for MOT is validated by an extensive ablation study, demonstrating its advantage over more naive alternatives and concurrent works.
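The abstract states that target locations are inferred from dense heatmap outputs. A common way to read object centers off such a heatmap (popularized by CenterNet) is to keep the local maxima found with a 3×3 max filter and discard low-scoring peaks. The sketch below assumes that decoding step; the function names and the `threshold` and `top_k` values are hypothetical choices for illustration, not taken from TransCenter.

```python
import numpy as np

def max_pool_3x3(heatmap: np.ndarray) -> np.ndarray:
    """3x3 max filter that keeps the input's spatial size (borders padded with -inf)."""
    padded = np.pad(heatmap, 1, mode="constant", constant_values=-np.inf)
    h, w = heatmap.shape
    # Stack the 9 shifted views of the padded map and take the element-wise maximum.
    windows = [padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]
    return np.max(np.stack(windows), axis=0)

def decode_centers(heatmap: np.ndarray, threshold: float = 0.3, top_k: int = 100):
    """Return (row, col, score) for local maxima above `threshold`, best first.

    Hypothetical sketch of CenterNet-style peak extraction, not TransCenter's code.
    """
    # A pixel is a peak if it equals the max of its 3x3 neighbourhood.
    is_peak = (heatmap == max_pool_3x3(heatmap)) & (heatmap > threshold)
    rows, cols = np.nonzero(is_peak)
    scores = heatmap[rows, cols]
    order = np.argsort(-scores)[:top_k]
    return [(int(rows[i]), int(cols[i]), float(scores[i])) for i in order]

hm = np.zeros((5, 6))
hm[1, 1] = 0.9
hm[1, 2] = 0.5  # neighbour of the first peak, suppressed by the max filter
hm[3, 4] = 0.7
print(decode_centers(hm))  # [(1, 1, 0.9), (3, 4, 0.7)]
```

Replacing explicit box NMS with this neighbourhood test is what makes center-based decoding cheap: every heatmap pixel is scored once, and only isolated peaks survive.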

Contributions:

- We introduce TransCenter, the first center-based transformer framework for MOT, which is also among the first to show the benefits of using transformer-based architectures for MOT.

- We carefully explore different network structures for combining the transformer with center representations, specifically proposing dense image-related multi-scale representations that are mutually correlated within the transformer attention and produce abundant but less noisy tracks, while remaining computationally efficient.

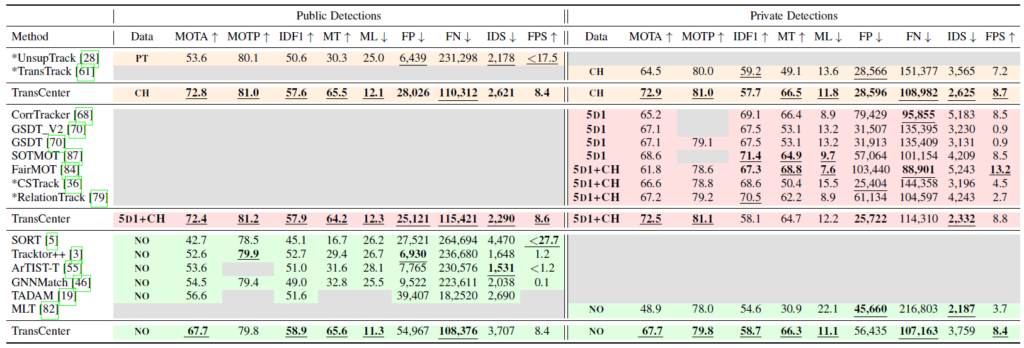

- We extensively compare TransCenter with up-to-date online MOT methods. TransCenter sets a new state-of-the-art baseline by large margins on both MOT17 [2] (+4.0% Multiple-Object Tracking Accuracy, MOTA) and MOT20 [1] (+18.8% MOTA) and, at the time of writing, leads both MOT challenges among published methods.
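The gains above are reported in MOTA. For reference, the standard CLEAR MOT definition is MOTA = 1 − Σ(FN + FP + IDSW) / ΣGT, where false negatives, false positives, identity switches and ground-truth objects are all accumulated over every frame. The helper below is an illustrative sketch of that formula (the function and argument names are hypothetical), not the official MOTChallenge evaluation code.

```python
from typing import Sequence

def mota(fn: Sequence[int], fp: Sequence[int], idsw: Sequence[int], gt: Sequence[int]) -> float:
    """CLEAR MOT accuracy from per-frame counts (illustrative sketch).

    fn, fp, idsw: per-frame false negatives, false positives and identity switches.
    gt: per-frame number of ground-truth objects.
    """
    total_gt = sum(gt)
    if total_gt <= 0:
        raise ValueError("MOTA is undefined without ground-truth objects")
    errors = sum(fn) + sum(fp) + sum(idsw)
    # Errors are normalized by all ground-truth objects, so the score can drop below 0.
    return 1.0 - errors / total_gt

# Two frames with 10 ground-truth objects each and 5 errors in total.
print(mota(fn=[1, 2], fp=[0, 1], idsw=[0, 1], gt=[10, 10]))  # 0.75
```

Because all three error types share one denominator, MOTA becomes negative whenever a tracker makes more errors than there are annotated objects, which can happen in very crowded scenes such as those in MOT20.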

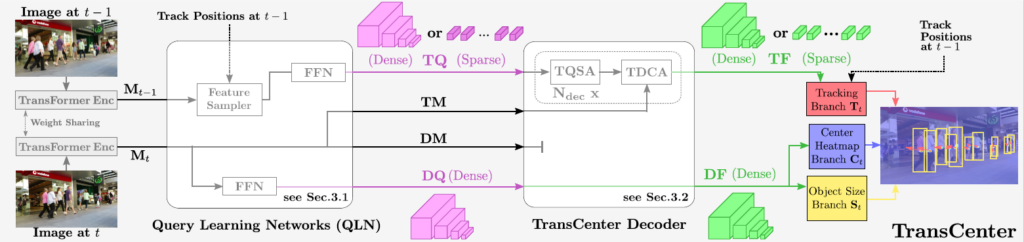

Pipeline:

Generic pipeline of TransCenter and its variants: images at $t$ and $t-1$ are fed to the transformer encoder (DETR-Encoder or PVT-Encoder) to produce multi-scale memories $M_t$ and $M_{t-1}$, respectively. These memories are passed, together with the track positions at $t-1$, to the Query Learning Networks (QLN), which operate along the feature (channel) dimension. The QLN produce (1) dense pixel-level multi-scale detection queries (DQ), (2) detection memory (DM), (3) sparse or dense tracking queries (TQ), and (4) tracking memory (TM). To associate objects across frames, the TransCenter Decoder performs cross-attention between TQ and TM, producing Tracking Features (TF). For detection, the TransCenter Decoder either computes the cross-attention between DQ and DM or, in our efficient versions (TransCenter and TransCenter-Lite, see Sec. 3), outputs DQ directly, yielding Detection Features (DF) for the output branches $S_t$ and $C_t$. TF, together with the object positions at $t-1$ (sparse TQ) or the center heatmap $C_{t-1}$ (omitted in the figure for simplicity) and DF (dense TQ), are used to estimate the center displacements $T_t$, which give, for each center, its displacement between adjacent frames (red arrows). The figure also details the QLN and TransCenter Decoder structures we chose for TransCenter.
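The caption describes predicting center displacements $T_t$ between adjacent frames but does not spell out the matching rule. The sketch below assumes a CenterTrack-style greedy scheme: each detected center at t is shifted by its predicted displacement (assumed here to point back to t−1), then matched to the nearest unclaimed track at t−1 within a hypothetical `max_dist` radius; unmatched detections open new tracks. The function `associate` and its parameters are illustrative and are not TransCenter's released code.

```python
import numpy as np

def associate(curr_centers: np.ndarray, displacements: np.ndarray,
              prev_centers: np.ndarray, prev_ids: list[int],
              max_dist: float, next_id: int) -> tuple[list[int], int]:
    """Greedy center-displacement association (hypothetical sketch).

    curr_centers:  (N, 2) detected centers at frame t.
    displacements: (N, 2) predicted offsets, ASSUMED to point from t back to t-1.
    prev_centers:  (M, 2) track centers at frame t-1, with identities prev_ids.
    Returns one identity per current detection and the updated next_id.
    """
    projected = curr_centers + displacements  # estimated positions at t-1
    ids = [-1] * len(curr_centers)
    if len(curr_centers) and len(prev_centers):
        # Pairwise Euclidean distances between projected and previous centers.
        dist = np.linalg.norm(projected[:, None, :] - prev_centers[None, :, :], axis=-1)
        used_prev = set()
        # Visit candidate pairs from closest to farthest; each side is matched at most once.
        for flat in np.argsort(dist, axis=None):
            i, j = (int(v) for v in np.unravel_index(flat, dist.shape))
            if dist[i, j] > max_dist:
                break
            if ids[i] == -1 and j not in used_prev:
                ids[i] = prev_ids[j]
                used_prev.add(j)
    # Detections left unmatched start new tracks.
    for i in range(len(ids)):
        if ids[i] == -1:
            ids[i] = next_id
            next_id += 1
    return ids, next_id

prev_centers = np.array([[10.0, 10.0], [50.0, 50.0]])
curr_centers = np.array([[52.0, 51.0], [12.0, 11.0], [90.0, 90.0]])
displacements = np.array([[-2.0, -1.0], [-2.0, -1.0], [0.0, 0.0]])
print(associate(curr_centers, displacements, prev_centers, [7, 8], max_dist=5.0, next_id=9))
# ([8, 7, 9], 10)
```

Greedy matching is cheap and usually sufficient when displacement predictions are accurate; a Hungarian assignment (for example `scipy.optimize.linear_sum_assignment` on the same distance matrix) is an alternative when a globally optimal matching is preferred.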

Comparison to state-of-the-art methods:

Results on the MOT17 test set: the left and right halves of the table correspond to public and private detections, respectively. The cell background color encodes the amount of extra training data: green for none, orange for one extra dataset, red for five or more extra datasets. Methods marked with * are not associated with a publication. The best result within the same training conditions (background color) is underlined. The best result among published methods is in bold. Best seen in color.

Qualitative visualizations:

References:

[1] Dendorfer, P., Rezatofighi, H., Milan, A., Shi, J., Cremers, D., Reid, I., Roth, S., Schindler, K. & Leal-Taixé, L. MOT20: A benchmark for multi object tracking in crowded scenes. arXiv:2003.09003 [cs], 2020.

[2] Milan, A., Leal-Taixé, L., Reid, I., Roth, S. & Schindler, K. MOT16: A Benchmark for Multi-Object Tracking. arXiv:1603.00831 [cs], 2016.