The aim of the Figurine project is to provide a storytelling aid for narrative design. A narrator wants to share or construct a story and can use objects, characters and decor to set the scene. To help the narrator visualize the scene, real objects are manipulated as tangible interfaces. This makes it possible to use any object as part of the stage set and to personalize the decor. The perception system provides a 3D+time representation of the scene, i.e. the positions of the figurines and stage set over time, annotated with the narrator's facial expressions and speech. The final goal of the Figurine project is to process this representation in order to automatically produce a 3D movie from the storytelling session.
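To make the idea of a 3D+time representation more concrete, here is a minimal sketch of how such a recording could be organized. It is not the project's actual data format: every class and field name (`ObjectPose`, `NarratorAnnotation`, `SceneFrame`, `SceneRecording`) is hypothetical, and the quaternion encoding of orientation is an assumption made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), assumed convention


@dataclass
class ObjectPose:
    """Pose of one figurine or stage element at a given instant (hypothetical)."""
    position: Vec3
    orientation: Quat = (1.0, 0.0, 0.0, 0.0)
    visible: bool = True  # whether the top camera currently sees the object


@dataclass
class NarratorAnnotation:
    """Annotations attached to the narrator at a given instant (hypothetical)."""
    facial_expression: Optional[str] = None
    speaking: bool = False


@dataclass
class SceneFrame:
    """One time step: object poses plus narrator annotations."""
    timestamp: float  # seconds since the start of the session
    poses: Dict[str, ObjectPose] = field(default_factory=dict)
    narrator: NarratorAnnotation = field(default_factory=NarratorAnnotation)


@dataclass
class SceneRecording:
    """Time-ordered sequence of frames for one storytelling session."""
    frames: List[SceneFrame] = field(default_factory=list)

    def add(self, frame: SceneFrame) -> None:
        # Keep frames in chronological order.
        if self.frames and frame.timestamp < self.frames[-1].timestamp:
            raise ValueError("frames must be added in chronological order")
        self.frames.append(frame)

    def trajectory(self, object_id: str) -> List[Tuple[float, Vec3]]:
        # Positions of one object over time, skipping frames where it is absent.
        return [
            (f.timestamp, f.poses[object_id].position)
            for f in self.frames
            if object_id in f.poses
        ]
```

Under these assumptions, a downstream stage such as a movie generator could query `trajectory("knight")` for a figurine's path and read the narrator annotations frame by frame.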

The simplicity of this approach also allows children to be narrators and to tell their own stories. A child can imagine their own world, play with the figurines, and then watch the movie generated from their creation. More than a playful activity, the generated 3D movie could become a pedagogical aid that supports the development of language, social and cognitive skills.

This is a joint project between the IMAGINE team from LJK/Inria and the PRIMA team from LIG/Inria.

Acquisition setup

Scenario

The narrator takes a position in front of the recording table. Several stage elements and different figurines are available. The narrator first chooses decor items and up to 3 figurines to play with, then arranges the stage as desired to tell the story. The system records everything about the augmented figurines, the decor and the storyteller(s). In its current configuration, due to space constraints, the system can handle at most two simultaneous narrators.

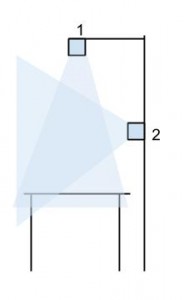

Hardware setup

The hardware consists of a simple table and two RGB-D sensors. The first RGB-D sensor is mounted above the table. We chose a Kinect for Windows device (version 1) because its depth precision is good enough to track the movements of the stage set and the figurines. The second RGB-D sensor is a Kinect 2, which outperforms the former version in body and facial expression tracking. This second sensor faces the narrators to record their gestures and faces. The system also records the narrators' speech and detects speech activity within the audio stream, which complements the facial expression annotation.
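The text does not say how speech activity is detected. As an illustration only, the sketch below uses a simple energy threshold, which is one common baseline and not necessarily the method used in the project. The function names, the frame length and the threshold value are all assumptions.

```python
import math
from typing import List, Sequence, Tuple


def frame_rms(samples: Sequence[float], frame_len: int) -> List[float]:
    """Root-mean-square energy of consecutive, non-overlapping frames."""
    values = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        values.append(math.sqrt(sum(s * s for s in frame) / frame_len))
    return values


def detect_speech(samples: Sequence[float], frame_len: int = 160,
                  threshold: float = 0.02) -> List[bool]:
    """Flag each frame as speech when its RMS energy exceeds the threshold.

    The threshold is an arbitrary illustrative value; a real system would
    calibrate it against the ambient noise level.
    """
    return [rms > threshold for rms in frame_rms(samples, frame_len)]


def speech_segments(flags: Sequence[bool],
                    frame_duration: float) -> List[Tuple[float, float]]:
    """Merge consecutive active frames into (start, end) intervals in seconds."""
    segments = []
    start = None
    for i, active in enumerate(flags):
        if active and start is None:
            start = i
        elif not active and start is not None:
            segments.append((start * frame_duration, i * frame_duration))
            start = None
    if start is not None:
        segments.append((start * frame_duration, len(flags) * frame_duration))
    return segments
```

The resulting intervals could then be aligned by timestamp with the facial expression track, so that each annotated expression also carries a speaking or silent flag.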

Figurines and stage set

Figurines are objects augmented with Inertial Measurement Unit (IMU) sensors. These sensors are used to compute the orientation of the figurines and to localize them even when they are not visible from the camera. We used 3D-printed figurines with a common base embedding an IMU sensor. Any character can be clipped onto this base, so narrators can even print the characters they want using a 3D printer.
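As a rough illustration of how IMU data and camera observations might be combined, the sketch below integrates gyroscope readings into an orientation quaternion and keeps the last camera position while a figurine is hidden. This is a simplified assumption, not the project's actual tracking method: the `FigurineTracker` class and its methods are hypothetical, and a real system would typically also use the accelerometer (and possibly a magnetometer) to correct gyroscope drift.

```python
import math
from typing import Optional, Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z)
Vec3 = Tuple[float, float, float]


def quat_multiply(a: Quat, b: Quat) -> Quat:
    """Hamilton product of two quaternions."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def integrate_gyro(q: Quat, omega: Vec3, dt: float) -> Quat:
    """Rotate q by the body-frame angular velocity omega (rad/s) over dt seconds."""
    wx, wy, wz = omega
    rate = math.sqrt(wx * wx + wy * wy + wz * wz)
    angle = rate * dt
    if angle == 0.0:
        return q
    s = math.sin(angle / 2.0) / rate  # scales omega to the rotation axis part
    delta = (math.cos(angle / 2.0), wx * s, wy * s, wz * s)
    w, x, y, z = quat_multiply(q, delta)
    norm = math.sqrt(w * w + x * x + y * y + z * z)
    return (w / norm, x / norm, y / norm, z / norm)


class FigurineTracker:
    """Hypothetical per-figurine state: IMU orientation plus camera position."""

    def __init__(self) -> None:
        self.orientation: Quat = (1.0, 0.0, 0.0, 0.0)
        self.position: Optional[Vec3] = None
        self.visible = False

    def on_imu(self, omega: Vec3, dt: float) -> None:
        # Orientation is updated from the IMU whether or not the camera sees it.
        self.orientation = integrate_gyro(self.orientation, omega, dt)

    def on_camera(self, position: Optional[Vec3]) -> None:
        # None means the figurine is occluded: keep the last known position.
        if position is None:
            self.visible = False
        else:
            self.position = position
            self.visible = True
```

Holding the last known position is the simplest possible fallback; it only shows where IMU data continues to be useful once the camera loses sight of a figurine.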

Several objects can be on the stage. We do not need highly accurate tracking for these stage elements, as they do not move much. Even everyday objects can be used as stage set elements. The top depth camera follows them, but since they are not equipped with IMU sensors, they cannot be tracked when they are not visible. In our experiment, we used wooden objects built with a laser cutter.

First results

As shown in the following figure, the recording system is working. One can record a storyteller while they tell their story. The system produces the temporal representation of the storytelling session.

We are currently working on integrating these data into the 3D movie generator.

Acknowledgement

This project was supported by Persyval-Lab and Amiqual4Home. We would like to thank Laurence Boissieux from the Inria SED for her help in this project.