Total Variation meets Sparsity: statistical learning with segmenting penalties

Prediction from medical images is a valuable aid to diagnosis. For instance, anatomical MR images can reveal certain disease conditions, while their functional counterparts can predict neuropsychiatric phenotypes. However, a physician will not rely on predictions from black-box models: understanding the anatomical or functional features that underpin a decision is critical. Generally, the weight vectors of classifiers are not easily amenable to such an examination: often there is no apparent structure. Indeed, this is not only a prediction task, but also an inverse problem that calls for adequate regularization. We address this challenge by introducing a convex region-selecting penalty. Our penalty combines total-variation regularization, which enforces spatial contiguity, and ℓ1 regularization, which enforces sparsity, into one group: voxels are either active with a non-zero spatial derivative, or zero with an inactive spatial derivative. This leads to segmenting contiguous spatial regions (inside which the signal can vary freely) against a background of zeros. Such segmentation of medical images in a target-informed manner is an important analysis tool. On several prediction problems from brain MRI, the penalty shows good segmentation. Given the size of medical images, computational efficiency is key. With this in mind, we contribute an efficient optimization scheme that brings significant computational gains.
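To make the grouping idea concrete, here is a minimal NumPy sketch contrasting a TV-l1 penalty with a grouped penalty of the kind described above. It is an illustration under stated assumptions, not the implementation behind the results on this page: the forward finite-difference gradient, the mixing parameter `rho`, and the exact weighting of the two terms are hypothetical choices, and the function names are invented for this example. TV-l1 sums the gradient norm and the absolute value of each voxel separately, whereas the grouped version takes a single Euclidean norm per voxel over the gradient and the voxel value together.

```python
import numpy as np


def spatial_gradient(w):
    """Forward finite differences of an n-d image, one component per axis.

    Returns an array of shape (w.ndim,) + w.shape. The last slice along
    each axis is left at zero (an assumed boundary convention).
    """
    grads = np.zeros((w.ndim,) + w.shape)
    for axis in range(w.ndim):
        slicer = [slice(None)] * w.ndim
        slicer[axis] = slice(0, -1)
        grads[axis][tuple(slicer)] = np.diff(w, axis=axis)
    return grads


def tv_l1_penalty(w, rho=0.5):
    """(1 - rho) * isotropic TV + rho * l1: the two terms are summed separately."""
    g = spatial_gradient(w)
    tv = np.sqrt((g ** 2).sum(axis=0)).sum()
    l1 = np.abs(w).sum()
    return (1 - rho) * tv + rho * l1


def sparse_variation_penalty(w, rho=0.5):
    """Grouped penalty: one Euclidean norm per voxel over [gradient, value].

    Assumed form for illustration: the scaled gradient components and the
    scaled voxel value are stacked into one group per voxel, then the
    per-voxel group norms are summed.
    """
    g = spatial_gradient(w)
    grouped = np.concatenate([(1 - rho) * g, rho * w[np.newaxis]], axis=0)
    return np.sqrt((grouped ** 2).sum(axis=0)).sum()


# Toy 2-d "weight map": a single active voxel on a background of zeros.
w = np.zeros((3, 3))
w[1, 1] = 1.0
print(tv_l1_penalty(w), sparse_variation_penalty(w))
```

In this sketch a voxel contributes nothing to the grouped penalty only when both its value and its local gradient are zero, which is the behaviour that favours contiguous regions on a zero background. By the triangle inequality, the grouped value never exceeds the TV-l1 value for the same `rho`.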

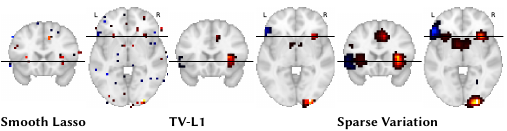

Weight vectors from estimating gain on the mixed gambles task for three sparse methods: Graphnet, TV-l1 and Sparse Variation. This inter-subject analysis shows broader regions of activation. Mean correlation scores on held-out data: Graphnet: 0.128, TV-l1: 0.147, Sparse Variation: 0.149. One can see that both TV-l1 and Sparse Variation regularizations yield more interpretable patterns than Graphnet.

See also our page on Total Variation minimization in statistical learning.