Overview:

New applications that let anyone broadcast high-quality video are becoming very popular, and ISPs may take the opportunity to offer new high-quality multicast services to their clients. Because of its centralized control plane, Software Defined Networking (SDN) enables the deployment of such a service in a flexible and bandwidth-efficient way. But deploying large-scale multicast services on SDN requires smart group membership management and a bandwidth reservation mechanism with QoS guarantees that neither wastes bandwidth nor impacts best-effort traffic too severely.

In this work, we propose:

- A scalable multicast group management mechanism based on a Network Function Virtualization (NFV) approach for Software Defined ISP networks to implement and deploy multicast services on the network edge,

- The Lazy Load balancing Multicast (L2BM) routing algorithm, which shares the core network capacity in a friendly way between guaranteed-bandwidth multicast traffic and best-effort traffic without requiring costly real-time monitoring of link utilization.

We have implemented the mechanism and the algorithm, and evaluated them both in a simulator and on a testbed. On the testbed, we validated the group management at the edge and L2BM in the core with an Open vSwitch based QoS framework. We used the simulator to evaluate the performance of L2BM with an exhaustive set of experiments on various realistic scenarios. The results show that L2BM outperforms other state-of-the-art algorithms by being less aggressive with best-effort traffic and accepting about 5-15% more guaranteed-bandwidth multicast join requests.

Scalable Multicast Group Management:

Traditional distributed multicast requires specialized routers capable of managing the group membership state needed by protocols like IGMP and PIM. These routers maintain multicast group membership state in hardware, so the number of multicast sessions a network can serve is limited by the routers' specifications. As the number of multicast sessions and the volume of multicast traffic grow, problems of scalability and upgrade cost arise. With the advent of SDN, many research proposals shift in-network multicast group membership state to the centralized controller of the network. This in turn poses a scalability issue for the centralized controller in flood scenarios, where a massive number of multicast stream receivers join the network and cause an IGMP flood towards the controller.

We implemented the Multicast Network Functions. MNF-H is a lightweight controller based on libfluid (available on GitHub) that is responsible for managing group membership for downstream ports. It uses PIM signals to notify MNF-N of its group memberships. The PoC code of MNF-H is available here on GitHub. We implemented MNF-N as a group membership management module in the Floodlight controller, along with the L2BM routing algorithm described next.
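The idea of keeping per-port membership at the edge and signalling upstream only when the overall membership of a group changes can be sketched as follows. This is a minimal illustration and not the MNF-H code: the class name `EdgeGroupTable`, the string signals `JOIN_UPSTREAM` and `PRUNE_UPSTREAM`, and the rule "signal on first join and last leave" are assumptions made for the example.

```python
from collections import defaultdict


class EdgeGroupTable:
    """Hypothetical edge-side membership table (not the actual MNF-H code)."""

    def __init__(self):
        # multicast group address -> set of downstream ports with receivers
        self.ports_by_group = defaultdict(set)

    def join(self, group, port):
        """Record a join; return an upstream signal only for the first member."""
        first_member = not self.ports_by_group[group]
        self.ports_by_group[group].add(port)
        return "JOIN_UPSTREAM" if first_member else None

    def leave(self, group, port):
        """Record a leave; return an upstream signal only when the group empties."""
        ports = self.ports_by_group.get(group)
        if not ports or port not in ports:
            return None  # unknown group or port: nothing to do
        ports.discard(port)
        if not ports:
            del self.ports_by_group[group]
            return "PRUNE_UPSTREAM"
        return None
```

With this structure, a burst of joins for the same group on many downstream ports produces a single upstream notification, which is the property that keeps a join flood away from the central controller.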

Implementation of L2BM algorithm using Floodlight controller

OpenFlow provides programmable Quality-of-Service support through a simple queuing mechanism: one or more queues can be attached to a switch port, and OpenFlow can map flows to a specific queue to provide QoS support. However, queue creation and configuration are outside the scope of the OpenFlow protocol. The OpenFlow controller uses the ofp_queue_get_config_request message to retrieve queue information for a specific port. OpenFlow switches reply with ofp_queue_get_config_reply messages, providing the controller with information on the queues mapped to the port.

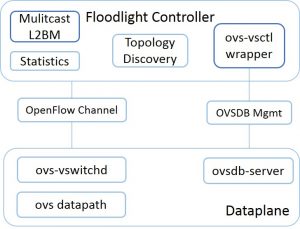

OpenFlow version 1.3 and later specify the Meter Table feature to program QoS operations. Software switches support traffic policing mechanisms such as rate-limiting, but such mechanisms throttle bandwidth consumption by dropping packets. So we opted for the traffic shaping functionality provided by the Linux traffic control system, which supports bandwidth guarantees without loss, and we use the ovs-vsctl utility to configure a queue in the Linux traffic control system for each guaranteed-bandwidth flow. We chose to integrate the queue configuration mechanism of OVS with the Floodlight OpenFlow controller, as shown in the figure above. In OVS, the ovs-vswitchd daemon controls all the software switch instances running on the host. The ovs-vsctl utility can configure queues on the ports of all the OVS switch instances controlled by ovs-vswitchd and its configuration database. To avoid writing another OVSDB plugin similar to ovs-vsctl for the controller platform, we implemented a wrapper around ovs-vsctl and added it as a module in the Floodlight controller. Specifically, we exposed two simple APIs to allocate and de-allocate link capacity on a switch port, namely createQueueOnPort and deleteQueueOnPort. This approach creates a dependency on the ovs-vsctl utility, but it avoids re-implementing the OVSDB management protocol on the controller platform.
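The wrapper idea can be illustrated with a short Python sketch. It is not the Floodlight module itself, which is written for the Java-based controller: the function names below merely mirror createQueueOnPort and deleteQueueOnPort, and their parameters are assumptions. The ovs-vsctl arguments follow the QoS and Queue syntax from the Open vSwitch documentation, using the linux-htb QoS type.

```python
import subprocess


def create_queue_on_port(port, queue_id, rate_bps, link_bps, dry_run=True):
    """Build (and optionally run) an ovs-vsctl command that attaches an HTB
    QoS record with one queue to `port`. Hypothetical Python analogue of
    createQueueOnPort; it replaces any QoS record already set on the port."""
    cmd = [
        "ovs-vsctl",
        "set", "port", port, "qos=@newqos",
        "--", "--id=@newqos", "create", "qos", "type=linux-htb",
        f"other-config:max-rate={link_bps}", f"queues:{queue_id}=@q",
        "--", "--id=@q", "create", "queue",
        # min-rate == max-rate reserves exactly rate_bps for the queue
        f"other-config:min-rate={rate_bps}",
        f"other-config:max-rate={rate_bps}",
    ]
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd


def delete_queue_on_port(port, dry_run=True):
    """Detach the QoS record from `port`. The QoS and Queue rows stay in the
    database until they are destroyed explicitly."""
    cmd = ["ovs-vsctl", "clear", "port", port, "qos"]
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd
```

With `dry_run=True` the functions only return the argument list, which is convenient for inspecting the command before running it on a host where OVS is installed. A per-flow implementation would add queues to an existing QoS record rather than replace it, which this sketch does not attempt.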

The source code of our implementation, at the specific commit of our version of the Floodlight controller, is available here together with the APIs.

The complete Floodlight controller can be downloaded using “git clone https://github.com/hksoni/SDN-Multicast.git”.

Simulator for L2BM algorithm

Why simulate?

The L2BM algorithm spreads guaranteed-bandwidth multicast traffic across the network without requiring costly real-time link measurements. It is designed to provide guaranteed-bandwidth multicast routing in the core or backbone networks of ISPs, for services carrying video traffic of heterogeneous quality, from standard to ultra-high definition. These networks usually contain links with very high capacities, such as 1, 10 or 100 Gbps, and the video services on these links may demand 1 to 100 Mbps of guaranteed bandwidth for a high-quality end-user experience. Hence, emulation-based experiments with L2BM on a testbed are constrained in such scenarios by the available physical resources, such as physical link bandwidth, compute cores and disk access bandwidth. A simulator makes it possible to study and compare the performance of the algorithm at these scales.

Traffic Generation:

The L2BM algorithm focuses on peak-hour and flood scenarios, where specific real-world events and prime-time shows can suddenly trigger a huge number of video streams from a geographically small part of the backbone network. This phenomenon creates traffic hotspots in the network. We call this the Concentrated Traffic scenario. Another real-world aspect of live video streaming is that end viewers may join and leave live streams frequently. This generates receiver Churn in the multicast session, so multicast algorithms should handle dynamic join and leave scenarios.

In the core network, NFVI-PoPs aggregate a large number of end viewers and manage the membership of NFV-based COs only. Hence, the multicast algorithm does not face heavy churn of receiver nodes (NFVI-PoPs) in the core, because a downstream network covering a huge population is very likely to contain at least one end viewer of the multicast session.

However, a multicast algorithm routing streams across NFV-based COs in the metro network may face more dynamic conditions.

We consider these two scenarios, along with traffic concentration, to generate the traffic workloads used to evaluate L2BM and compare it with the Nearest Node First (greedy heuristic) approach to the Dynamic Steiner Tree problem.
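The Nearest Node First baseline can be illustrated with a minimal sketch. It assumes an unweighted graph in which "nearest" means fewest hops, and it ignores link capacities and leave events; the function names and the data layout are hypothetical and this is not the simulator's code. On each join, the new receiver is attached to the closest node already in the multicast tree through a shortest path found by breadth-first search.

```python
from collections import deque


def nearest_tree_path(adj, tree_nodes, receiver):
    """BFS from `receiver` until a node of the current tree is reached.
    Returns the path [receiver, ..., tree_node], or None if unreachable."""
    parent = {receiver: None}
    queue = deque([receiver])
    while queue:
        node = queue.popleft()
        if node in tree_nodes:
            path = []
            while node is not None:  # walk back to the receiver
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nbr in adj[node]:
            if nbr not in parent:
                parent[nbr] = node
                queue.append(nbr)
    return None


def join(adj, tree_nodes, tree_edges, receiver):
    """Greedy join: graft `receiver` onto the tree via its nearest tree node."""
    path = nearest_tree_path(adj, tree_nodes, receiver)
    if path is None:
        return False
    for u, v in zip(path, path[1:]):
        tree_edges.add(frozenset((u, v)))
    tree_nodes.update(path)
    return True


# Toy topology: s - a - b - c, with d attached to a.
adj = {"s": ["a"], "a": ["s", "b", "d"], "b": ["a", "c"], "c": ["b"], "d": ["a"]}
tree_nodes, tree_edges = {"s"}, set()
join(adj, tree_nodes, tree_edges, "c")  # grafts the path c-b-a-s
join(adj, tree_nodes, tree_edges, "d")  # d reaches the tree at a in one hop
```

The second join reuses the branch created by the first one, which is the greedy behaviour that concentrates traffic on already-used links.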

The L2BM simulator, written in Python, is available on GitHub at GitHub-L2BM-Simulator.

We are also working on an IPython notebook-based interface for easy reproducibility of the results.

Experimentation and Evaluation Framework:

SDN-testbed for Experiments:

Testing our bandwidth allocation implementation with Open vSwitch and Floodlight requires a testbed capable of emulating QoS-oriented SDN experiments. Existing emulation tools like Mininet do not take physical resource constraints into account, so experiments can suffer from errors introduced by the testbed itself. We chose the DiG tool to automate the procedure of building target network topologies while respecting the physical resource constraints of the testbed. For the network topology, we chose INTERNET2-AL2S to represent an ISP network, with 39 nodes and 51 bidirectional edges. We then virtualized this topology using DiG on the Grid5000 network. DiG implements routers using Open vSwitch instances running with the processing power of two computing cores each, for seamless packet switching at link rate. As the grid network uses 1 Gbps links and INTERNET2-AL2S operates with 100 Gbps links, we had to scale down the link capacity to 100 Mbps in our experiments.

Virtualizing the Experimental INTERNET2-AL2S Network:

- DiG uses Docker containers to virtualize switches and hosts, using custom-built Fedora images with the required tools pre-installed. It uses the fc21-ovs-public Docker image, which has Open vSwitch installed, to implement the routers in the topology. Similarly, hosts are virtualized using the multicast-host Docker image.

- DiG requires the topology of INTERNET2-AL2S and of the grid nodes in the DOT graph description language, and a mapping of the nodes in the experimental network to the grid nodes. These files are available here.

- The internet2-al2s.dot file describes the INTERNET2-AL2S topology.

- The suno.dot file describes the grid nodes at the Sophia site, connected in a star topology.

- The mapping-al2s.txt file specifies the mapping of grid nodes to virtual INTERNET2-AL2S nodes, respecting the available CPU and bandwidth of the grid nodes.

- We virtualize the INTERNET2-AL2S network as specified in internet2-al2s.dot using DiG.

- Next, we execute the Floodlight controller integrated with our implementation of guaranteed-bandwidth allocation using the ovs-vsctl wrapper, available at this repository.
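For readers unfamiliar with DOT, the following sketch shows how an undirected topology of the kind described above can be written in that language. The helper `to_dot`, the node names and the three-link toy topology are made up for illustration. Any DiG-specific attributes that the real internet2-al2s.dot and suno.dot files carry are not shown here.

```python
def to_dot(name, edges):
    """Render an undirected graph in DOT; IDs are quoted so that names
    containing hyphens remain valid."""
    lines = [f'graph "{name}" {{']
    for u, v in edges:
        lines.append(f'    "{u}" -- "{v}";')
    lines.append("}")
    return "\n".join(lines)


# Hypothetical three-node ring, not the INTERNET2-AL2S topology.
toy_dot = to_dot("toy-ring", [("n1", "n2"), ("n2", "n3"), ("n3", "n1")])
print(toy_dot)
```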

Results:

We present the results of experiments run on networks with link capacities of 1, 10 and 100 Gbps. We used uniform link capacities within each network, because we assume that most core networks consist of uniform-capacity links interconnecting NFV-based COs and NFVI-PoPs.

The results are available alongside the simulator on GitHub: https://github.com/hksoni/L2BM-Simulator/tree/master/results