Yihong Xu defended his Ph.D. thesis on June 8th, 2022. Yihong is now a research scientist at Valeo.ai in Paris.

Yihong Xu was a Ph.D. student in the RobotLearn (previously Perception) team at INRIA Grenoble from September 2018, supervised by Dr. Xavier Alameda-Pineda and co-supervised by Dr. Radu Horaud. He received his engineer’s degree in computer vision from Télécom Bretagne, France (now IMT Atlantique) in 2018. With a mobility grant from the Department for Science and Technology of the French Embassy in Berlin (SST) and INRIA, he was a visiting Ph.D. student at the Dynamic Vision and Learning Group, Technical University of Munich, supervised by Dr. Aljosa Osep and Prof. Dr. Laura Leal-Taixé, conducting research on multiple-object tracking (MOT). Before that, he held a full-time position in 2018 as a computer vision engineer at MTLab, Meitu, Inc., China, working on face alignment and detection. He was also a research intern at the Technicolor Research Center in Rennes, studying video style transfer for AR applications.

During his Ph.D., he investigated training strategies and model structures for MOT: he proposed a novel supervised training framework for MOT that approximates the evaluation metrics in a differentiable way; he studied the use of transformer structures for MOT; and, with his co-authors, he proposed an unsupervised MOT training framework based on domain adaptation. In parallel, he worked on related sub-tasks and extensions such as unsupervised person re-identification, MOT with audio-visual data, and contrastive learning.

More details about him can be found on LinkedIn. His publication list can be found in publications and on Google Scholar. The code for his projects can be found on GitHub.

Contact: INRIA Grenoble Rhône-Alpes, 655 avenue de l’Europe, 38330 Montbonnot Saint-Martin, France

Email: yihong dot xu at inria dot fr

Projects

|

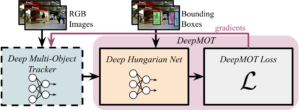

How To Train Your Deep Multi-Object Tracker

Y. Xu, A. Osep, Y. Ban, R. Horaud, L. Leal-Taixé, X. Alameda-Pineda. CVPR, 2020. 170+ citations, 470+ stars on GitHub. |

|

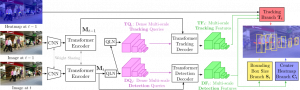

TransCenter: Transformers with Dense Representations for Multiple-Object Tracking

Y. Xu*, Y. Ban*, G. Delorme, C. Gan, D. Rus, X. Alameda-Pineda. TPAMI, 2022. 50+ citations, 80+ stars on GitHub. |

|

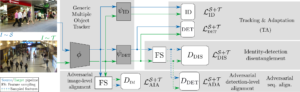

DAUMOT: Domain Adaptation for Unsupervised Multiple-Object Tracking

G. Delorme*, Y. Xu*, L. G. Camara, E. Ricci, R. Horaud, X. Alameda-Pineda. CVIU submission, 2022. 14+ stars on GitHub. [pdf] [page] |

|

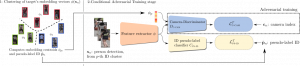

CANU-ReID: A Conditional Adversarial Network for Unsupervised person Re-IDentification

G. Delorme, Y. Xu, S. Lathuilière, R. Horaud, X. Alameda-Pineda. |

|

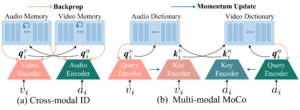

Active Contrastive Set Mining for Robust Audio-Visual Instance Discrimination

H. Xuan, Y. Xu, S. Chen, Z. Wu, J. Yang, Y. Yan, X. Alameda-Pineda |

List of Works During His Ph.D. (Google Scholar)

- Y. Xu, A. Osep, Y. Ban, R. Horaud, L. Leal-Taixé, X. Alameda-Pineda. “How To Train Your Deep Multi-Object Tracker”. CVPR, 2020. [pdf] [project]

- Y. Xu*, Y. Ban*, G. Delorme, C. Gan, D. Rus, X. Alameda-Pineda. “TransCenter: Transformers with Dense Representations for Multiple-Object Tracking”. TPAMI, 2022. [pdf] [project]

- G. Delorme*, Y. Xu*, L. G. Camara, E. Ricci, R. Horaud, X. Alameda-Pineda. “DAUMOT: Domain Adaptation for Unsupervised Multiple-Object Tracking”. CVIU submission, 2022. [pdf] [page]

- G. Delorme, Y. Xu, S. Lathuilière, R. Horaud, X. Alameda-Pineda. “CANU-ReID: A Conditional Adversarial Network for Unsupervised person Re-IDentification”. ICPR, 2021. [pdf] [project]

- H. Xuan, Y. Xu, S. Chen, Z. Wu, J. Yang, Y. Yan, X. Alameda-Pineda. “Active Contrastive Set Mining for Robust Audio-Visual Instance Discrimination”. IJCAI, 2022. [pdf]