
Abstract: In this paper, we describe experiments with techniques for locating foods and recognizing food states in cooking videos.

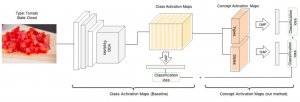

We describe the production of a new data set that provides annotated images for food types and food states. We compare the results of two techniques for detecting food types and food states, and then show that recognizing type and state with separate classifiers improves recognition results. We then use these classifiers to produce composite activation maps for food types. The results provide a promising first step towards constructing narratives for cooking actions.
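To make the separate-classifier idea concrete, here is a minimal sketch. It assumes each classifier emits one raw score (logit) per label. The label lists and function names are hypothetical and do not come from the paper. The sketch only shows that type and state are decided by two independent argmax decisions, so the output layers need |types| + |states| labels rather than the |types| × |states| labels a single joint (type, state) classifier would require.

```python
import math

# Hypothetical label sets for illustration; not the paper's actual classes.
FOOD_TYPES = ["tomato", "cucumber", "lettuce", "cheese"]
FOOD_STATES = ["whole", "cut", "mixed"]


def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def argmax(values):
    """Index of the largest value (the first one wins on ties)."""
    return max(range(len(values)), key=values.__getitem__)


def predict_separately(type_logits, state_logits):
    """Decide food type and food state with two independent classifiers."""
    type_probs = softmax(type_logits)
    state_probs = softmax(state_logits)
    return FOOD_TYPES[argmax(type_probs)], FOOD_STATES[argmax(state_probs)]


def output_sizes():
    """Outputs needed by separate heads vs. one joint (type, state) classifier."""
    separate = len(FOOD_TYPES) + len(FOOD_STATES)
    joint = len(FOOD_TYPES) * len(FOOD_STATES)
    return separate, joint


if __name__ == "__main__":
    print(predict_separately([0.2, 2.5, 0.1, -1.0], [0.0, 1.8, 0.3]))
    print(output_sizes())
```

With four types and three states, the separate heads need 7 outputs while a joint head needs 12; each separate head also sees more training examples per label. The networks and label sets used in the paper may differ.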

Architecture:

Dataset: Food-object segmentations for a subset of keyframes from the 50 Salads dataset are available here.

Evaluation Dataset: 340 keyframes from the 50 Salads dataset with instance-level food segmentation. Download here (img_n_annotations_v2.tar).

Paper: CEA18@ECCV18 [paper], [slides]

BibTeX:


Pervasive Interaction With Smart Objects and Environments