This year, I am a postdoctoral researcher with the ANIMA team (please visit my new page here).

I completed my PhD with the Pervasive team (Oct 2016 – Jun 2020). Under the supervision of Prof. James Crowley and Remi Ronfard, I worked on the recognition of human manipulation actions.

This thesis addresses the problem of recognition, modelling and description of human activities. We describe results on three problems: (1) the use of transfer learning for simultaneous visual recognition of objects and object states, (2) the recognition of manipulation actions from state transitions, and (3) the interpretation of a series of actions and states as events in a predefined story to construct a narrative description.

Internship Proposals:

- Video narration:

- Key-frame proposals for video understanding:

This project is a continuation of last year’s work funded by NeoVision on key-frame selection for action recognition.

- Action recognition using state-transformations:

Action recognition has been evolving very rapidly, and many different approaches have been proposed to address it.

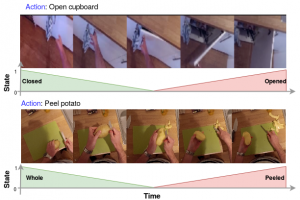

In this project, we are interested in modeling actions as the cause of object-state transformations. Object states can be more apparent from still images than some actions.

Most techniques model actions directly from images. We propose to take one of these state-of-the-art techniques and adapt it to model actions as state transformations.
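As a rough illustration of the idea, the toy sketch below reads a sequence of per-frame object-state labels and reports an action wherever the state changes. It is not the method used in the project: the state names, the `TRANSITION_TO_ACTION` table and the `actions_from_states` helper are all hypothetical, and in practice the per-frame states would come from a learned image model rather than a hand-written list.

```python
from typing import Dict, List, Tuple

# Hypothetical lookup table: (state before, state after) -> action that causes it.
TRANSITION_TO_ACTION: Dict[Tuple[str, str], str] = {
    ("whole", "sliced"): "cut",
    ("raw", "cooked"): "cook",
    ("closed", "open"): "open",
}


def actions_from_states(states: List[str]) -> List[Tuple[int, str]]:
    """Return (frame index, action) for every change of state in the sequence."""
    actions = []
    for i in range(1, len(states)):
        before, after = states[i - 1], states[i]
        if before != after:
            # Transitions missing from the table are reported as "unknown".
            action = TRANSITION_TO_ACTION.get((before, after), "unknown")
            actions.append((i, action))
    return actions


# Assumed per-frame state predictions for a single object.
frame_states = ["whole", "whole", "sliced", "sliced"]
print(actions_from_states(frame_states))  # [(2, 'cut')]
```

The point of the sketch is only that the action label is derived from the pair of states around a change, not from the appearance of the motion itself.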

This project aims to participate in the EPIC-Kitchens action recognition challenge. It requires skills in deep learning, and in PyTorch in particular.

Publications:

- Aboubakr, Nachwa, James L. Crowley, and Remi Ronfard. “Recognizing Manipulation Actions from State-Transformations.” arXiv preprint arXiv:1906.05147 (2019). Accepted for presentation at EPIC@CVPR2019. [Project Page]

- Nachwa Aboubakr, Rémi Ronfard, James Crowley. Recognition and Localization of Food in Cooking Videos. CEA/MADiMa’18, Jul 2018, Stockholm, Sweden. 2018, 〈10.1145/3230519.3230590〉. Ground-truth annotation of key-frames in 50 salads dataset. [Project: Project page] [Download evaluation set: annotation_json]

- Nachwa Abou Bakr, James Crowley. Histogram of Oriented Depth Gradients for Action Recognition. ORASIS 2017, Jun 2017, Colleville-sur-Mer, France. pp.1-2, 2017, 〈https://orasis2017.sciencesconf.org/〉. 〈hal-01694733〉