The paper Convexity in ReLU Neural Networks: beyond ICNNs? by Anne Gagneux, Mathurin Massias, Emmanuel Soubies and Rémi Gribonval was accepted for publication in the Journal of Mathematical Imaging and Vision. https://arxiv.org/abs/2501.03017

In this paper, we develop a toolkit to characterize neural networks that implement convex functions, which play a prominent role in Optimal Transport and in Plug-and-Play methods for imaging. We show that the standard architecture for implementing such functions, the so-called Input Convex Neural Networks (ICNNs), is too restrictive: there exist many networks implementing convex functions that are not ICNNs. We even show that some convex functions implemented by a given network cannot be implemented by an ICNN with the same architecture.
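To make the ICNN sign constraint concrete, here is a minimal Python sketch (our own toy illustration, not code from the paper). A one-hidden-layer network f(x) = Σ_j a_j relu(w_j x + b_j) is convex by construction when all output weights a_j are non-negative, which is what the ICNN constraint reduces to in this simplest case. The hypothetical network 2·relu(x) - relu(-x) has a negative output weight, yet it is convex (slope 1 for x < 0, slope 2 for x > 0). The helper `looks_convex_on_grid` is only a numerical sanity check on second differences, not a proof of convexity; all function names are ours.

```python
def relu(t: float) -> float:
    return max(t, 0.0)


def icnn_one_hidden(x: float, w: list[float], b: list[float], a: list[float]) -> float:
    """Hypothetical one-hidden-layer ICNN-style network f(x) = sum_j a_j * relu(w_j * x + b_j).

    Non-negative output weights a_j make f a non-negative sum of convex functions,
    hence convex; the first-layer weights w_j, b_j are unconstrained.
    """
    if any(aj < 0 for aj in a):
        raise ValueError("ICNN constraint violated: output weights must be non-negative")
    return sum(aj * relu(wj * x + bj) for wj, bj, aj in zip(w, b, a))


def net_with_negative_weight(x: float) -> float:
    # 2*relu(x) - relu(-x): slope 1 for x < 0 and slope 2 for x > 0, so it is convex
    # even though the output weight -1 violates the ICNN sign constraint.
    return 2.0 * relu(x) - relu(-x)


def clamp_net(x: float) -> float:
    # relu(x) - relu(x - 1): slopes 0, 1, 0, which is NOT convex.
    return relu(x) - relu(x - 1.0)


def looks_convex_on_grid(f, lo: float, hi: float, n: int, tol: float = 1e-9) -> bool:
    """Sanity check, not a proof: every second difference on a uniform grid is >= -tol."""
    xs = [lo + (hi - lo) * i / n for i in range(n + 1)]
    ys = [f(x) for x in xs]
    return all(ys[i - 1] - 2.0 * ys[i] + ys[i + 1] >= -tol for i in range(1, n))


def abs_icnn(x: float) -> float:
    # |x| = relu(x) + relu(-x), a valid ICNN instance.
    return icnn_one_hidden(x, w=[1.0, -1.0], b=[0.0, 0.0], a=[1.0, 1.0])


if __name__ == "__main__":
    print(looks_convex_on_grid(abs_icnn, -3.0, 3.0, 60))                  # True
    print(looks_convex_on_grid(net_with_negative_weight, -3.0, 3.0, 60))  # True
    print(looks_convex_on_grid(clamp_net, -3.0, 3.0, 60))                 # False
```

This toy function can also be written as x + relu(x), which fits the ICNN template once an unconstrained input skip connection is allowed; the paper's stronger result concerns convex functions that no ICNN with the same architecture can implement.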

Jun 20

New paper on convex neural networks accepted at JMIV!

Jun 15

SHARP+Foundry workshop @COLT 2025 in Lyon, June 30th 2025

On June 30th 2025, at ENS de Lyon, as part of the COLT 2025 Conference on Learning Theory, the SHARP and Foundry projects of the PEPR IA are co-organizing a one-day workshop on “Frugal and Robust Foundations for Machine Learning – Occam’s razor at the age of LLM’s”. The workshop will be dedicated to recent advances in frugal and robust learning. Details here.

Jun 15

3 papers @ICML2025, with an oral

The team is happy to announce three accepted works at ICML 2025:

- Selected for an oral presentation: Sibylle Marcotte, Rémi Gribonval, Gabriel Peyré. Transformative or Conservative? Conservation laws for ResNets and Transformers

- Antoine Gonon, Nicolas Brisebarre, Elisa Riccietti, Rémi Gribonval. A rescaling-invariant Lipschitz bound based on path-metrics for modern ReLU network parameterizations

- Antoine Gonon, Léon Zheng, Pascal Carrivain, Quoc-Tung Le. Fast inference with Kronecker-sparse matrices

Jun 15

SSFAM PhD Prize – honorable mention for Antoine Gonon

Antoine Gonon, former Ph.D. student in the Ockham and ARIC teams, is among the laureates of the Ph.D. award of the SSFAM (French-speaking Machine Learning Society) for his thesis on “Harnessing symmetries for modern deep learning challenges: a path-lifting perspective”. Congratulations to Antoine!

Apr 22

SenHub IA Summer school on generative models @Dakar

Mathurin Massias, Quentin Bertrand and Rémi Emonet (INRIA MALICE) had the pleasure of giving a two-day class on generative artificial intelligence at the SenHub IA summer school, held in Dakar from April 7th to April 11th.

Our material is available on GitHub: https://github.com/QB3/SenHubIA2025

Mar 13

2 papers and one blog post accepted at ICLR 2025!

The team is happy to announce two accepted works at ICLR 2025:

– Can Pouliquen, Mathurin Massias, Titouan Vayer: Schur’s Positive-Definite Network: Deep Learning in the SPD cone with structure, https://arxiv.org/abs/2406.09023

– Ségolène Martin, Anne Gagneux, Paul Hagemann, Gabriele Steidl: PnP-Flow: Plug-and-Play Image Restoration with Flow Matching, https://arxiv.org/abs/2410.02423

In addition, the blog post on Flow Matching models written by Anne Gagneux, Ségolène Martin, Rémi Emonet, Quentin Bertrand and Mathurin Massias was accepted at the blog post track. It was very well received when shared on social networks. A preprint version is available at https://dl.heeere.com/cfm/

Apr 09

Best poster prize for Anne Gagneux @SMAI MODE

Anne Gagneux, PhD student in the team, won the best poster award at the SMAI MODE days 2024, for her M2 internship on “Automatic and unbiased coefficients clustering with non-convex SLOPE”. The poster is available here.

Congratulations Anne!

Mar 19

One paper accepted at SIAM Journal on Imaging Sciences

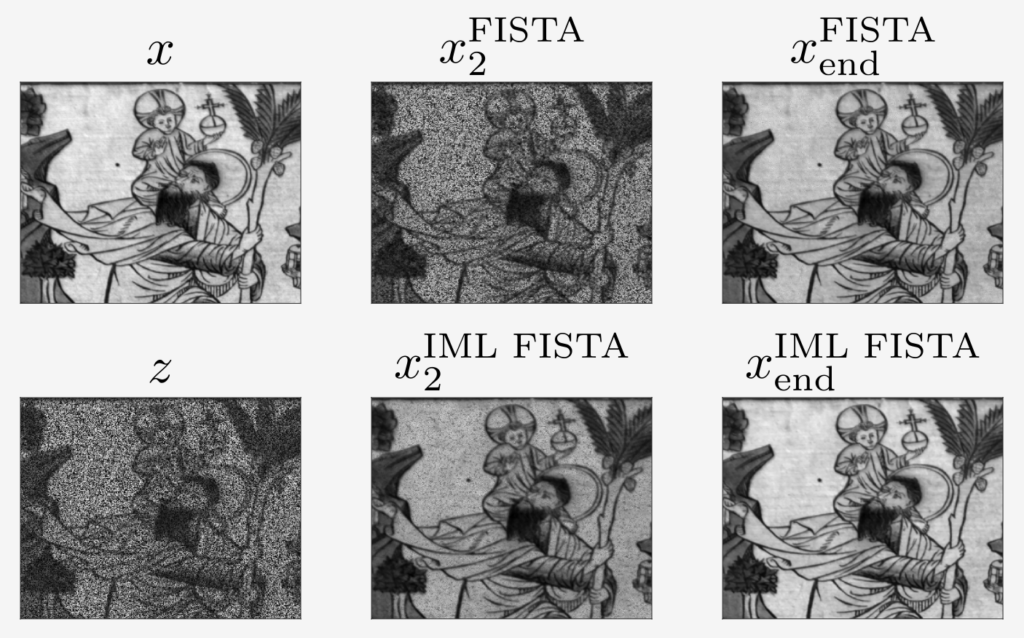

The paper IML FISTA: A Multilevel Framework for Inexact and Inertial Forward-Backward. Application to Image Restoration, by Guillaume Lauga, Elisa Riccietti, Nelly Pustelnik and Paulo Gonçalves, was accepted for publication at SIAM Journal on Imaging Sciences!

This work presents a multilevel framework for inertial and inexact proximal algorithms, which encompasses multilevel versions of classical algorithms such as forward-backward and FISTA.

The methods are supported by strong theoretical guarantees: in the convex case, we prove both a convergence rate and the convergence of the iterates to a minimizer, the latter being an important result for ill-posed problems.

Several image restoration problems are considered (image deblurring, hyperspectral image reconstruction, etc.), on which the proposed multilevel algorithm converges much faster than the one-level (i.e., standard) version of the method.

Paper: https://inria.hal.science/hal-04075814
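For reference, the standard one-level FISTA that the multilevel framework builds on can be sketched in a few lines of Python for a small lasso problem, min_x ½‖Ax - b‖² + λ‖x‖₁. This is the textbook algorithm (gradient step, soft-thresholding prox, inertial extrapolation), not the IML FISTA method of the paper; the toy problem, the function names and the step size 1/‖A‖_F² (a safe upper bound on the Lipschitz constant ‖A‖₂² of the gradient) are our assumptions. Setting `accelerate=False` gives the plain forward-backward (ISTA) iteration.

```python
import math

Matrix = list[list[float]]
Vector = list[float]


def matvec(A: Matrix, x: Vector) -> Vector:
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in A]


def rmatvec(A: Matrix, r: Vector) -> Vector:
    # Computes A^T r.
    return [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(len(A[0]))]


def soft_threshold(v: Vector, tau: float) -> Vector:
    # Proximal operator of tau * ||.||_1 (the "backward" step).
    return [math.copysign(max(abs(vi) - tau, 0.0), vi) for vi in v]


def lasso_fista(A: Matrix, b: Vector, lam: float, n_iter: int = 5000,
                accelerate: bool = True) -> Vector:
    """One-level FISTA for min_x 0.5 * ||Ax - b||^2 + lam * ||x||_1 (ISTA if accelerate=False)."""
    lipschitz_bound = sum(aij * aij for row in A for aij in row)  # ||A||_F^2 >= ||A||_2^2
    step = 1.0 / lipschitz_bound
    x = [0.0] * len(A[0])
    y = x[:]
    t = 1.0
    for _ in range(n_iter):
        # Forward (gradient) step on the smooth term, taken at the extrapolated point y.
        residual = [ri - bi for ri, bi in zip(matvec(A, y), b)]
        grad = rmatvec(A, residual)
        x_new = soft_threshold([yi - step * gi for yi, gi in zip(y, grad)], step * lam)
        if accelerate:
            # Inertial step: y = x_k + ((t_k - 1) / t_{k+1}) * (x_k - x_{k-1}).
            t_new = (1.0 + math.sqrt(1.0 + 4.0 * t * t)) / 2.0
            y = [xn + ((t - 1.0) / t_new) * (xn - xo) for xn, xo in zip(x_new, x)]
            t = t_new
        else:
            y = x_new
        x = x_new
    return x


if __name__ == "__main__":
    A = [[2.0, 0.5], [0.5, 1.0]]
    b = [1.0, -1.0]
    print(lasso_fista(A, b, lam=0.1))
    print(lasso_fista(A, b, lam=0.1, accelerate=False))
```

On this tiny, well-conditioned example both variants reach the same minimizer; the multilevel versions studied in the paper target large imaging problems where the one-level iteration is slow.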

Oct 03

Three papers accepted at NeurIPS 2023

Three papers will be presented this year at NeurIPS in New Orleans.

- Abide by the Law and Follow the Flow: Conservation Laws for Gradient Flows with Sibylle Marcotte, Rémi Gribonval and Gabriel Peyré. Oral presentation.

The purpose of this article is to present the definition and basic properties of “conservation laws”, which are maximal sets of independent quantities conserved during gradient flows of a given model, and to explain how to find the exact number of these quantities by performing finite-dimensional algebraic manipulations on the Lie algebra generated by the Jacobian of the model.

Preprint: https://inria.hal.science/hal-04150576
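A classical toy instance of a conservation law (our own illustration, much simpler than the models treated in the paper): for the scalar two-layer linear model f(x) = u·v·x trained with the square loss, the quantity h(u, v) = u² - v² is conserved along the gradient flow, since d/dt (u² - v²) = -2 r x (u v - v u) = 0 with residual r = u v x - y. The Python sketch below discretizes the flow with explicit Euler steps; a short computation shows that each step multiplies h by (1 - step² r² x²), so the drift is only second order in the step size. The function name and parameter values are hypothetical.

```python
def gradient_flow_two_layer(u: float, v: float, x: float, y: float,
                            step: float = 1e-3, n_steps: int = 20000):
    """Explicit-Euler discretization of the gradient flow of L(u, v) = 0.5 * (u * v * x - y) ** 2.

    Returns the final parameters and the history of h(u, v) = u**2 - v**2.
    """
    history = [u * u - v * v]
    for _ in range(n_steps):
        r = u * v * x - y                      # residual of the model
        grad_u, grad_v = r * v * x, r * u * x  # dL/du and dL/dv
        u, v = u - step * grad_u, v - step * grad_v  # simultaneous update
        history.append(u * u - v * v)
    return u, v, history


if __name__ == "__main__":
    u, v, h = gradient_flow_two_layer(1.0, 0.5, x=1.0, y=2.0)
    print(u * v)             # close to the target y / x = 2
    print(max(h) - min(h))   # small drift of the conserved quantity u^2 - v^2
```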

- Does a sparse ReLU network training problem always admit an optimum? with Quoc-Tung Le, Elisa Riccietti and Rémi Gribonval.

This work shows that optimization problems involving deep networks with certain sparsity patterns do not always have optimal parameters, and that optimization algorithms may then diverge. Via a new topological relation between sparse ReLU neural networks and their linear counterparts, this article derives an algorithm to check whether a given sparsity pattern suffers from this issue.

Preprint: https://inria.hal.science/hal-04108849

- SNEkhorn: Dimension Reduction with Symmetric Entropic Affinities with Hugues Van Assel, Titouan Vayer, Rémi Flamary and Nicolas Courty.

This work uncovers a novel characterization of entropic affinities as an optimal transport problem, allowing a natural symmetrization that can be computed efficiently using dual ascent. The resulting affinity matrix benefits from symmetric doubly stochastic normalization in terms of clustering performance, while also effectively controlling the entropy of each row, which makes it particularly robust to varying noise levels. Building on this, the paper presents a new dimension reduction (DR) algorithm, SNEkhorn, that leverages this new affinity matrix.

Preprint: https://hal.science/hal-04103326