On 20 December 2023, starting at 2 pm, we will meet in room D308 at Université Paris Dauphine for a full afternoon during which we will have the pleasure of hearing Daniele de Gennaro (Dauphine), Flavien Léger (Inria), Idriss Mazari (Dauphine), Raphaël Prunier (Dauphine), Clément Royer (Dauphine) and Adrien Vacher (Inria).

Daniele de Gennaro (Dauphine)

A stability inequality and asymptotic of geometric flows

In this talk we discuss recent results on the dynamical stability of two volume-preserving geometric flows on the flat torus: surface diffusion (SD) and volume-preserving mean curvature flow (MCF). Motivated by the (formal) gradient-flow structures of SD and MCF, we show that if the initial set is sufficiently close (in a suitable norm) to a set that is stable for these flows, then the flows exist for all positive times and converge to that stable set, up to rigid motions. We work in the periodic setting simply because it offers a wide variety of possible stable sets. The main technical tool is a new stability inequality involving the curvature of the evolving sets.
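The talk concerns the analysis of these flows rather than their numerical approximation. Purely as an illustration (this is not the speaker's method), volume-preserving MCF on the flat torus can be approximated by a volume-preserving variant of the Merriman-Bence-Osher thresholding scheme. The scheme diffuses the indicator function of the set for a short time with the periodic heat kernel, then keeps the grid cells with the largest diffused values so that the discrete area stays fixed. The grid size, time step, initial ellipse and function names below are assumptions chosen for this sketch.

```python
import numpy as np

def heat_smooth(chi, dt):
    """Apply the periodic heat semigroup exp(dt * Laplacian) on the unit torus via FFT (square grid assumed)."""
    n = chi.shape[0]
    k = 2.0 * np.pi * np.fft.fftfreq(n, d=1.0 / n)  # angular wavenumbers 2*pi*(integers)
    kx, ky = np.meshgrid(k, k, indexing="ij")
    return np.real(np.fft.ifft2(np.fft.fft2(chi) * np.exp(-(kx**2 + ky**2) * dt)))

def volume_preserving_mbo_step(chi, dt):
    """Diffuse the indicator, then keep exactly as many cells as before (largest values first)."""
    u = heat_smooth(chi.astype(float), dt)
    n_inside = int(chi.sum())
    order = np.argsort(u, axis=None)[::-1]  # flattened indices, largest diffused value first
    new = np.zeros(chi.size, dtype=bool)
    new[order[:n_inside]] = True
    return new.reshape(chi.shape)

# Hypothetical test case: an elongated ellipse on a 128 x 128 periodic grid.
n = 128
x = (np.arange(n) + 0.5) / n
X, Y = np.meshgrid(x, x, indexing="ij")
chi = ((X - 0.5) / 0.3) ** 2 + ((Y - 0.5) / 0.12) ** 2 < 1.0
vol0 = int(chi.sum())
for _ in range(50):
    chi = volume_preserving_mbo_step(chi, dt=5e-4)  # sqrt(dt) spans a few grid cells
print(vol0, int(chi.sum()))  # the cell count (discrete area) is unchanged
```

Because each step selects exactly `vol0` cells, the discrete area is preserved by construction. The time step is taken large enough that the diffusion length covers a few cells, since thresholding schemes tend to get stuck on the grid otherwise.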

Flavien Léger (Inria)

Z-mappings for mathematicians


Idriss Mazari (Dauphine)

Quantitative inequalities in optimal control theory and convergence of thresholding schemes

We will give an overview of recent progress in the study of quantitative inequalities for optimal control problems. In particular, we will show how they can be used to obtain convergence results for thresholding schemes, which play an important role in the numerical simulation of optimal control problems. This is joint work with A. Chambolle and Y. Privat.
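To make the notion of a thresholding scheme concrete, here is a minimal sketch on a toy problem chosen for this illustration (it is not taken from the talk). The goal is to maximize J(m) = (1/2) ∫ m u_m dx over densities 0 ≤ m ≤ 1 of fixed mass on (0, 1), where -u_m'' = m with u_m(0) = u_m(1) = 0. After discretization, J is a convex quadratic in m with gradient h u_m. Replacing m by the indicator of the cells where u_m is largest therefore maximizes the linearization of J under the mass constraint. Convexity then gives J(m_new) ≥ J(m) + h u_m · (m_new - m) ≥ J(m), so in this discrete setting no step can decrease J. The grid size, initial guess and function names are assumptions.

```python
import numpy as np

def solve_state(m, h):
    """Solve -u'' = m on (0, 1), u(0) = u(1) = 0, with centred finite differences."""
    n = m.size
    A = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2
    return np.linalg.solve(A, m)

def objective(m, h):
    """Discrete version of J(m) = (1/2) * integral of m * u_m."""
    return 0.5 * h * m @ solve_state(m, h)

def thresholding(m, h, n_iter=20):
    """Iterate m <- indicator of the k cells where u_m is largest (k = mass of m, kept fixed)."""
    k = int(round(m.sum()))
    history = [objective(m, h)]
    for _ in range(n_iter):
        u = solve_state(m, h)             # h * u_m is the gradient of the discrete J at m
        m = np.zeros_like(m)
        m[np.argsort(u)[::-1][:k]] = 1.0  # maximize the linearization of J under the mass constraint
        history.append(objective(m, h))
    return m, history

N = 199                     # interior grid points (an arbitrary choice)
h = 1.0 / (N + 1)
m0 = np.zeros(N)
m0[:60] = 1.0               # start with all the resource packed near x = 0
m_final, J_hist = thresholding(m0, h)
print(J_hist[0], J_hist[-1])
```

Each iteration costs only one state solve and a sort, which is what makes thresholding attractive in practice. Convergence guarantees for realistic problems are the subject of the talk and are not reproduced here.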

Raphaël Prunier (Dauphine)

Clément Royer (Dauphine)

A Newton-type method for strict saddle functions on manifolds

Nonconvex optimization problems arise in many fields of computational mathematics and data science, and are typically viewed as more challenging than convex formulations. In recent years, a number of nonconvex optimization problems have been identified as “benignly” nonconvex, in the sense that they are significantly easier to tackle than generic nonconvex problems. Examples of such problems include nonconvex reformulations of low-rank matrix optimization problems as well as certain formulations of phase retrieval. Interestingly, benign nonconvexity is also common for optimization problems with invariants or symmetries, as is often the case in manifold optimization. In this talk, we will focus on a specific form of benign nonconvexity called the strict saddle property, which characterizes a class of nonconvex optimization problems over Riemannian manifolds. After reviewing this property and providing examples, we will describe a Newton-type framework dedicated to such problems. Our approach can be implemented in an exact or inexact fashion, thanks to recent advances in analyzing Krylov methods in the nonconvex setting. We will present global convergence rates for our algorithm, and discuss how those rates improve over existing analyses for generic nonconvex problems.
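A classical example of a strict saddle problem on a manifold is the minimization of the Rayleigh quotient f(x) = xᵀAx over the unit sphere. When A has distinct eigenvalues, every critical point other than the global minimizers has a direction of strictly negative curvature. The sketch below is a simplified, hypothetical Newton-type iteration in that spirit, not the speaker's algorithm. It takes a negative-curvature step when the Riemannian Hessian has an eigenvalue below -eps and a regularized Newton step otherwise, with a backtracking line search based on a cubic decrease test. It uses dense eigendecompositions instead of the Krylov (possibly inexact) solves mentioned in the abstract. The matrix A, the parameters and the function names are assumptions.

```python
import numpy as np

# Toy instance (an assumption for illustration): distinct eigenvalues, so the
# only minimizers on the sphere are +-e1 and every other critical point has
# a direction of strictly negative curvature.
A = np.diag([1.0, 2.0, 3.0, 4.0])

def f(x):
    return x @ A @ x

def tangent_basis(x):
    """Orthonormal basis of the tangent space {v : x . v = 0} of the unit sphere at x."""
    U, _, _ = np.linalg.svd(x[:, None])  # the first column of U is +-x
    return U[:, 1:]

def retract(y):
    """Metric-projection retraction onto the unit sphere."""
    return y / np.linalg.norm(y)

def newton_negative_curvature(x, eps=1e-3, tol=1e-8, max_iter=50):
    for _ in range(max_iter):
        B = tangent_basis(x)
        g = 2.0 * B.T @ (A @ x)  # Riemannian gradient, in tangent coordinates
        H = 2.0 * B.T @ A @ B - 2.0 * f(x) * np.eye(B.shape[1])  # Riemannian Hessian, same coordinates
        lam, V = np.linalg.eigh(H)
        if np.linalg.norm(g) <= tol and lam[0] >= -eps:
            break  # approximate second-order stationary point
        if lam[0] < -eps:
            v = V[:, 0]
            s = (-1.0 if g @ v > 0 else 1.0) * abs(lam[0]) * v  # negative-curvature step
        else:
            s = -np.linalg.solve(H + 2.0 * eps * np.eye(len(g)), g)  # regularized Newton step
        d = B @ s
        t = 1.0
        # Backtrack until a cubic sufficient-decrease condition holds.
        while t > 1e-12 and f(retract(x + t * d)) >= f(x) - 1e-4 / 6 * t**3 * np.linalg.norm(d) ** 3:
            t *= 0.5
        x = retract(x + t * d)
    return x

x_final = newton_negative_curvature(np.array([0.0, 1.0, 0.0, 0.0]))  # start exactly at a saddle
print(x_final, f(x_final))
```

The starting point e2 is an eigenvector where the Riemannian gradient vanishes, so a plain gradient or Newton step would not move. The negative-curvature step is what lets the iteration escape the saddle and reach a minimizer ±e1.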

Adrien Vacher (Inria)

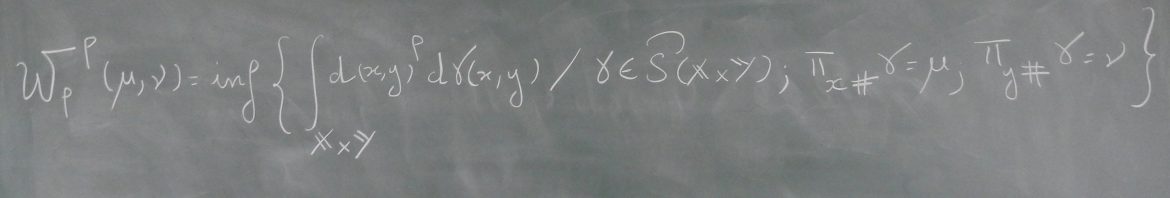

Closing the statistical and computational gap in smooth quadratic OT with kernel SoS

Over the past few years, numerous estimators have been proposed for the quadratic optimal transport (OT) distance and maps. However, these estimators either can be computed in polynomial time with respect to the number of samples but suffer from the curse of dimensionality, or, under suitable smoothness assumptions, achieve dimension-free statistical rates but cannot be computed numerically. After proving that the cost constraint in smooth quadratic OT can be written as a finite sum of squares of smooth functions, we use the recent machinery of kernel sums of squares (SoS) to close this statistical-computational gap: we design an estimator of smooth quadratic OT that achieves dimension-free statistical rates and can be computed in polynomial time. Finally, after proving a new stability result for the semi-dual formulation of OT, we show that our estimator recovers minimax rates for the OT map estimation problem.
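For context, the polynomial-time side of this gap can be illustrated by the classical plug-in estimator, which computes the exact quadratic OT cost between the two empirical measures. This sketch is not the kernel SoS estimator from the talk. It assumes two samples of equal size n with uniform weights, in which case an optimal coupling can be taken to be a permutation. The problem then reduces to an assignment problem, solved here by a hand-written Hungarian algorithm in O(n^3) time. The function names and the random 2-D samples are illustrative choices. The statistical error of this estimator is known to deteriorate as the dimension grows, which is the curse of dimensionality referred to above.

```python
import random

def hungarian(cost):
    """Minimum-cost perfect matching for a square cost matrix (Hungarian algorithm, O(n^3)).

    Returns match, where row i is assigned to column match[i].
    """
    n = len(cost)
    INF = float("inf")
    u = [0.0] * (n + 1)   # row potentials (1-indexed)
    v = [0.0] * (n + 1)   # column potentials (index 0 is a sentinel column)
    p = [0] * (n + 1)     # p[j] = row currently matched to column j (0 = none)
    way = [0] * (n + 1)   # predecessor columns along the augmenting path
    for i in range(1, n + 1):
        p[0] = i
        j0 = 0
        minv = [INF] * (n + 1)
        used = [False] * (n + 1)
        while True:
            used[j0] = True
            i0, delta, j1 = p[j0], INF, 0
            for j in range(1, n + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            for j in range(n + 1):
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:
                break
        while j0:  # augment along the alternating path
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1
    match = [0] * n
    for j in range(1, n + 1):
        match[p[j] - 1] = j - 1
    return match

def empirical_w2_squared(xs, ys):
    """Plug-in estimate of W_2^2 between the uniform empirical measures on xs and ys (same size)."""
    n = len(xs)
    cost = [[sum((a - b) ** 2 for a, b in zip(x, y)) for y in ys] for x in xs]
    match = hungarian(cost)
    return sum(cost[i][match[i]] for i in range(n)) / n

random.seed(0)
xs = [[random.random(), random.random()] for _ in range(50)]  # hypothetical 2-D samples
ys = [[random.random(), random.random()] for _ in range(50)]
print(empirical_w2_squared(xs, ys))
```

Two sanity checks follow from known facts. In one dimension, the optimal matching pairs sorted samples. For a pure translation by c, the optimal cost is |c|^2.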