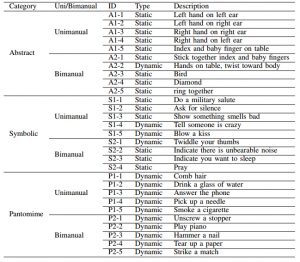

We have collected a new, challenging RGB-D upper-body gesture dataset recorded with a Kinect v2. The dataset is unique in that it targets the Praxis test; however, it can be used to evaluate any other gesture recognition method. It consists of gestures selected for the Praxis test, of two types: dynamic (14 gestures) and static (15 gestures). In the figure below, the dynamic gestures are marked with red arrows showing their direction of motion. In another taxonomy, the gestures are divided into Abstract, Symbolic and Pantomime gestures (with IDs starting with “A”, “S” and “P”, respectively).

The list of gestures, with their assigned IDs and a short description of each, is shown in the figure below.
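Because the first letter of each gesture ID encodes its Abstract/Symbolic/Pantomime class, the class can be recovered programmatically when working with the annotations. This is a minimal sketch that assumes IDs are strings such as "S3"; the helper name and the example IDs are hypothetical, and only the letter-to-class mapping comes from the description above.

```python
# Letter-to-class mapping from the dataset description:
# "A" = Abstract, "S" = Symbolic, "P" = Pantomime.
# The ID format after the first letter (e.g. "S3") is an assumption.
CATEGORY_BY_PREFIX = {"A": "Abstract", "S": "Symbolic", "P": "Pantomime"}


def gesture_category(gesture_id: str) -> str:
    """Return the taxonomy class encoded in the first letter of a gesture ID (hypothetical helper)."""
    if not gesture_id:
        raise ValueError("empty gesture ID")
    prefix = gesture_id[0].upper()
    try:
        return CATEGORY_BY_PREFIX[prefix]
    except KeyError:
        raise ValueError(f"unknown gesture prefix in {gesture_id!r}") from None


if __name__ == "__main__":
    for gid in ["A1", "S3", "P10"]:  # hypothetical IDs for illustration
        print(gid, "->", gesture_category(gid))
```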

Each video in the dataset contains all 29 gestures, each repeated 2 to 3 times depending on the subject. If the subject performs a gesture correctly, the test administrator proceeds to the next gesture; otherwise, the gesture is repeated 1 to 2 more times. Using the Kinect v2, we recorded the videos at a resolution of 960×540 for RGB and 512×424 for depth, together with the tracked human skeletons. Each subject's session was recorded continuously. The dataset has a total length of about 830 minutes (12.7 minutes per subject on average). We asked 64 subjects to perform the gestures in the gesture set: 4 clinicians and 60 patients. Among the patients, 29 were elderly people with normal cognitive function, 2 had amnestic mild cognitive impairment (MCI), 7 unspecified MCI, 2 vascular dementia, 10 mixed dementia, 6 Alzheimer's disease, 1 posterior cortical atrophy and 1 corticobasal degeneration. There are also 2 patients with severe cognitive impairment (SCI).
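As a quick consistency check, the per-diagnosis counts listed above add up to the stated 60 patients and, together with the 4 clinicians, to 64 subjects. The numbers in this snippet are transcribed directly from the description; the dictionary layout is only an illustration.

```python
# Subject composition, transcribed from the dataset description.
patients = {
    "elderly, normal cognitive function": 29,
    "amnestic MCI": 2,
    "unspecified MCI": 7,
    "vascular dementia": 2,
    "mixed dementia": 10,
    "Alzheimer's disease": 6,
    "posterior cortical atrophy": 1,
    "corticobasal degeneration": 1,
    "severe cognitive impairment (SCI)": 2,
}
clinicians = 4

n_patients = sum(patients.values())
n_subjects = n_patients + clinicians
print(f"patients: {n_patients}, clinicians: {clinicians}, total: {n_subjects}")
# patients: 60, clinicians: 4, total: 64
```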

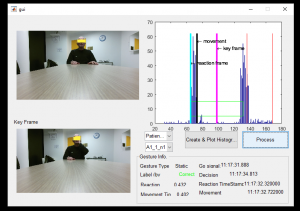

All videos were recorded in an office environment with the camera at a fixed position, while subjects sat behind a table so that only their upper body was visible. The dataset contains 29 fully annotated gesture types. All gestures were recorded in a fixed order, though the number of repetitions of each gesture can differ. There is no time limit for any gesture, so participants can finish each performance naturally. Laterality matters for some of the gestures: if one of these gestures is performed with the opposite hand, the clinician labels it “incorrect”. A 3D animated avatar administers the experiments. First, she performs each gesture while explaining precisely how the participant should perform it. Next, she asks the participant to perform the gesture by giving a “Go” signal.
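The laterality rule can be made concrete with a small labeling sketch. Everything in it is hypothetical: the record fields, the gesture IDs and the table of which hand each laterality-sensitive gesture requires are assumptions for illustration, not the dataset's actual annotation format or the clinicians' procedure.

```python
from dataclasses import dataclass

# Hypothetical table: gesture ID -> hand the gesture must be performed with.
# Which gestures are laterality-sensitive, and their IDs, are assumptions here.
REQUIRED_HAND = {"S3": "right"}


@dataclass
class GestureAttempt:
    """One repetition of a gesture (hypothetical annotation record)."""
    gesture_id: str    # e.g. "S3" (hypothetical)
    start_frame: int   # first frame of the repetition in the continuous video
    end_frame: int     # last frame of the repetition
    hand_used: str     # "left" or "right"
    movement_ok: bool  # clinician's judgement of the movement itself


def label(attempt: GestureAttempt) -> str:
    """Label an attempt, treating a wrong-hand performance as incorrect."""
    required = REQUIRED_HAND.get(attempt.gesture_id)
    if required is not None and attempt.hand_used != required:
        # Laterality-sensitive gesture not performed with the required hand.
        return "incorrect"
    return "correct" if attempt.movement_ok else "incorrect"


if __name__ == "__main__":
    print(label(GestureAttempt("S3", 120, 210, "left", True)))   # incorrect
    print(label(GestureAttempt("S3", 300, 385, "right", True)))  # correct
```

Keeping the laterality check separate from the clinician's judgement of the movement makes it explicit that a well-executed gesture can still be labeled incorrect because of the hand used.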

Several tools with user-friendly interfaces have also been developed to help clinicians analyze the subjects' performances, including:

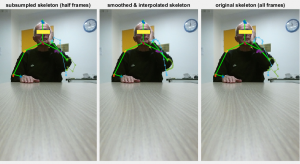

- Trajectory analysis

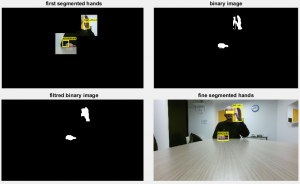

- Hand tracking and depth segmentation tool

- Visualization and performance analysis (detection of reaction and movement times, key-frame detection, …)
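To illustrate what detecting reaction and movement times from skeleton data can involve, here is a minimal sketch, not the dataset authors' tool, of one common threshold-based approach: reaction time runs from the “Go” signal to movement onset, and movement time from onset to the end of movement. It assumes a hand-joint trajectory in metres sampled at 30 fps (the Kinect v2 body-tracking rate) and an arbitrary speed threshold of 0.1 m/s; the function name and parameters are hypothetical.

```python
import numpy as np


def reaction_and_movement_times(positions, go_frame, fps=30.0, speed_thresh=0.1):
    """Hypothetical threshold-based estimate of reaction and movement times.

    positions: (T, 3) array of one hand joint's 3D position in metres, one row per frame.
    go_frame: index of the frame at which the "Go" signal was given.
    speed_thresh: speed (m/s) above which the hand counts as moving (assumed value).
    Returns (reaction_time_s, movement_time_s), or None if no movement follows the signal.
    """
    positions = np.asarray(positions, dtype=float)
    # speed[i] is the hand speed between frames i and i + 1.
    speed = np.linalg.norm(np.diff(positions, axis=0), axis=1) * fps
    moving = speed > speed_thresh
    # Only consider movement that starts after the Go signal.
    after = np.flatnonzero(moving[go_frame:])
    if after.size == 0:
        return None
    onset = go_frame + after[0]        # frame where the first moving interval starts
    offset = go_frame + after[-1] + 1  # frame where the last moving interval ends
    return (onset - go_frame) / fps, (offset - onset) / fps


if __name__ == "__main__":
    # Synthetic trajectory: still until frame 30, then 0.5 m/s along x until frame 45.
    x = np.clip(np.arange(60) - 30, 0, 15) * (0.5 / 30)
    traj = np.stack([x, np.zeros(60), np.zeros(60)], axis=1)
    print(reaction_and_movement_times(traj, go_frame=10))
```

Taking the last above-threshold frame as the end of movement keeps the sketch short but treats pauses inside a gesture as part of the movement; a practical tool would likely smooth the trajectory and handle multiple movement segments.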

Download:

Dataset request form:

Click to download the request form.

To access the dataset, please download the form, fill it in, and send it by email to f.negin@gmail.com or farhood.negin@inria.fr.

- Dataset (15 GB)

- Code and Tools (will be available soon)

Citation:

Please cite the following paper if you use this dataset in your publications:

@article{negin2018praxis,

title={PRAXIS: Towards Automatic Cognitive Assessment Using Gesture Recognition},

author={Negin, Farhood and Rodriguez, Pau and Koperski, Michal and Kerboua, Adlen and Gonz{\`a}lez, Jordi and Bourgeois, Jeremy and Chapoulie, Emmanuelle and Robert, Philippe and Bremond, Francois},

journal={Expert Systems with Applications},

year={2018},

publisher={Elsevier}

}

https://doi.org/10.1016/j.eswa.2018.03.063

People:

- Farhood Negin (farhood.negin at inria dot fr)

- Jeremy Bourgeois (jeremy.bourgeois at unice dot fr)

- Emmanuelle Chapoulie (emmanuelle.chapoulie at gmail dot com)

- Philippe Robert (probert at unice dot fr)

- Francois Bremond (francois.bremond at inria dot fr)