Category: Video understanding

Object tracking in SUP

Object tracking description

ViSEvAl result comparison

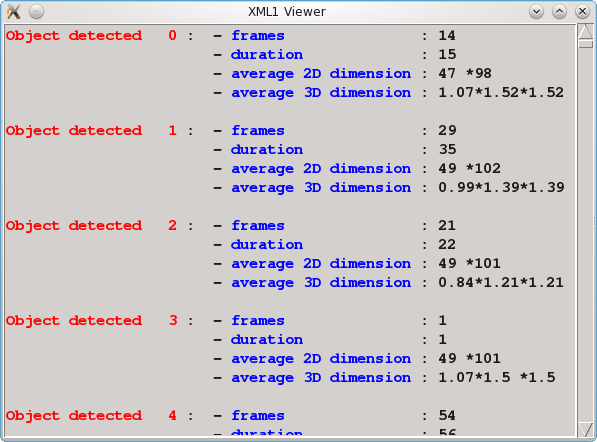

XML1 Viewer

Multi-sensor fusion for daily living activities recognition in the older people domain

I am currently researching the use of Information and Communication Technologies as tools for preventative care and diagnosis support in the elderly population. Our current approach uses accelerometers and video sensors to recognize instrumental activities of daily living (IADL, e.g., preparing coffee, making a phone call). Clinical studies have pointed…

ScReK tool

Daily evaluation of SUP

ViSEvAl software

ViSEvAl is released under the GNU Affero General Public License (AGPL). At INRIA, an evaluation framework has been developed to assess the performance of gerontechnologies and video surveillance. This framework aims to better understand the added value of new technologies for home-care monitoring and other services. This platform is available to the scientific…

MOG segmentation
