Overview

This document presents the artifact for the submitted journal article “CertiCAN: Certifying CAN Analyses and Their Results”. The theories and tools presented in the paper have been formally specified using the Prosa library and verified using the Coq proof assistant. Therefore, the correctness of proofs can be automatically and mechanically verified. It is possible to reproduce all results presented in the paper following the instructions below.

The rest of this document is structured as follows:

- Summary

- Installation

- Checking the correctness of proofs

- Locating the specifications and the main theorems

- Experimental evaluation

I. Summary

The article introduces CertiCAN, a tool produced using the Coq proof assistant for the formal certification of CAN analysis results. Result certification is a process that is light-weight and flexible compared to tool certification, which makes it a practical choice for industrial purposes.

The analysis underlying CertiCAN, which is based on a combined use of two well-known CAN analysis techniques, is computationally efficient. Experiments demonstrate that CertiCAN is faster than the corresponding certified combined analysis. More importantly, it is able to certify the results of RTaW-Pegase, an industrial CAN analysis tool, even for large systems. This result paves the way for a broader acceptance of formal tools for the certification of real-time systems analysis results.

II. Installation

In order to compile and mechanically check our proofs, the Coq proof assistant and the Mathematical Components library must be installed. For this, we recommend using our VM image for the artifact evaluation, which provides an operating system Ubuntu 18.04.5 and all required tools (eg. Ocaml, Coq, Coqide …). The Coq and OCaml sources as well as test files can be found in the Desktop directory.

To boot the VM image you must:

- Download and install VirtualBox

- Download our packaged VM image file

- Double-click on the downloaded VM image file certican.ova and then choose the Import button (before importing the VM, you can set the size of memory, 4Gb by default.).

If it does not work, please check the size of memory and disk reserved for the VM. It requires at least 4Gb of memory and 20Gb of disk. - Start the VM (user name and password are both: certican)

By default, the keyboard layout is set as English QWERTY, you can change it by using the command setxkbmap if you like (e.g., setxkbmap fr for a French layout).

Manual installation (Skip if you choose to use the VM)

If you prefer to install the environment in your system, please install the following versions:

and our proofs as well as other related files can be downloaded here.

If you would like to use Coqide to read our Coq files (*.v), please set “Edit -> Preferences -> Project -> project file” as “_CoqProject_coqide” inside coqide GUI.

III. Checking the correctness of proofs

In the following, we suppose that you use the VM and are in the directory /home/certican/Desktop/certican-v2.

The correctness of our proofs can be automatically checked by running the following commands in Terminal.

First of all, you need to access the directory code-source (Recall: In the VM, the complete path should be /home/certican/Desktop/certican-v2/code-source); Then,

1) Remove binary files if there exists

$ make clean

2) Compile (-j4 means using 4 threads, you can change this number). During the compilation, there may be some warnings about the deprecated definitions, e.g., perm_eq_sym, hints etc. At the end of the compilation, there will be some extraction warnings.

$ make -j4

3) Verify the proofs

$ make validate

This command will compile the proofs to generate compiled binary files (*.vo) then run coqchk, a stand alone Coq verifier.

After a few minutes, you will obtain the following output:

| CONTEXT SUMMARY ===============* Theory: Set is predicative* Axioms: Coq.ssr.ssreflect.Under_eq.Under_eq mathcomp.ssreflect.finfun.FinfunDef.finfunE mathcomp.ssreflect.fintype.SubsetDef.subsetEdef Coq.ssr.ssreflect.Under_iff.Under_iff Coq.ssr.ssreflect.Under_eq.Over_eq Coq.ssr.ssreflect.Under_eq.Under_eq_from_eq mathcomp.ssreflect.fintype.Finite.EnumDef.enumDef Coq.ssr.ssreflect.Under_eq.under_eq_done mathcomp.ssreflect.tuple.FinTuple.enumP Coq.ssr.ssreflect.Under_iff.over_iff_done mathcomp.ssreflect.tuple.FinTuple.enum mathcomp.ssreflect.bigop.BigOp.bigopE mathcomp.ssreflect.tuple.FinTuple.size_enum mathcomp.ssreflect.finfun.FinfunDef.finfun Coq.ssr.ssreflect.Under_iff.Over_iff mathcomp.ssreflect.fintype.CardDef.card mathcomp.ssreflect.fintype.CardDef.cardEdef Coq.ssr.ssreflect.Under_eq.over_eq mathcomp.ssreflect.bigop.BigOp.bigop mathcomp.ssreflect.fintype.SubsetDef.subset Coq.ssr.ssreflect.Under_eq.over_eq_done Coq.ssr.ssreflect.Under_iff.under_iff_done mathcomp.ssreflect.fintype.Finite.EnumDef.enum Coq.ssr.ssreflect.Under_iff.over_iff Coq.ssr.ssreflect.Under_iff.Under_iff_from_iff |

In the output, there are a list of axioms, i.e., mathcomp.ssreflect.* and Coq.ssr.ssreflect.*: files from the Mathematical Components library. Inside these files, defined axioms are not real axioms but interfaces used in Module system.

Our files (starting with rt.*) do not use any other axiom. This means that our proofs are complete and correct.

Note that the Coq verifier coqchk only checks the logical information i.e. type, syntax and proof etc. One still need to manually check that specifications and the main theorems are indeed what they are supposed to be.

IV. Reading the Coq specification

This section describes the basics of a Coq formalization and relates some definitions and lemmas to their presentation in the article.

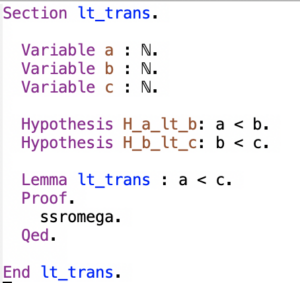

First of all, in order to read the Coq specification, we need to understand the section-style proof structure used in the Prosa library. Consider the following example:

We prove the transitivity of the operation ‘lt’ for naturel numbers. For any 3 naturel numbers: a, b, and c, assume that a < b and b < c, then we can prove that a < c. The ellipsis between ‘Proof’. And ‘Qed.’ represents the proof scripts for this lemma that will be mechanically checked by Coq.

Secondly, let us briefly recall some basic definitions:

- the time (instant, duration) is defined by naturel numbers as defined in time definitions.

- the type of job and task is defined by eqType that is a type with decidable equality

The arrival sequence is defined by a function: time -> seq Job as follows (or in arrival sequence definition )

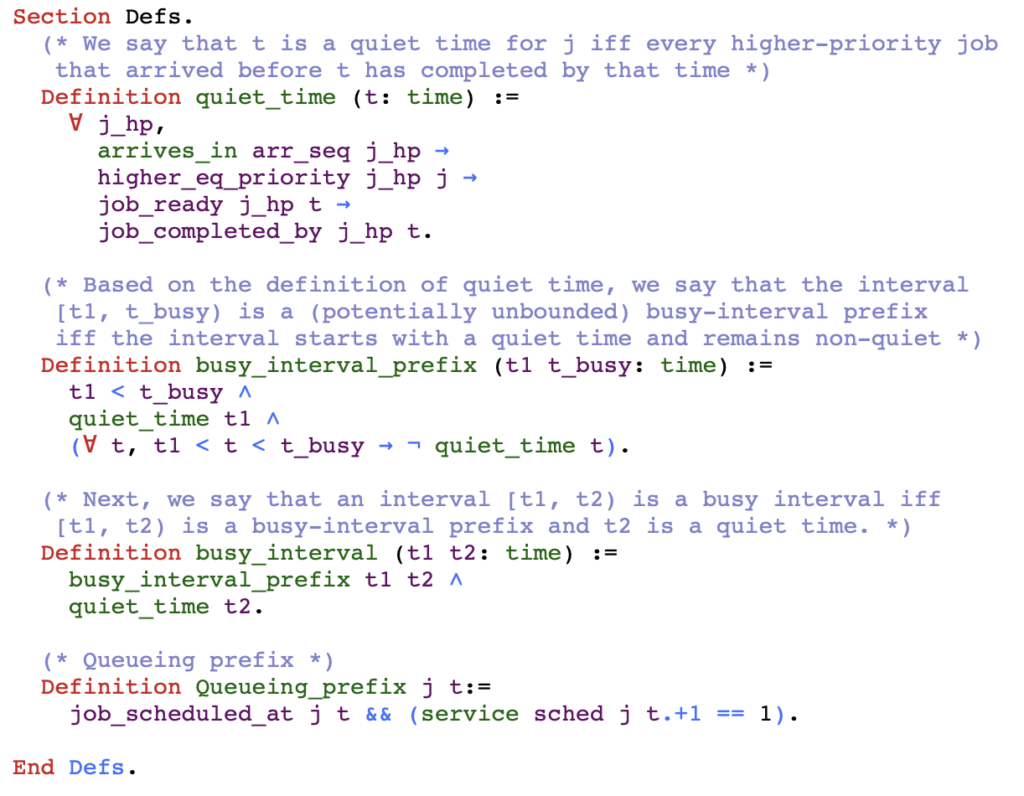

The level-k quiet time and busy window related to Definition 1 and 2 in the article are defined as follows (or in [Coq definitions])

The next section provides links to other main Coq definitions and lemmas.

V. Locating the specifications and the main theorems

Our proofs are built on top of the Prosa library, therefore the basic specifications (e.g. arrival sequence, schedule, busy window… etc.) have been defined in Prosa and manually checked. In addition, we have also introduced a few specifications for model of transactions with tasks with offsets and the FPNP scheduling policy.

In the following, we provide links to the main notions and theorems used in our development. Whenever a specification is from Prosa, we mark it explicitly.

V.1. System behavior

Arrival sequences (AS) (from Prosa)

Definition: A valid arrival sequence respects:

Schedule (from Prosa)

Definition: A valid schedule respects:

Definition of a work-conserving schedule

V.2. Task model: Transaction with offsets

A transaction is defined as a list of tasks which

- are periodic with offsets

- share the same local clock (i.e., respect transaction model)

V.3. Scheduling policy

Definition of the FPNP scheduling policy

Jobs from the same task execute in FIFO order

V.4. Workload bound function

Definition of the workload bound function for a single task

Proof of correctness of the workload bound within a busy window related to the precise analysis

Proof of correctness of the workload bound within a queueing prefix related to the precise analysis

Proof of correctness of the workload bound within a busy window related to the approximate analysis

Proof of correctness of the workload bound within a queueing prefix related to the approximate analysis

Proof of correctness of the workload bound within a busy window related to the combined analysis (refined scenario)

Proof of correctness of the workload bound within a queueing prefix related to the combined analysis (refined scenario)

V.5. Analysis

Busy window

Definition of busy interval (from Prosa)

Definition of queueing prefix

Response time analysis

Proof of correctness of the generic response time analysis by abstracting the type of candidates

Proof of correctness of the precise analysis

Proof of correctness of the approximate analysis

Proof of correctness of the implementation for computing the precise result

Proof of correctness of the implementation for computing the approximate result

Algorithm of the dominant candidates

Proof of correctness of the dominant candidates

Definition of the discontinued points

Proof of correctness of the discontinued points

Algorithm of the combined analysis

Proof of correctness of the combined analysis with optimization

Algorithm of the result certification (CertiCAN)

Proof of correctness of the CertiCAN with optimization

VI. Experimental evaluation

After having completed the proofs, we can use the Coq extraction technique to automatically generate three Ocaml tools ( an approximate analyzer, a combined analyzer, and CertiCAN).

They can be found in the files CAN_analyzer_offset.ml(i) in the directory certican-v2/extracted-tools. They contain the following functions:

- approximate_analyzer (AA): the analyzer based on the approximate analysis

- combined_analyzer (CA): the analyzer based on the combined analysis

- certican: the certifier of CAN analysis results (response times)

These files can also be re-extracted by executing ExtractionTools.v inside certican-v2/code-source. The new files will be generated in the directory certican-v2/code-source/analysis/uni/offset. Note that in order to record the evaluated scenarios during our experimentations, we manually added a variable count_exact in the files CAN_analyzer_offset.ml(i) which are stored in extracted-tools/with-counter.

The Coq extraction technique is used for building certified functional programs by extracting them from the verified Coq functions or specifications.

The experiment folder contains the two directories:

- example/: it contains an example for testing these extracted tools.

- exp-in-thesis/: it contains all necessary files for our experiments evaluated in the paper.

VI.1 Example

An example for testing extracted tools.

- System.ml: a system to analyze.

- rtaw_results/: it contains the results for this system computed by RTaW-Pegase. Note that this system is translated to System.csv to adapt Pegase input format. rtaw_runtime.txt contains the runtime of Pegase for analyzing this system, System_WorstCaseAnalysis.csv contains the analysis results, and rtaw_analysis_results_unit_second.txt only contains the response times of tasks in that system with the same order as in System.ml.

- certified_results/: it is used for collecting the results computed by the extracted tools.

Execute the following bash file to test them:

$ ./test_system.sh

This command compiles the *.ml and *.mli files then calls the four different tools to analyze the system from System.ml.

After a few seconds, the results will be computed and stored in certified_results/info.txt:

| #System | #scanrios

precise analyzer |

runtime(s)

combined analyzer |

#scanrios

combined analyzer |

runtime(s)

appro analyzer |

#scanrios

appro analyzer |

runtime(s)

certican |

#scanrios

certican |

certified

result |

|

| 1 |

1183 | 0.184 |

989 |

0.148 |

585 |

0.144 |

585 |

true* |

0 |

*: When the result to certify is greater than or equal to the result computed by the combined analyzer, certican returns true; otherwise false. In this example, certican certifies the results computed by Pegase (it is stored in rtaw_analysis_results_unit_second.txt)

Meanwhile, two files (appro_results.txt, combined_results.txt) containing the computed results (note that in these files, the time unit is millisecond) have been generated in the same directory (certified_results/).

Interested readers can modify system parameters in System.ml or Pegase results in rtaw_analysis_results_unit_second.txt to test these tools.

VI.2 Experimentation

In exp-in-thesis/ecu7-15:

- NETCARBENCH-config/: it contains the configuration for the NETCARBENCH generator

- xml-systems-generated-NETCARBENCH/: it contains all generated systems with xml format (set*.xml), which are translated to the right formats for extracted tools and for Pegase in

- systems/: it contains set*.ml for extracted tools and set*.csv for Pegase

- tools/: it contains extracted tools and a bash script for compiling these tools

- results/: it is used to collect the computed results

- appro/ for approximate analyzer

- combined/ for the combined analyzer

- figure/ containing a python program for drawing the figures presented in the paper

- rtaw/ containing the results computed by Pegase

- rtaw_runtime.txt containing the runtime of Pegase for analyzing each system

- all_results.txt containing the results (runtime and evaluated scenarios for each system) with the same format as in the table presented in V.1 Example

Execute the following bash file (in tools/) to start the experiment:

$ ./analyzers.sh

This command will take a couple of hours (depending on your computer performance) for evaluating 3000 systems.

After the previous command is terminated, go to the results/figure/ folder then execute

$ python3 figures.py

The corresponding figures would appear in the same folder.

The command /experiment/sup-exp/runAll.sh reproduces the experiments of Table 3, The results are writtent in the four /tool-… /results/statistics.txt files.

If you have any question about this artifact, please contact pascal dot fradet at inria dot fr