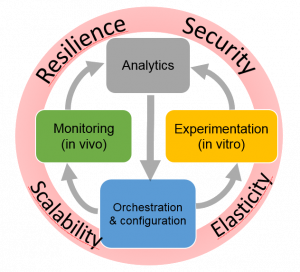

RESIST is structured into four main research objectives, namely Monitoring, Experimentation, Analytics and Orchestration.

Context

The increasing number of components (users, applications, services, devices) involved in today’s Internet, as well as their diversity, makes the Internet a very dynamic environment. Networks and cloud data centers have become vital elements and an integral part of the emerging 5G infrastructure. Indeed, networks continue to play their role of interconnecting devices and systems, and clouds are now the de facto technology for hosting services and for deploying storage and compute resources, and even Network Functions (NFs). While telecom operators have historically provided Internet connectivity and managed the Internet infrastructure and services, they are now losing control to other stakeholders, particularly Over-the-Top (OTT) content and service providers. As a result, the delivery of Internet services has increased in complexity, mainly to cope with the diversity and exponential growth of network traffic both at the core and at the edge. Intermediate players are multiplying, and each of them proposes solutions to enhance service access performance. In the Internet landscape, no single entity can claim a complete view of Internet topology and resources. Similarly, no single authority can control all interconnection networks and cloud data centers to effectively manage them and provide reliable and secure services to end users and devices at scale. The lack of clear visibility into Internet operations is exacerbated by the increasing use of encryption solutions, which contributes to traffic opacity.

Monitoring

The evolving nature of the Internet ecosystem and its continuous growth in size and heterogeneity call for a better understanding of its characteristics, limitations, and dynamics, both locally and globally, so as to improve application and protocol design, detect and correct anomalous behaviors, and guarantee performance. To face these scalability issues, from which knowledge about Internet traffic and usage can be extracted. Measuring and collecting traces require user-centered and data-driven paradigms to cover the wide scope of heterogeneous user activities and perceptions. In this perspective, we propose monitoring algorithms and architectures for large-scale environments involving mobile and Internet of Things (IoT) devices. also assesses , for example the need for dedicated measurement methodologies. We take into account not only the technological specifics of such paradigms for their monitoring but also the ability to use them for collecting, storing and processing monitoring data in an accurate and cost-effective manner. Crowdsourcing and third-party involvement are gaining in popularity, paving the way for massively distributed and collaborative monitoring. We thus investigate opportunistic mobile crowdsensing in order to collect user activity logs along with contextual information (social, demographic, professional) to effectively measure end-users’ . However, collaborative monitoring raises serious concerns regarding trust and the sharing of sensitive data (open data). Data anonymization and sanitization therefore need to be carefully addressed.
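As a minimal sketch of the kind of sanitization step mentioned above, the following Python fragment replaces a user identifier with a keyed pseudonym and coarsens timestamps before a record is shared. The record fields (`user_id`, `app`, `timestamp`) and both function names are hypothetical and chosen only for illustration; they do not describe an existing RESIST tool. Keyed hashing is pseudonymization rather than full anonymization, so it would only be one building block of a real pipeline.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, key: bytes) -> str:
    """Map an identifier to a stable pseudonym using HMAC-SHA256 (truncated)."""
    digest = hmac.new(key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def sanitize_record(record: dict, key: bytes) -> dict:
    """Keep only the fields needed for analysis, in a less identifying form.

    Assumed (hypothetical) input fields: user_id, app, timestamp (seconds).
    """
    return {
        "user": pseudonymize(record["user_id"], key),
        "app": record["app"],
        # Round the timestamp down to the hour to reduce re-identification risk.
        "hour": record["timestamp"] // 3600 * 3600,
    }
```

The same key yields the same pseudonym, so activity logs from one contributor can still be correlated, while a party without the key cannot recompute the mapping from a list of candidate identifiers.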

Experimentation

Of paramount importance in our target research context is experimental validation using testbeds, simulators and emulators. In addition to using various existing experimentation methodologies, RESIST contributes in , particularly focusing on elasticity and resilience. We develop and deploy testbeds and emulators for such as SDN and NFV, to enable large-scale in-vitro experiments combining all aspects of Software-Defined Infrastructures (server virtualization, SDN/NFV, storage). Such fully controlled environments are particularly suitable for our experiments on resilience, as they ease the management of fault injection features. We are playing a central role in the development of the Grid’5000 testbed and our objective is to reinforce our collaborations with other testbeds, towards a in order to enable experiments to scale to multiple testbeds, providing a diverse environment reflecting the Internet itself. Moreover, our research focuses on extending the infrastructure virtualization capabilities of our Distem emulator, which provides a flexible software-based experimental environment. Finally, methodological aspects are also important for ensuring , and raises many challenges regarding testbed design, experiment description and orchestration, along with automated or assisted provenance data collection.

Analytics

A large volume of data is processed as part of the operations and management of networked systems. These include traditional monitoring data generated by network components and components’ configuration data, but also data generated by dedicated network and system probes. requires the elaboration of novel capable of coping with large volumes of data generated from various sources, in various formats, possibly incomplete, not fully described or even encrypted. We use machine learning techniques (*e.g.*, Topological Data Analysis or multilayer perceptrons) and leverage our domain knowledge to fine-tune them. For instance, machine learning on network data requires the definition of new distance metrics capable of capturing the properties of network configurations, packets and flows, similarly to edge detection in image processing. RESIST contributes to developing and making publicly available an to support Intelligence-Defined Networked Systems. Specifically, the goal of the analytics framework is to facilitate the extraction of knowledge useful for . The extracted knowledge is then leveraged for orchestration purposes to achieve system elasticity and guarantee its resilience. Indeed, predicting when, where and how issues will occur is very helpful in deciding how to provision resources at the right time and place. Resource provisioning can be done either reactively, to solve the issues, or proactively, to prepare the networked system for absorbing the incident (resiliency) in a timely manner thanks to its elasticity. While the current trend is towards centralization where the collected data is exported to the cloud for processing, we seek to extend this model by also developing and evaluating novel approaches in which . This combination of big data analytics with network softwarization enablers (SDN, NFV) can enhance the scalability of the monitoring and analytics infrastructure.
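To make the idea of a domain-aware distance metric more concrete, here is a small Python sketch that compares two flows using networking semantics instead of treating their fields as plain numbers. Everything in it is an assumption made for illustration: the `Flow` fields, the three components and the default weights are hypothetical and are not the metrics developed by RESIST.

```python
import ipaddress
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    src_ip: str    # IPv4 source address
    dst_port: int  # destination port
    n_bytes: int   # total bytes carried by the flow


def common_prefix_len(a: str, b: str) -> int:
    """Number of leading bits shared by two IPv4 addresses (0 to 32)."""
    xor = int(ipaddress.IPv4Address(a)) ^ int(ipaddress.IPv4Address(b))
    return 32 - xor.bit_length()


def flow_distance(f: Flow, g: Flow,
                  w_ip: float = 1.0, w_port: float = 1.0,
                  w_size: float = 1.0) -> float:
    """Weighted sum of three per-field distances (illustrative weights)."""
    # Addresses sharing a long prefix are topologically close.
    d_ip = 1.0 - common_prefix_len(f.src_ip, g.src_ip) / 32
    # Ports are categorical: 80 and 81 are not "near" each other.
    d_port = 0.0 if f.dst_port == g.dst_port else 1.0
    # Volumes span orders of magnitude, so compare them on a log scale.
    d_size = abs(math.log1p(f.n_bytes) - math.log1p(g.n_bytes))
    return w_ip * d_ip + w_port * d_port + w_size * d_size
```

With such a function, two flows from the same subnet to the same port end up closer than flows that merely have numerically similar addresses or ports, which is the kind of property a generic Euclidean distance on raw fields would miss.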

Orchestration

The ongoing transformations in the Internet ecosystem, including network softwarization and cloudification, bring new management challenges in terms of service and resource orchestration. Indeed, the growing sophistication of Internet applications and the complexity of services deployed to support them require novel models, architectures and algorithms for their automated and . Network applications are more and more instantiated through the , that are offered by and are subject to changes and updates over time. In this dynamic context, efficient orchestration becomes fundamental for ensuring performance, resilience and security of such applications. We are investigating the chaining of different functions for supporting the security protection of smart devices, based on the networking behavior of their applications. From a resilience viewpoint, this orchestration at the network level allows the dynamic to absorb the effects of congestion, such as link-flooding behaviors. The goal is to drastically reduce the effects of such congestion by imposing dynamic policies on all traffic, where the network adapts itself until it reaches a stable state. We also explore mechanisms for within a virtualized network. Corrective operations can be performed through dynamically composed VNFs (Virtualized Network Functions) based on available resources, their dependencies (horizontal and vertical), and target service constraints. We also conduct research on verification methods for automatically assessing and validating the composed chains. From a security viewpoint, this orchestration provides that capture adversaries’ intentions early through the available resources, so that their attacks can be mitigated proactively. We mainly rely on the results obtained in our research activity on security analytics to build such policies, and the orchestration part focuses on the required algorithms and methods for their automation.
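The composition of VNFs under dependency and resource constraints can be illustrated with a deliberately simplified Python sketch. It assumes that dependencies are given as a mapping from each VNF to the set of VNFs that must precede it, and that resources reduce to a single CPU budget; the function name `compose_chain` and the VNF names in the usage note are hypothetical. It relies on `graphlib` from the Python standard library (3.9 or later) and is not the composition algorithm studied by RESIST.

```python
from graphlib import CycleError, TopologicalSorter


def compose_chain(dependencies: dict, cpu_demand: dict, cpu_available: float):
    """Return an ordering of VNFs that respects dependencies, or None.

    dependencies: maps each VNF name to the set of VNFs it must follow.
    cpu_demand:   maps each VNF name to its (assumed) CPU requirement.
    """
    # Collect every VNF mentioned, either as a key or as a predecessor.
    vnfs = set(dependencies)
    for predecessors in dependencies.values():
        vnfs |= set(predecessors)

    # Reject the chain if the aggregate demand exceeds the budget.
    if sum(cpu_demand[v] for v in vnfs) > cpu_available:
        return None

    try:
        # A topological order places every VNF after its predecessors.
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError:
        # Circular dependencies cannot be turned into a linear chain.
        return None
```

For example, with `{"firewall": set(), "ids": {"firewall"}, "rate_limiter": {"ids"}}` and a sufficient CPU budget, the function returns the chain firewall, ids, rate_limiter. A verification step such as the one mentioned above would then check the composed chain against the target service constraints.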