You can go directly to the videos and the publications.

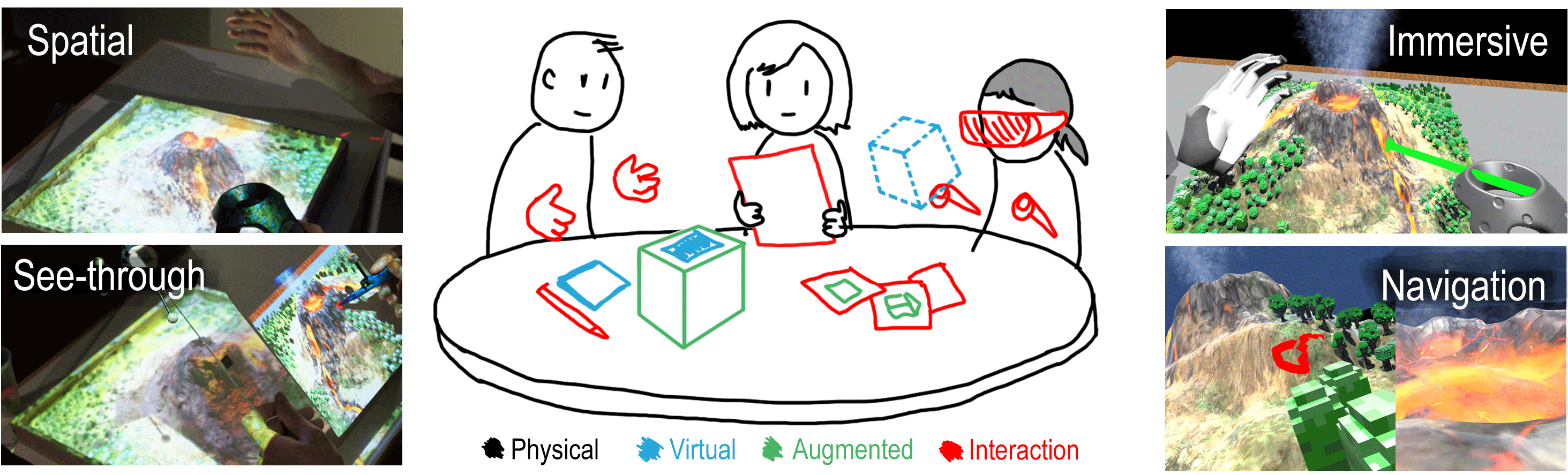

This project explores how Mixed Reality can combine physical and virtual reality. Early explorations combined Spatial Augmented Reality with Virtual Reality. These two technologies have complementary characteristics but have evolved separately. Spatial Augmented Reality (SAR) augments the environment using projectors or screens, without requiring users to wear or hold any equipment. Because it keeps a single, unified frame of reference, it supports social interaction and natural perception of the space. However, the augmentation is limited by physical constraints: for example, it requires a surface on which to display information. Immersive head-mounted displays, on the other hand, are not limited by the physical properties of the environment, yet they isolate users from their surroundings.

Providing a unified frame of reference for both SAR and immersive displays lets users select the visualization best suited to a given task. This enables both asymmetric collaboration between users and back-and-forth transitions between displays for a single user.

These explorations were then extended by incrementally combining additional modalities. In this way, one or more users can choose the desired modalities and immerse themselves only as much as the task requires. As a result, the virtual world can be framed in relation to the physical one.

Combining physical and virtual spaces could benefit multiple applications, particularly asymmetric collaboration. Complex industrial settings such as the aerospace industry already use Virtual Reality to ease the design and iteration of ideas. Yet the resulting decisions are usually made by experts discussing in physical meeting rooms. Building on our previous work, we explored how to bridge these two spaces to improve awareness and communication between them.

Our current efforts focus on understanding users' ability to interact with these heterogeneous representations, and the results so far are very promising.

Videos

Publications

Joan Sol Roo, Jean Basset, Pierre-Antoine Cinquin, Martin Hachet. Understanding Users’ Capability to Transfer Information between Mixed and Virtual Reality: Position Estimation across Modalities and Perspectives. CHI ’18 – Conference on Human Factors in Computing Systems, Apr 2018, Montreal, Canada.

Joan Sol Roo, Martin Hachet. One Reality: Augmenting How the Physical World is Experienced by combining Multiple Mixed Reality Modalities. UIST 2017 – 30th ACM Symposium on User Interface Software and Technology, Oct 2017, Quebec City, Canada.

Damien Clergeaud, Joan Sol Roo, Martin Hachet, Pascal Guitton. Towards Seamless Interaction between Physical and Virtual Locations for Asymmetric Collaboration. VRST 2017 – 23rd ACM Symposium on Virtual Reality Software and Technology, Nov 2017, Gothenburg, Sweden.

Joan Sol Roo, Martin Hachet. Towards a Hybrid Space Combining Spatial Augmented Reality and Virtual Reality. 3DUI ’17 – IEEE Symposium on 3D User Interfaces, Mar 2017, California, USA. http://3dui.org/ (Best tech-note award)