Team seminars are called MokaMeetings. They are organized on a monthly basis. Subscribe to the mailing list to be notified about upcoming seminars.

The list of past seminars is available here.

- MokaMeeting of June 11, 2025: Guillaume Garrigos (Université Paris Cité, LPSM) and Mathurin Massias (Inria Lyon, OCKHAM)

Guillaume Garrigos

Title: SGD and the stochastic Polyak step size: analysis of a fraudulent but promising algorithm

Abstract: The choice of step size for stochastic gradient descent is crucial, both for its theoretical analysis and for its practical implementation. In theory, the step size often has to be chosen as a function of problem parameters that are assumed to be known (smoothness of the function, distance between the initialization and the solution, etc.). In practice, the step size is selected from a grid of candidates, which is time-consuming. So-called adaptive methods aim to get rid of both problems by providing step-size rules that depend as little as possible on the properties of the problem. This is the setting of this talk: we study a stochastic version of the "Polyak step size", a formula proposed by Polyak that defines the step size from values known at the current iterate. We will see that this choice turns out to be very powerful, yielding convergence rates that are optimal (in a certain sense) in both the smooth and the nonsmooth settings, all without knowing any of the classical constants associated with the problem. Of course, there is a catch: to implement this algorithm, the user must know a new quantity that is as hard to obtain as solving the problem itself! We will then discuss some special cases in which this quantity is trivial (the so-called interpolation regime), as well as others in which it can be reasonably approximated.
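
As an illustration of the step size discussed in this abstract, here is a minimal sketch of SGD with a stochastic Polyak step, under assumptions of our own rather than the talk's: we use the common form `gamma_k = (f_i(x_k) - f_i^*) / ||grad f_i(x_k)||^2` (the analyzed variant may differ, e.g. by a constant factor or a cap on the step), we assume the hard-to-know quantity is the per-sample optimal value `f_i^*`, and we pick a consistent least-squares problem where interpolation holds, so that `f_i^*` is simply 0. The function name `sps_sgd` is hypothetical.

```python
import random


def sps_sgd(A, b, x0, iters=2000, seed=0):
    """SGD with a stochastic Polyak step on f_i(x) = 0.5 * (a_i . x - b_i)**2.

    Assumes interpolation: a common x makes every f_i zero, so the
    per-sample optimal value f_i^* used by the step is 0.
    """
    rng = random.Random(seed)
    x = list(x0)
    for _ in range(iters):
        i = rng.randrange(len(A))  # sample one data point uniformly
        a = A[i]
        r = sum(aj * xj for aj, xj in zip(a, x)) - b[i]  # residual
        f_i = 0.5 * r * r
        grad = [r * aj for aj in a]
        g2 = sum(g * g for g in grad)
        if g2 == 0.0:  # x already minimizes f_i: skip the update
            continue
        f_i_star = 0.0  # known here only because interpolation holds
        gamma = (f_i - f_i_star) / g2  # stochastic Polyak step size
        x = [xj - gamma * gj for xj, gj in zip(x, grad)]
    return x


# Consistent 3x2 system whose unique solution is (2, -1).
A = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b = [2.0, -1.0, 1.0]
print(sps_sgd(A, b, [0.0, 0.0]))  # approximately [2.0, -1.0]
```

No smoothness constant and no grid search appear in the loop; the price is the value `f_i^*`, which is free only in the interpolation regime.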

Mathurin Massias

Title: Expectations vs reality: on the role of stochasticity in generalization of flow matching

Abstract: Modern deep generative models can now produce high-quality synthetic samples that are often indistinguishable from real training data. A growing body of research aims to understand why recent methods, such as diffusion and flow matching techniques, generalize so effectively. Among the proposed explanations are the inductive biases of deep learning architectures and the stochastic nature of the conditional flow matching loss. In this work, we rule out the latter, the noisy nature of the loss, as a primary contributor to generalization in flow matching. First, we empirically show that in high-dimensional settings, the stochastic and closed-form versions of the flow matching loss yield nearly equivalent losses. Then, using state-of-the-art flow matching models on standard image datasets, we demonstrate that both variants achieve comparable statistical performance, with the surprising observation that using the closed form can even improve performance.
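
To make the "closed-form" target concrete, here is a sketch under assumptions that are ours, not necessarily the talk's: the linear path `x_t = (1 - t) x_0 + t x_1`, a Gaussian source `x_0 ~ N(0, I)`, and `x_1` drawn uniformly from a finite training set. The stochastic conditional loss regresses on `x_1 - x_0`; its conditional expectation given `x_t = x` is `u*(x, t) = (E[x_1 | x_t = x] - x) / (1 - t)`, where the posterior over training points is a softmax of `-||x - t y_j||^2 / (2 (1 - t)^2)`. The function name is hypothetical and the formula is valid for `0 <= t < 1`.

```python
import math


def closed_form_velocity(x, t, data):
    """Closed-form velocity u*(x, t) = E[x1 - x0 | x_t = x].

    Assumes x_t = (1 - t) * x0 + t * x1 with x0 ~ N(0, I) and x1 uniform
    over the finite list `data`; valid for 0 <= t < 1.
    """
    s = 1.0 - t
    # Given x1 = y, x_t ~ N(t * y, s^2 I): log-posterior weight of each point.
    logw = [-sum((xk - t * yk) ** 2 for xk, yk in zip(x, y)) / (2 * s * s)
            for y in data]
    top = max(logw)  # subtract the max for a numerically stable softmax
    w = [math.exp(lw - top) for lw in logw]
    z = sum(w)
    # Posterior mean E[x1 | x_t = x].
    mean = [sum(wj * y[k] for wj, y in zip(w, data)) / z for k in range(len(x))]
    # Along the path, x1 - x0 = (x1 - x_t) / (1 - t).
    return [(mk - xk) / s for mk, xk in zip(mean, x)]


# With a single training point, the velocity points straight at it.
print(closed_form_velocity([0.0, 0.0], 0.5, [[1.0, 2.0]]))  # [2.0, 4.0]
```

A stochastic target would use one sampled pair `(x_0, x_1)`; the closed form averages that target over every training point compatible with `x_t`.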

- MokaMeeting of April 9, 2025: Jean-Jacques Godeme (Inria Sophia, Laboratoire Jean-Dieudonne)

Title: Phase Retrieval using Bregman-based Geometry

Abstract: In this work, we investigate the phase retrieval problem for real-valued signals in finite dimension, a challenge encountered in various scientific and engineering disciplines. We explore two complementary approaches: retrieval with and without regularization. In both settings, our work focuses on relaxing the Lipschitz continuity assumption on the gradient, which is generally required by first-order splitting algorithms and does not hold for phase retrieval cast as a least-squares problem. The key idea is to replace the Euclidean geometry by a non-Euclidean Bregman geometry induced by an appropriate kernel. We use a Bregman gradient/mirror descent algorithm with this divergence to solve the phase retrieval problem without regularization, and we show exact recovery (up to a global sign), both in a deterministic setting and with high probability for a sufficient number of random measurements: Laplacian measurements (Fréchet naming) and Coded Diffraction Patterns (Fourier transform modulated by a Laplacian random matrix). Moreover, we prove the stability of this approach against small additive noise. Turning to phase retrieval with regularization, we first develop and analyze an Inertial Bregman Proximal Gradient algorithm for minimizing the sum of two functions in finite dimension, one of which is convex and possibly nonsmooth while the other is relatively smooth in the Bregman geometry. We provide both global and local convergence guarantees for this algorithm. Finally, we study noisy and stable recovery of low-complexity phase retrieval with regularization. For this, we formulate the problem as the minimization of an objective functional involving a nonconvex smooth data fidelity term and a convex regularizer promoting solutions that conform to some notion of low complexity related to their nonsmooth points. We establish conditions for exact and stable recovery and provide sample complexity bounds for random measurements ensuring that these conditions hold. These sample bounds depend on the intrinsic dimension of the signals to be recovered. Our new results allow us to go far beyond the case of sparse phase retrieval.
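
The unregularized Bregman gradient idea can be sketched on a toy real-valued instance. The following choices are our assumptions, not details from the talk: the objective `f(x) = 1/(4m) * sum_i ((a_i . x)^2 - y_i)^2`, the kernel `h(x) = ||x||^4 / 4 + ||x||^2 / 2` (a common choice for quadratic inverse problems), the relative smoothness constant `L = (1/m) * sum_i (3 ||a_i||^4 + ||a_i||^2 |y_i|)` that this kernel admits, and the step `0.9 / L`. Each iteration solves `grad h(x_next) = grad h(x) - gamma * grad f(x)`, which reduces to a scalar cubic. The sketch omits any spectral initialization and the inertial, regularized variant; all function names are hypothetical.

```python
import math


def sq_norm(v):
    return sum(vj * vj for vj in v)


def objective_and_grad(A, y, x):
    """f(x) = 1/(4m) * sum_i ((a_i . x)^2 - y_i)^2 and its gradient."""
    m = len(A)
    f, g = 0.0, [0.0] * len(x)
    for a, yi in zip(A, y):
        ax = sum(aj * xj for aj, xj in zip(a, x))
        r = ax * ax - yi
        f += r * r / (4 * m)
        for j, aj in enumerate(a):
            g[j] += r * ax * aj / m
    return f, g


def cubic_root(c):
    """Unique real root s >= 0 of s^3 + s - c = 0 (Cardano's formula)."""
    d = math.sqrt(c * c / 4 + 1 / 27)

    def cbrt(v):  # real cube root, also for negative v
        return math.copysign(abs(v) ** (1 / 3), v)

    return cbrt(c / 2 + d) + cbrt(c / 2 - d)


def bregman_gd(A, y, x0, iters=500):
    """Bregman gradient descent with the kernel h(x) = |x|^4/4 + |x|^2/2."""
    m = len(A)
    # Assumed relative smoothness constant of f with respect to h.
    L = sum(3 * sq_norm(a) ** 2 + sq_norm(a) * abs(yi) for a, yi in zip(A, y)) / m
    gamma = 0.9 / L
    x, history = list(x0), []
    for _ in range(iters):
        f, g = objective_and_grad(A, y, x)
        history.append(f)
        nx2 = sq_norm(x)
        # Dual (mirror) step: p = grad h(x) - gamma * grad f(x).
        p = [(nx2 + 1) * xj - gamma * gj for xj, gj in zip(x, g)]
        # Invert grad h(z) = (|z|^2 + 1) z: the norm s = |z| solves s^3 + s = |p|.
        s = cubic_root(math.sqrt(sq_norm(p)))
        x = [pj / (s * s + 1) for pj in p]
    history.append(objective_and_grad(A, y, x)[0])
    return x, history


A = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, -1.0]]
x_true = [1.0, 2.0]
y = [sum(aj * xj for aj, xj in zip(a, x_true)) ** 2 for a in A]
x, hist = bregman_gd(A, y, [1.0, 0.5])
print(hist[0], hist[-1])  # the objective decreases monotonically
```

With `gamma <= 1/L`, relative smoothness guarantees that the objective never increases, even though the gradient of `f` is not globally Lipschitz.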

- MokaMeeting of February 5, 2025: Aymeric Baradat (Lyon 1) and Grégoire Loeper (BNP)

The next MokaMeeting will be held at Université Paris Dauphine on Wednesday, February 5, at 2 p.m. in room P308 (note the unusual location!).

Aymeric Baradat

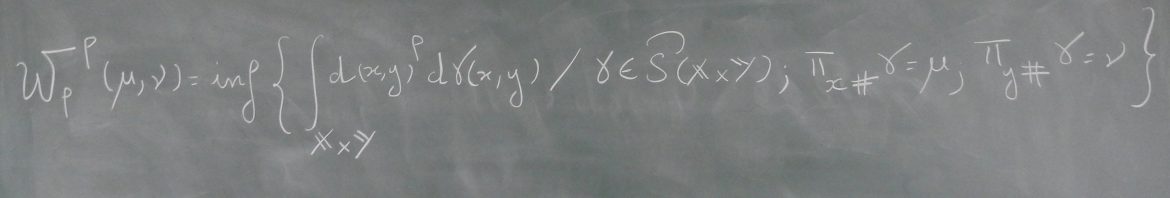

Title: Entropic JKO scheme for the Muskat problem

Abstract: The Muskat problem is a system of PDEs describing the evolution of two incompressible and immiscible fluids of different densities under the action of gravity. When the heavier fluid lies above the lighter one, the so-called Saffman-Taylor instability makes the system ill-posed in Sobolev spaces. Nevertheless, in the late 1990s Otto proposed studying a relaxation of this problem by interpreting it as a Wasserstein gradient flow and then considering the limit of the corresponding JKO scheme as the time step goes to zero. This procedure allowed him to draw a link between the Muskat problem and entropy solutions of one-dimensional conservation laws. In this talk, I will show that an entropic version of the JKO scheme is particularly well suited to this problem. On the practical side, it can be computed efficiently using a very simple Sinkhorn algorithm. On the theoretical side, it converges towards the solution of a well-posed system, shedding light on Otto's connection with conservation laws. This is a work in progress with Sofiane Cher
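
The abstract notes that the entropic JKO step can be computed with a Sinkhorn algorithm. As background only, here is a minimal sketch of the plain Sinkhorn iteration for entropic optimal transport between two discrete measures; it is not the speaker's JKO solver, and the terms specific to the Muskat problem are not modeled. The function name, the regularization `eps`, and the toy data are our assumptions.

```python
import math


def sinkhorn(a, b, C, eps=0.5, iters=500):
    """Entropic OT between discrete measures a (rows) and b (columns).

    Returns the coupling P = diag(u) K diag(v) with K = exp(-C / eps),
    obtained by alternately rescaling to match the row and column marginals.
    """
    n, m = len(a), len(b)
    K = [[math.exp(-C[i][j] / eps) for j in range(m)] for i in range(n)]
    u, v = [1.0] * n, [1.0] * m
    for _ in range(iters):
        u = [a[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    return [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]


xs, ys = [0.0, 0.5, 1.0], [0.2, 0.8]
C = [[(xi - yj) ** 2 for yj in ys] for xi in xs]  # squared distance cost
P = sinkhorn([1 / 3] * 3, [0.5, 0.5], C)
print([sum(row) for row in P])  # row marginals, close to [1/3, 1/3, 1/3]
```

A smaller `eps` approximates unregularized transport more closely but slows convergence and can make `K` underflow; log-domain implementations are the usual remedy.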

Grégoire Loeper

Title: Black and Scholes, Legendre and Sinkhorn

Abstract: This talk will give a unified overview of some recent and less recent contributions to derivatives pricing. The financial topics are option pricing with market impact and model calibration. The mathematical tools are fully nonlinear partial differential equations and martingale optimal transport. Among the new and fun (?) results are a Black-Scholes-Legendre formula for option pricing with market impact, a Measure Preserving Martingale Sinkhorn algorithm for martingale optimal transport, a new point of view on the Bass martingale, and some algorithms for exact local volatility calibration.