Kino Ai is a joint research project of the IMAGINE team at Inria Grenoble Alpes and the Performance Lab at Univ. Grenoble Alpes. Building on our previous work on “Multi-Clip Video Editing” and “Split Screen Video Generation”, we are working to provide a user-friendly environment for editing and watching ultra-high definition movies online, with an emphasis on recordings of live performances.

Kino Ai makes it possible to generate movies procedurally from existing footage of live performances.

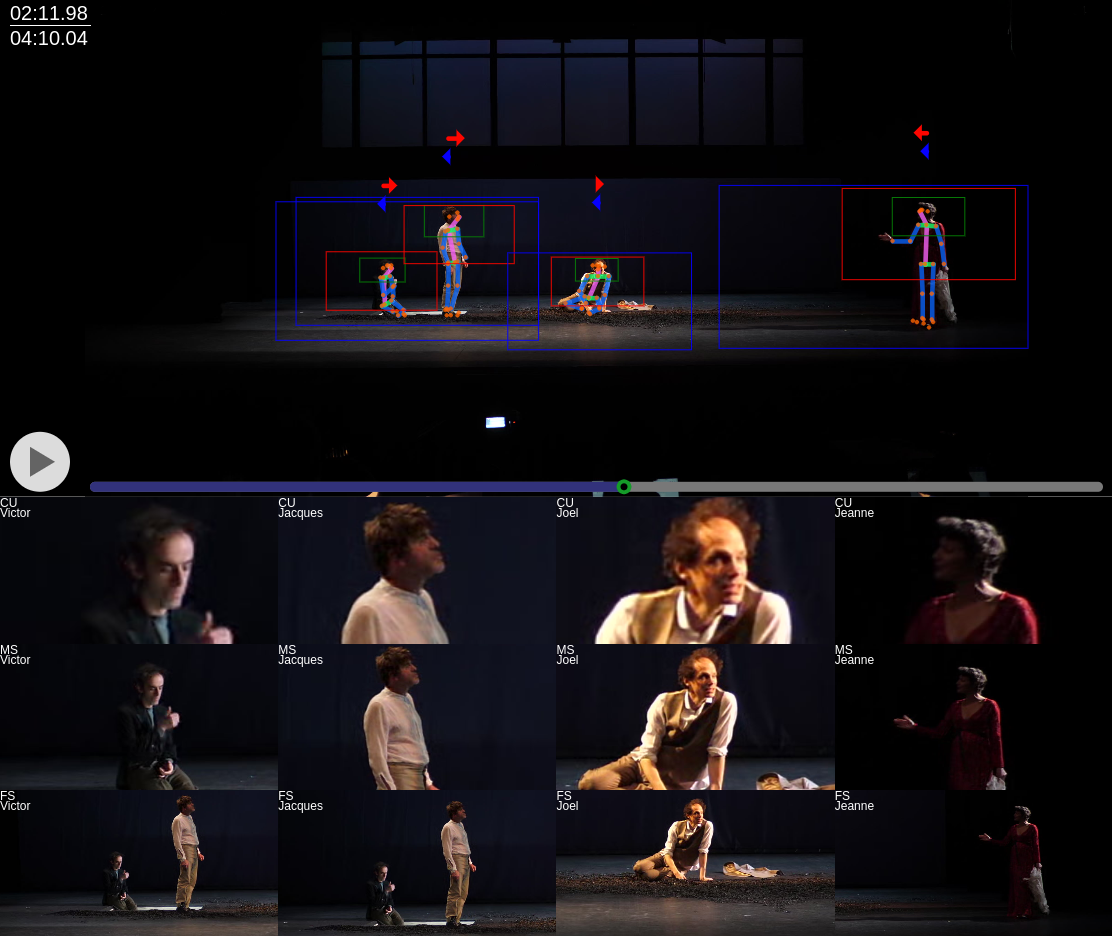

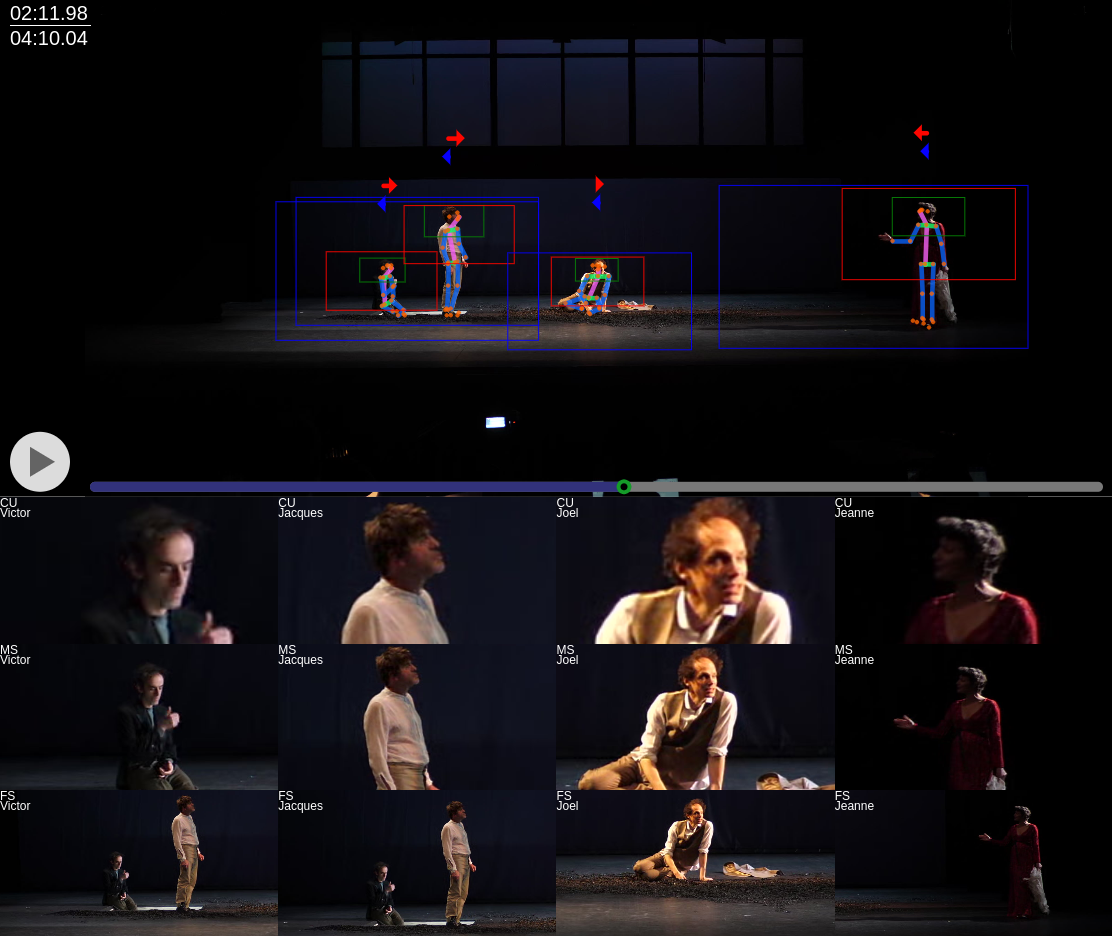

We use artificial intelligence for tracking and recognizing actors in the video, computing aesthetically pleasing virtual camera movements, generating a variety of interesting cinematographic rushes from a single video source, and editing them together into movies.
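To make the idea of a virtual camera concrete, here is a minimal Python sketch of how one rush could be cut out of a single wide shot once an actor has been tracked. It is an illustration only, not the method used by Kino Ai: the function names (`frame_crop`, `smooth_crops`), the `margin` and `alpha` parameters, and the exponential moving average used for smoothing are all assumptions chosen for brevity, and the actor bounding boxes are taken as given.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in source pixels


def frame_crop(box: Box, aspect: float, margin: float,
               src_w: float, src_h: float) -> Box:
    """Crop of the given aspect ratio (width / height) around an actor box.

    Hypothetical helper: pads the box by `margin`, grows one side to reach
    the target aspect ratio, and keeps the crop inside the source frame.
    """
    x0, y0, x1, y1 = box
    cx, cy = (x0 + x1) / 2, (y0 + y1) / 2
    # Padded size, with a 1-pixel floor to guard against degenerate boxes.
    w = max((x1 - x0) * (1 + margin), 1.0)
    h = max((y1 - y0) * (1 + margin), 1.0)
    # Grow the shorter side so that w / h == aspect.
    if w < h * aspect:
        w = h * aspect
    else:
        h = w / aspect
    # Shrink uniformly if the crop would be larger than the source frame.
    scale = min(1.0, src_w / w, src_h / h)
    w, h = w * scale, h * scale
    # Clamp the center so the crop stays inside the source frame.
    cx = min(max(cx, w / 2), src_w - w / 2)
    cy = min(max(cy, h / 2), src_h - h / 2)
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def smooth_crops(crops: List[Box], alpha: float = 0.2) -> List[Box]:
    """Exponential moving average over crop coordinates to reduce jitter.

    A simple stand-in for a real camera-trajectory optimizer.
    """
    if not crops:
        return []
    out = [crops[0]]
    for crop in crops[1:]:
        prev = out[-1]
        out.append(tuple(alpha * n + (1 - alpha) * p
                         for n, p in zip(crop, prev)))
    return out


# Usage: one tracked actor in a 3840x2160 wide shot, framed at 16:9.
track = [(900, 400, 1000, 600), (910, 400, 1010, 600), (930, 405, 1030, 605)]
rush = smooth_crops([frame_crop(b, 16 / 9, 0.5, 3840, 2160) for b in track])
```

Because every per-frame crop has the same aspect ratio and lies inside the source frame, their weighted averages do too, so the smoothed windows can be passed directly to a cropping step. A production system would likely replace the moving average with a smoother that also controls camera velocity and acceleration.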

Our methods were extensively tested during the rehearsals of “La fabrique des monstres”, a stage adaptation of Mary Shelley’s Frankenstein created by director Jean-François Peyret at Théâtre Vidy in Lausanne in January 2018.

Three short documentaries using cinematographic rushes created by Kino Ai were published online in October 2018.

The next steps in this long-term project will be (1) to make those techniques available online, using a dedicated movie browser that allows a remote user to compose complex, dynamic split-screen or multi-screen compositions along a traditional timeline interface; (2) to develop new algorithms for automatically editing the cinematographic rushes into movies based on a semantic analysis of the play-script, the video recording and the audio track, taking into account user preferences; and (3) to produce more examples of procedurally generated movies useful for teaching and researching the creative process at work in theatre and dance performances and rehearsals.

References:

Vineet Gandhi, Rémi Ronfard, Michael Gleicher. Multi-Clip Video Editing from a Single Viewpoint. CVMP 2014 – European Conference on Visual Media Production, London, United Kingdom, Nov. 2014.

Rémi Ronfard, Benoit Encelle, Nicolas Sauret, Pierre-Antoine Champin, Thomas Steiner, et al. Capturing and Indexing Rehearsals: The Design and Usage of a Digital Archive of Performing Arts. Digital Heritage, Granada, Spain, Sept. 2015.

Moneish Kumar, Vineet Gandhi, Rémi Ronfard, Michael Gleicher. Zooming On All Actors: Automatic Focus+Context Split Screen Video Generation. Computer Graphics Forum, Wiley, May 2017.

Joseph David. Répétitions en cours. La fabrique des monstres. Episode 1, Dec. 2017 (in French).

Aude Fourel. Répétitions en cours. La fabrique des monstres. Episode 2, Feb. 2018 (in French).

Maella Mickaelle. Répétitions en cours. La fabrique des monstres. Episode 3, June 2018 (in French).