Algorithmic differentiation in high-performance computing: challenges and opportunities in optimisation, uncertainty quantification, and machine learning

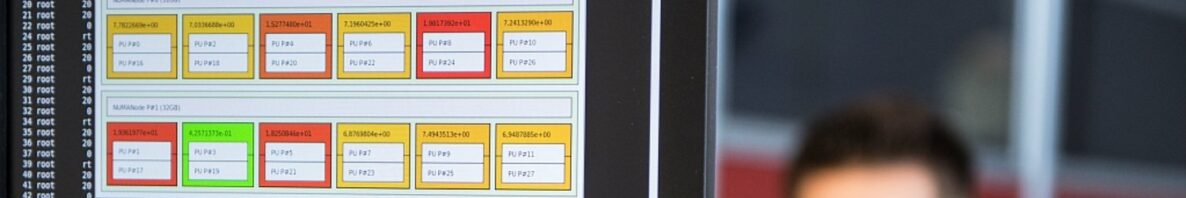

Gradients are useful in countless applications, e.g. gradient-based shape optimisation in structural dynamics, adjoint methods in weather forecasting, or the training of neural networks. Algorithmic differentiation (AD) is a technique for computing gradients of computer programs efficiently and without the truncation errors of finite differences, and it has undergone decades of development. This talk will give a brief overview of AD techniques and highlight some of the challenges that arise when differentiating code written for modern architectures such as multi-core and many-core processors, and code written in high-level languages such as C++ or Python. The talk will also present recent developments in the differentiation of shared-memory parallel fluid dynamics solvers for Intel Xeon Phi accelerators.
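To make the idea concrete, here is a minimal sketch, not taken from the talk, of reverse-mode AD by operator overloading in Python. Operator overloading is one common way AD is implemented for high-level languages such as Python or C++. The class `Var` and the functions `sin` and `backward` are hypothetical names chosen for illustration. A single forward evaluation records the computational graph, and a single reverse (adjoint) sweep then yields the derivative of the output with respect to every input.

```python
import math


class Var:
    """A scalar node in the computational graph (hypothetical, for illustration)."""

    def __init__(self, value, parents=()):
        self.value = value        # primal value computed in the forward pass
        self.parents = parents    # tuple of (parent Var, local partial derivative)
        self.adjoint = 0.0        # accumulated d(output)/d(self)

    def __add__(self, other):
        other = other if isinstance(other, Var) else Var(other)
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    __radd__ = __add__  # addition is commutative

    def __mul__(self, other):
        other = other if isinstance(other, Var) else Var(other)
        # d(a*b)/da = b, d(a*b)/db = a
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))

    __rmul__ = __mul__  # multiplication is commutative


def sin(x):
    # d(sin x)/dx = cos x
    return Var(math.sin(x.value), ((x, math.cos(x.value)),))


def backward(output):
    """Reverse sweep: propagate adjoints from the output back to the inputs."""
    # Depth-first post-order: every node is appended after all nodes it depends on.
    order, seen = [], set()

    def visit(node):
        if id(node) not in seen:
            seen.add(id(node))
            for parent, _ in node.parents:
                visit(parent)
            order.append(node)

    visit(output)
    output.adjoint = 1.0
    # Reversed order guarantees a node's adjoint is complete before it is propagated.
    for node in reversed(order):
        for parent, local in node.parents:
            parent.adjoint += local * node.adjoint


# f(x, y) = x * y + sin(x); analytically, df/dx = y + cos(x) and df/dy = x
x, y = Var(2.0), Var(3.0)
f = x * y + sin(x)
backward(f)
print(f.value, x.adjoint, y.adjoint)
```

The cost of the reverse sweep is a small constant multiple of the cost of evaluating `f`, independent of the number of inputs, which is why adjoint methods suit problems with many parameters and a single scalar objective. The recorded graph is also where the difficulties mentioned above appear: parallel code must have its adjoint accumulations made safe for concurrent writes.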

—

The talk will be in Room Alan Turing 2 at 10 am.