Multi-sensors fusion for daily living activities recognition in older people domain

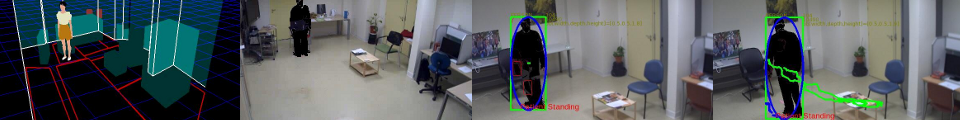

I am currently researching the use of Information and Communication Technologies (ICT) as tools for preventative care and diagnosis support in the elderly population. Our current approach uses accelerometers and video sensors to recognize instrumental activities of daily living (IADL; e.g., preparing coffee, making a phone call). Clinical studies have pointed…
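To show what "fusing" an accelerometer and a video sensor can mean in practice, here is a minimal sketch of one common option, weighted late (decision-level) fusion. It assumes each sensor already has its own classifier that outputs a confidence score per activity label. The function `late_fusion`, the parameter `accel_weight`, and the labels `prepare_coffee` and `phone_call` are hypothetical illustrations. This is not necessarily the fusion method used in this research.

```python
from typing import Dict, Tuple


def late_fusion(
    accel_scores: Dict[str, float],
    video_scores: Dict[str, float],
    accel_weight: float = 0.5,
) -> Tuple[str, Dict[str, float]]:
    """Combine per-activity confidences from two sensors by a weighted sum.

    Hypothetical sketch: assumes each sensor's classifier outputs a score per
    activity label, and that a missing label means a score of 0.0.
    Returns the winning label and the fused score for every label.
    """
    if not 0.0 <= accel_weight <= 1.0:
        raise ValueError("accel_weight must be in [0, 1]")
    video_weight = 1.0 - accel_weight

    # Consider every label seen by either sensor; sort for deterministic ties.
    labels = sorted(set(accel_scores) | set(video_scores))
    fused = {
        label: accel_weight * accel_scores.get(label, 0.0)
        + video_weight * video_scores.get(label, 0.0)
        for label in labels
    }
    # Pick the label with the highest fused confidence.
    best = max(labels, key=lambda label: fused[label])
    return best, fused


if __name__ == "__main__":
    accel = {"prepare_coffee": 0.6, "phone_call": 0.4}
    video = {"prepare_coffee": 0.3, "phone_call": 0.7}
    print(late_fusion(accel, video, accel_weight=0.5)[0])
    print(late_fusion(accel, video, accel_weight=0.8)[0])
```

With equal weights, the video evidence for `phone_call` dominates. Raising `accel_weight` to 0.8 flips the decision to `prepare_coffee`. Late fusion keeps each sensor's classifier independent, so one sensor can be retrained or dropped without touching the other. Early (feature-level) fusion, which concatenates sensor features before a single classifier, is the usual alternative.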