Acoustic Space Learning for Sound-Source Separation and Localization on Binaural Manifolds

Antoine Deleforge, Florence Forbes, and Radu Horaud

International Journal of Neural Systems, 25 (1), 2015

Abstract

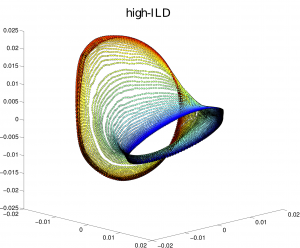

In this paper we address the problems of modeling the acoustic space generated by a full-spectrum sound source and of using the learned model for the localization and separation of multiple sources that simultaneously emit sparse-spectrum sounds. We lay theoretical and methodological grounds in order to introduce the binaural manifold paradigm. We perform an in-depth study of the latent low-dimensional structure of the high-dimensional interaural spectral data, based on a corpus recorded with a human-like audiomotor robot head. A non-linear dimensionality reduction technique is used to show that these data lie on a two-dimensional (2D) smooth manifold parameterized by the motor states of the listener, or equivalently, the sound source directions. We propose a probabilistic piecewise affine mapping model (PPAM) specifically designed to deal with high-dimensional data exhibiting an intrinsic piecewise linear structure. We derive a closed-form expectation-maximization (EM) procedure for estimating the model parameters, followed by Bayes inversion for obtaining the full posterior density function of a sound source direction. We extend this solution to deal with missing data and redundancy in real-world spectrograms, and hence for 2D localization of natural sound sources such as speech. We further generalize the model to the challenging case of multiple sound sources and we propose a variational EM framework. The associated algorithm, referred to as variational EM for source separation and localization (VESSL), yields a Bayesian estimation of the 2D locations and time-frequency masks of all the sources. Comparisons of the proposed approach with several existing methods reveal that the combination of acoustic-space learning with Bayesian inference enables our method to outperform state-of-the-art methods.

Code

All the results presented in this paper can be reproduced using the Supervised Binaural Mapping Matlab Toolbox, which includes the PPAM and VESSL algorithms.
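For readers who want a feel for the Bayes-inversion step mentioned in the abstract before opening the toolbox, the following is a minimal Python sketch of inverting a generic mixture of affine Gaussian maps. It is not taken from the Matlab toolbox and is not the authors' implementation. The function and variable names (`invert_piecewise_affine`, `pi`, `c`, `Gamma`, `A`, `b`, `sigma2`) are hypothetical, the noise is assumed isotropic, and the parameters are drawn at random instead of being estimated by EM. The sketch only uses standard Gaussian conditioning: given a high-dimensional observation `y`, it returns the posterior over the low-dimensional variable `x` as a Gaussian mixture.

```python
import numpy as np


def log_gauss(y, mean, cov):
    """Log-density of a multivariate Gaussian N(y; mean, cov)."""
    d = y - mean
    _, logdet = np.linalg.slogdet(cov)
    maha = d @ np.linalg.solve(cov, d)
    return -0.5 * (len(y) * np.log(2.0 * np.pi) + logdet + maha)


def invert_piecewise_affine(y, pi, c, Gamma, A, b, sigma2):
    """Posterior p(x | y) for a mixture of K affine Gaussian maps.

    Assumed forward model (illustrative, isotropic noise):
        x | Z=k ~ N(c[k], Gamma[k]),   y | x, Z=k ~ N(A[k] x + b[k], sigma2 * I)
    Returns the mixture weights, means and covariances of p(x | y).
    """
    K, D, L = A.shape
    log_w = np.empty(K)
    means = np.empty((K, L))
    covs = np.empty((K, L, L))
    for k in range(K):
        Gamma_inv = np.linalg.inv(Gamma[k])
        # Posterior covariance and mean of x for component k (Gaussian conditioning).
        covs[k] = np.linalg.inv(Gamma_inv + A[k].T @ A[k] / sigma2)
        means[k] = covs[k] @ (A[k].T @ (y - b[k]) / sigma2 + Gamma_inv @ c[k])
        # Marginal likelihood of y under component k gives the posterior weight.
        marg_cov = sigma2 * np.eye(D) + A[k] @ Gamma[k] @ A[k].T
        log_w[k] = np.log(pi[k]) + log_gauss(y, A[k] @ c[k] + b[k], marg_cov)
    w = np.exp(log_w - log_w.max())  # normalize in the log domain for stability
    w /= w.sum()
    return w, means, covs


# Toy example with random (not learned) parameters: K=2 regions, D=10, L=2.
rng = np.random.default_rng(0)
K, D, L = 2, 10, 2
A = rng.normal(size=(K, D, L))
b = rng.normal(size=(K, D))
c = np.array([[-1.0, 0.0], [1.0, 0.0]])
Gamma = np.stack([0.25 * np.eye(L)] * K)
pi = np.array([0.5, 0.5])
sigma2 = 1e-4

x_true = np.array([-0.8, 0.3])
y = A[0] @ x_true + b[0]  # noiseless observation generated by region 0

w, means, covs = invert_piecewise_affine(y, pi, c, Gamma, A, b, sigma2)
x_hat = w @ means  # posterior mean of x given y
```

In this toy setting the posterior mean `x_hat` can serve as a point estimate of the low-dimensional variable, while the full mixture (`w`, `means`, `covs`) keeps the uncertainty. Handling missing spectrogram entries and multiple sources, as described in the abstract, is beyond this sketch.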

Additional References

Antoine Deleforge; Radu Horaud; Yoav Schechner; Laurent Girin. Co-Localization of Audio Sources in Images Using Binaural Features and Locally-Linear Regression. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2015

Antoine Deleforge; Florence Forbes; Radu Horaud. Variational EM for Binaural Sound-Source Separation and Localization. ICASSP 2013 – 38th International Conference on Acoustics, Speech, and Signal Processing, May 2013, Vancouver, Canada. IEEE, pp. 76-80

Antoine Deleforge; Radu Horaud. 2D Sound-Source Localization on the Binaural Manifold. MLSP 2012 – IEEE Workshop on Machine Learning for Signal Processing, Sep 2012, Santander, Spain. IEEE, pp. 1-6

Antoine Deleforge; Radu Horaud. The Cocktail Party Robot: Sound Source Separation and Localisation with an Active Binaural Head. HRI 2012 – 7th ACM/IEEE International Conference on Human Robot Interaction, Mar 2012, Boston, United States. ACM, pp. 431-438

Antoine Deleforge; Radu Horaud. A Latently Constrained Mixture Model for Audio Source Separation and Localization. 10th International Conference on Latent Variable Analysis and Signal Separation, Mar 2012, Tel Aviv, Israel. Springer, Lecture Notes in Computer Science, vol. 7191, pp. 372-379