New paper on Deep Learning for Visual Servoing accepted at ICRA’18

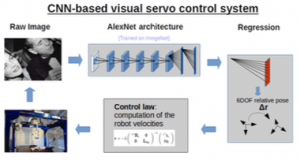

Q. Bateux, E. Marchand, J. Leitner, F. Chaumette, P. Corke. Training Deep Neural Networks for Visual Servoing. In IEEE Int. Conf. on Robotics and Automation, ICRA’18, Brisbane, Australia, May 2018.

We propose a deep neural network-based method to perform high-precision, robust, and real-time 6-DOF positioning tasks by visual servoing. We show that a convolutional neural network can be fine-tuned to estimate the relative pose between the current and desired images, and that a pose-based visual servoing (PBVS) control law can then be used to reach the desired pose. We also consider how to efficiently and automatically create the dataset used to train the network. Finally, we show that the proposed approach enables the training of a scene-agnostic network by feeding both the desired and current images into the deep network.
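To illustrate how an estimated relative pose can drive the robot, here is a minimal sketch of a classical PBVS control law from the visual servoing literature, with error e = (t, θu) regulated to zero. It assumes the network outputs the pose of the current camera frame expressed in the desired camera frame, as a rotation matrix `R` and translation `t`. The function names, the gain `lam`, and this output convention are illustrative assumptions, not the paper's code.

```python
import math


def rotation_to_theta_u(R):
    """Convert a 3x3 rotation matrix (nested lists) to the axis-angle vector theta*u.

    Sketch only: not numerically robust near theta = pi, where sin(theta) -> 0.
    """
    trace = R[0][0] + R[1][1] + R[2][2]
    # Clamp to [-1, 1] to guard acos against rounding error.
    cos_theta = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    theta = math.acos(cos_theta)
    # The skew-symmetric part of R equals 2*sin(theta)*u.
    w = (R[2][1] - R[1][2], R[0][2] - R[2][0], R[1][0] - R[0][1])
    if theta < 1e-9:
        # Small-rotation approximation: theta*u ~= w / 2.
        return tuple(0.5 * wi for wi in w)
    scale = theta / (2.0 * math.sin(theta))
    return tuple(scale * wi for wi in w)


def pbvs_velocity(R, t, lam=0.5):
    """Pose-based visual servoing law: v = -lam * R^T t, omega = -lam * theta*u.

    R, t: rotation (3x3 nested lists) and translation (3-tuple) of the current
    camera frame in the desired camera frame (assumed network output).
    Returns (v, omega), the linear and angular camera velocities.
    """
    # (R^T t)_i = sum_j R[j][i] * t[j]
    v = tuple(-lam * sum(R[j][i] * t[j] for j in range(3)) for i in range(3))
    omega = tuple(-lam * x for x in rotation_to_theta_u(R))
    return v, omega


# Usage: current camera rotated 0.2 rad about z and offset 0.1 along x.
a = 0.2
R_est = [[math.cos(a), -math.sin(a), 0.0],
         [math.sin(a), math.cos(a), 0.0],
         [0.0, 0.0, 1.0]]
v, omega = pbvs_velocity(R_est, (0.1, 0.0, 0.0), lam=0.5)
# omega is approximately (0.0, 0.0, -0.1)
```

In a servo loop, the network would re-estimate `R` and `t` from each new image pair and the resulting velocities would be sent to the robot; the gain `lam` sets the exponential decay rate of the error and would need tuning in practice.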