Efficient Extended Environments

Summary

The emergence of immersion tools such as Head-Mounted Displays (HMDs) and haptic interfaces makes it possible to create virtual or augmented environments in which the physical activity of interacting humans can be studied efficiently. Sports applications of this type raise a number of scientific questions. These include the quality of immersion, the fidelity of physical interactions with the environment, and the evaluation of training methods based on this paradigm (skills training). The last question concerns in particular the biases introduced into the perception-action loop (perception of one's own body, shifts in sensory stimuli, etc.). The same questions also arise in other applications, such as ergonomics and clinical settings.

Long term scientific objective

The emergence and accelerating maturation of virtual and augmented reality technologies are opening up enormous possibilities for the study of physical activity. These include sports, where there are many potential uses for analysis and training; ergonomics, where these tools can be used to design workstations or products and to test their impact in terms of physical risk; and disability, where they can be used in controlled learning or rehabilitation situations. All these applications raise major scientific issues. First, how can we ensure that what happens in the mixed environment transfers to the real world, that is, that interactions in the mixed environment are biomechanically faithful? This question requires us to consider both the interaction tools (multisensory feedback) and the population of the virtual environment (realistic humans, realistic collaborators, etc.). Second, we need to understand which levers can be used to disrupt the sensorimotor loop in order to improve the subject's performance or learning in a given situation. Finally, we need to analyse physical activity quickly enough to give the subject feedback in real time, which raises questions about the capture devices, the precision/performance trade-offs of the computations, and the methods for delivering feedback to the subject.

Short term goals and actions

We are currently working on the following goals:

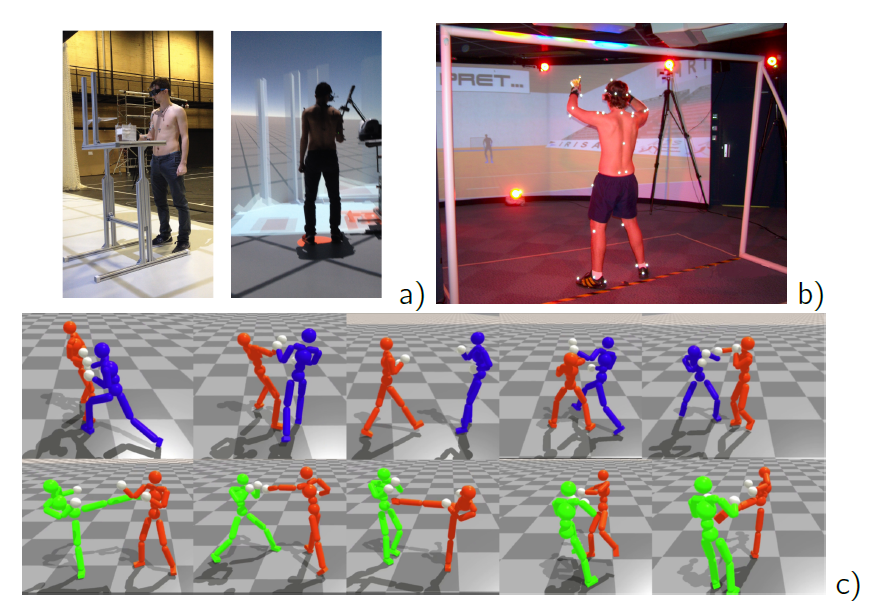

- Biomechanical assessment of manual wheelchair locomotion in a virtual environment: Within the ANR CAPACITIES project, we are assessing the biomechanical constraints involved in crossing representative environmental barriers (slopes, turns, …) in order to classify the accessibility of cities. To do so, we replay pre-recorded courses in a virtual environment while the subject propels an instrumented wheelchair used as an ergometer (a robotic system that emulates the behavior of the wheelchair). This environment is evaluated in terms of fidelity, immersion and interaction with the ergometer, to ensure that the results transfer to real-life situations.

- Performance Optimization using Virtual Reality: The PIA PPR REVEA project uses VR to propose a new generation of innovative and complementary training methods and frameworks, with the aim of increasing the number of medals won at the Paris 2024 Olympic Games. VR offers standardization, reproducibility and control, which make it possible to: 1) densify and vary very-high-performance training without increasing the associated physical load, while reducing the risk of impacts and high-intensity exercise; 2) allow injured athletes to continue training during their recovery period; 3) provide an objective, quantified assessment of athletes' performance and progress; and 4) offer highly varied training that, through appropriate biofeedback, improves learning retention and athletes' adaptability.

- Embodied Social Experiences in Hybrid Shared Spaces: Within the European HORIZON-CL4-2022-HUMAN-01-14 “ShareSpace” project, we explore how hybrid environments mixing real users and AI-driven characters could help train collaborative skills, such as managing attacks in cycling pelotons. The idea is to identify the available biomechanical variables that signal the preparation of an attack, and then to train partners to pick up the corresponding visual cues and anticipate the attack, by amplifying these variables in VR and AR and by providing biofeedback cues.

- Modeling the physical interaction between real and virtual agents in mixed reality applications: Building on the PhD thesis of Mohamed Younes, a new PhD student funded by the PEPR eNSEMBLE PC3 “MATCHING” project will explore how to model long-term, continuous physical collaboration between humans. Previous work has shown the value of deep reinforcement learning for imitating short-term physical interactions between humans when simulating several virtual humans. We now wish to extend this approach to continuous, long-term collaborations involving forces and physical interactions between a real and a virtual human.

Axis leader

Participants